Building upon my earlier post — SmartFlat AI: Building AWS Multi-Agent System — this article explores how to use Google’s ADK (Agent Development Kit) to create an intelligent, web-based multi-agent system. The agents will answer questions about Singapore’s HDB market, leveraging free models available through OpenRouter.

While the concept is similar to the AWS-based version, this project focuses on Google’s ADK ecosystem and demonstrates how to integrate it with the MCP Toolbox and PostgreSQL database for structured reasoning.

Prerequisites

For this post, I will be using MCP Toolbox for Databases to handle database connections and tool registration.

$VERSION = "0.18.0"

Invoke-WebRequest -Uri "https://storage.googleapis.com/genai-toolbox/v$VERSION/windows/amd64/toolbox.exe" -OutFile "toolbox.exe"

# To run Toolbox from binary

.\toolbox

# Or with the Toolbox UI

.\toolbox --uiWe’ll start by initializing a new Python project using

uv

— a fast Python package manager and environment tool.

mkdir adk-web-multi-agent

cd adk-web-multi-agent

# Initialize project

uv init

# Create and activate virtual environment

uv sync

.venv\Scripts\activate

# Add dependencies

uv add google-adk litellm toolbox-coreSample pyproject.toml:

[project]

name = "adk-web-multi-agent-system"

version = "0.1.0"

description = "Multi Agent System for Google ADK"

readme = "README.md"

requires-python = ">=3.12"

dependencies = [

"google-adk>=1.17.0",

"litellm>=1.78.7",

"toolbox-core>=0.5.2",

]Setting up PostgreSQL Database

For this demonstration, I used a locally hosted PostgreSQL database containing

resale flat prices from Jan 2017 onwards

.

Below is the DDL for the resale_transactions table:

CREATE TABLE public.resale_transactions (

"month" text NULL,

town text NULL,

flat_type text NULL,

block text NULL,

street_name text NULL,

storey_range text NULL,

floor_area_sqm text NULL,

flat_model text NULL,

lease_commence_date text NULL,

resale_price int4 NULL

);Configuring MCP Toolbox

Toolbox acts as the interface layer between ADK and the database.

Here’s a sample configuration using the default tools.yaml file:

sources:

my-pg-source:

kind: postgres

host: 127.0.0.1

port: 5432

database: postgres

user: postgres

password: postgres

tools:

postgres-list-tables:

kind: postgres-list-tables

source: my-pg-source

description: Use this tool to retrieve schema information for all or specified tables. Output format can be simple (only table names) or detailed.

execute-sql-tool:

kind: postgres-execute-sql

source: my-pg-source

description: Use this tool to execute sql statement.

list-hdb-flats-by-town:

kind: postgres-sql

source: my-pg-source

description: Finds the most recent 5 resale transaction records for HDB flats in the specified town

parameters:

- name: town

type: string

description: The town of the HDB flat

statement: SELECT * FROM public.resale_transactions t WHERE t.town ILIKE '%' || $1 || '%' ORDER BY t.month DESC LIMIT 5;

count-hdb-flats-by-town:

kind: postgres-sql

source: my-pg-source

description: Calculates the total number of HDB resale transactions that have occurred in the specified town since 2017

parameters:

- name: town

type: string

description: The town of the HDB flat

statement: SELECT COUNT(*) as count FROM public.resale_transactions t WHERE t.town ILIKE '%' || $1 || '%';

percentile-price-by-town:

kind: postgres-sql

source: my-pg-source

description: Calculates a specific resale price percentile (e.g., median price) based on all recorded HDB resale transactions in the specified town

parameters:

- name: town

type: string

description: The town to check the percentile price for

- name: percentile

type: float

description: The percentile to calculate (e.g., 0.5 for median, 0.9 for 90th percentile)

default: 0.5

statement: SELECT PERCENTILE_CONT($2) WITHIN GROUP (ORDER BY t.resale_price) AS percentile_price FROM public.resale_transactions t WHERE t.town ILIKE '%' || $1 || '%';

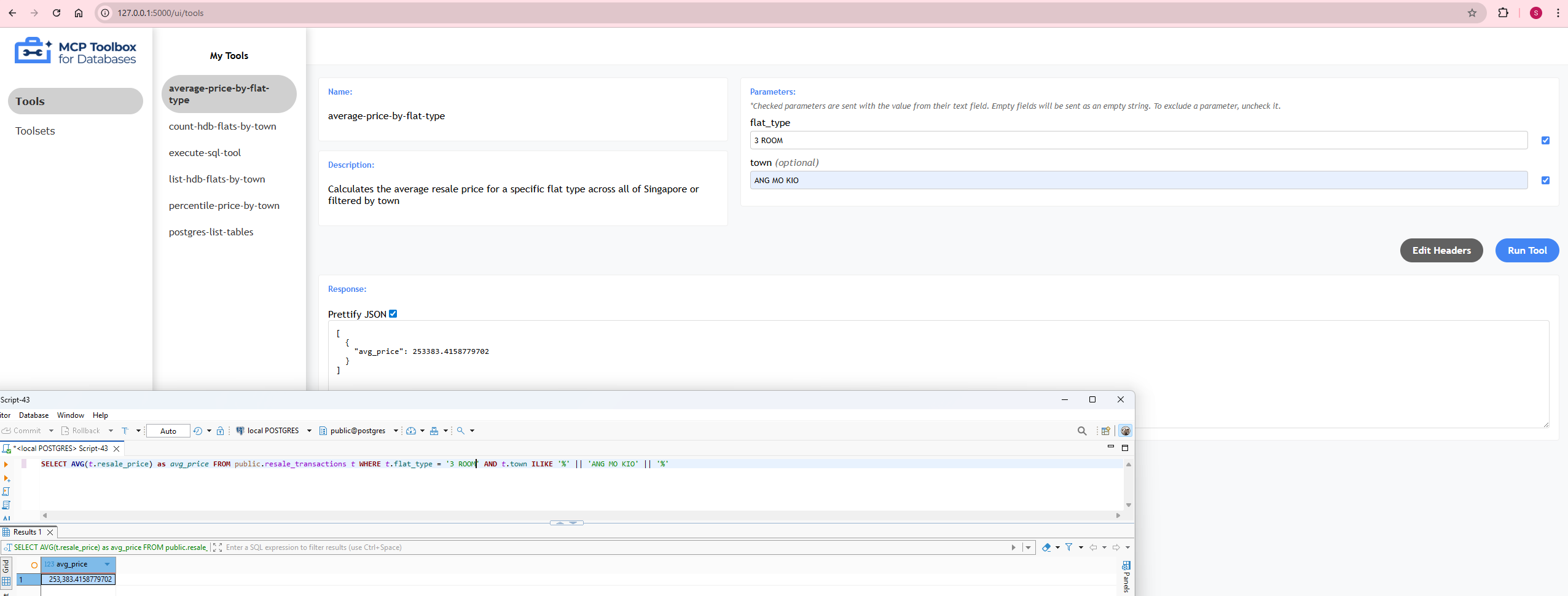

average-price-by-flat-type:

kind: postgres-sql

source: my-pg-source

description: Calculates the average resale price for a specific flat type across all of Singapore or filtered by town

parameters:

- name: flat_type

type: string

description: The type of flat (e.g., '3 ROOM', '4 ROOM', '5 ROOM')

- name: town

type: string

description: Optional town filter. Leave empty or use '%' for all of Singapore

default: '%'

statement: SELECT AVG(t.resale_price) as avg_price FROM public.resale_transactions t WHERE t.flat_type = $1 AND t.town ILIKE '%' || $2 || '%';

toolsets:

my-toolset:

- postgres-list-tables

- execute-sql-tool

- list-hdb-flats-by-town

- count-hdb-flats-by-town

- percentile-price-by-town

- average-price-by-flat-type

Building the Agent

With the tools configured, we can now create an ADK-powered database agent that interacts with PostgreSQL.

Step 1. Import Dependencies

# Import the necessary libraries

from google.adk.agents import Agent

from google.adk.models.lite_llm import LiteLlm

from google.adk.sessions import InMemorySessionService

from google.adk.runners import Runner

from google.genai import types

from typing import Optional, Dict

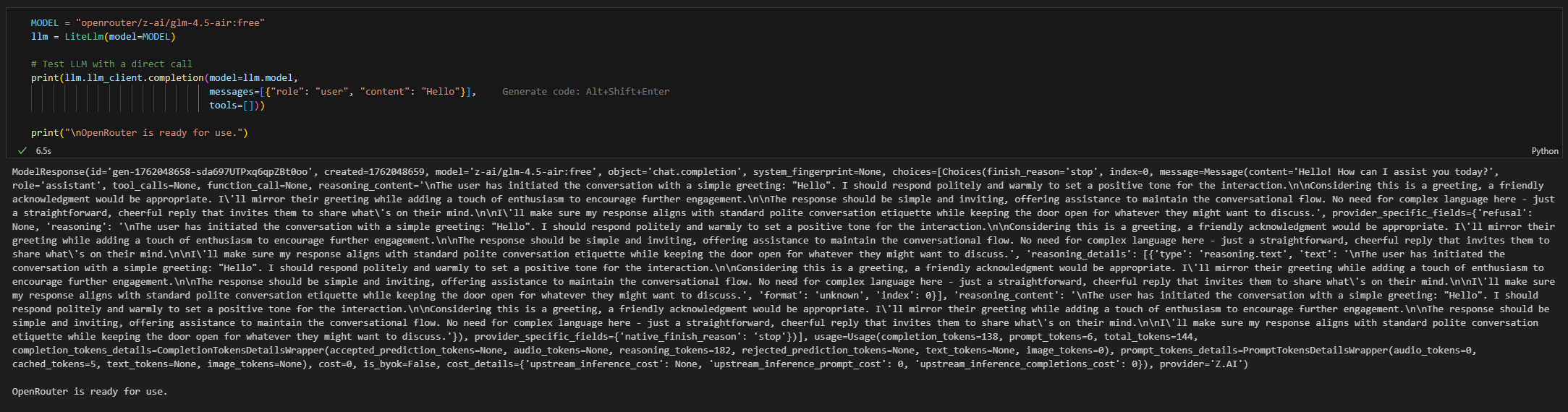

from toolbox_core import ToolboxSyncClientStep 2: Configure the Model

MODEL = "openrouter/z-ai/glm-4.5-air:free"

llm = LiteLlm(model=MODEL)

# Test LLM with a direct call

print(llm.llm_client.completion(model=llm.model,

messages=[{"role": "user", "content": "Hello"}],

tools=[]))

print("\nOpenRouter is ready for use.")

openrouter/minimax/minimax-m2:free produced inconsistent results, while openrouter/openai/gpt-oss-20b:free currently lacks tool-calling support.

Step 3. Load the Tools

client = ToolboxSyncClient("http://127.0.0.1:5000")

# This will load all tools

all_tools = client.load_toolset()

print(f"Loaded {all_tools} tools from MCP server")

# Sample Output

# Loaded [<toolbox_core.sync_tool.ToolboxSyncTool object at 0x0000021D4120F680>, <toolbox_core.sync_tool.ToolboxSyncTool object at 0x0000021D422D03B0>, <toolbox_core.sync_tool.ToolboxSyncTool object at 0x0000021D422D1970>, <toolbox_core.sync_tool.ToolboxSyncTool object at 0x0000021D422D0200>, <toolbox_core.sync_tool.ToolboxSyncTool object at 0x0000021D422D02C0>, <toolbox_core.sync_tool.ToolboxSyncTool object at 0x0000021D422D0410>] tools from MCP serverStep 4. Creates the Runner

# Global cache for runners (maintains sessions)

_runner_cache: Dict[str, tuple] = {}

async def chat_with_agent(

agent: Agent,

message: str,

user_id: str = "user_001",

session_id: Optional[str] = None,

maintain_session: bool = True,

verbose: bool = True

) -> str:

"""

Send a message to an agent and get the response.

"""

if session_id is None:

session_id = f"{agent.name}_session"

app_name = f"{agent.name}"

cache_key = f"{agent.name}_{user_id}_{session_id}"

# Reuse or create runner

if maintain_session and cache_key in _runner_cache:

runner = _runner_cache[cache_key]

else:

session_service = InMemorySessionService()

await session_service.create_session(

app_name=app_name,

user_id=user_id,

session_id=session_id

)

runner = Runner(

agent=agent,

app_name=app_name,

session_service=session_service

)

if maintain_session:

_runner_cache[cache_key] = runner

if verbose:

print(f"\n>>> User Message: {message}")

content = types.Content(role='user', parts=[types.Part(text=message)])

final_response_text = None

async for event in runner.run_async(user_id=user_id, session_id=session_id, new_message=content):

if verbose:

print(f"[Event] Author: {event.author}, Type: {type(event).__name__}, Final: {event.is_final_response()}")

if event.is_final_response():

if event.content and event.content.parts:

final_response_text = event.content.parts[0].text

elif event.actions and event.actions.escalate:

final_response_text = f"Agent escalated: {event.error_message or 'No specific message.'}"

break

if verbose:

print(f"<<< Agent Response: {final_response_text}")

return final_response_textStep 5. Creates the Agent

db_agent = Agent(

name="agents",

model=llm,

description="An intelligent database assistant for HDB resale transaction data",

instruction="""You are a helpful database assistant with access to HDB resale transaction data from 2017 onwards.

**Your Role:**

- Answer user questions using the available tools

- Always respond in clear, natural language

- Format prices with proper currency notation (e.g., $500,000)

- Format large numbers with commas for readability

**Response Guidelines:**

- After calling any tool, ALWAYS provide a complete natural language summary

- Never output raw JSON or technical data structures

- Be conversational and helpful

- If a query returns no results, explain this clearly

**Available Data:**

The database contains HDB resale transactions with information about prices, locations (towns), flat types, flat models, floor areas, lease dates, and transaction dates.

Use the available tools intelligently to answer user questions. Each tool's description explains when to use it.""",

tools=all_tools,

)

print(f"\nAgent '{db_agent.name}' created successfully with {len(all_tools)} tools")

# Sample output

# Agent 'database_agent' created successfully with 6 toolsStep 6. Tests the Agent

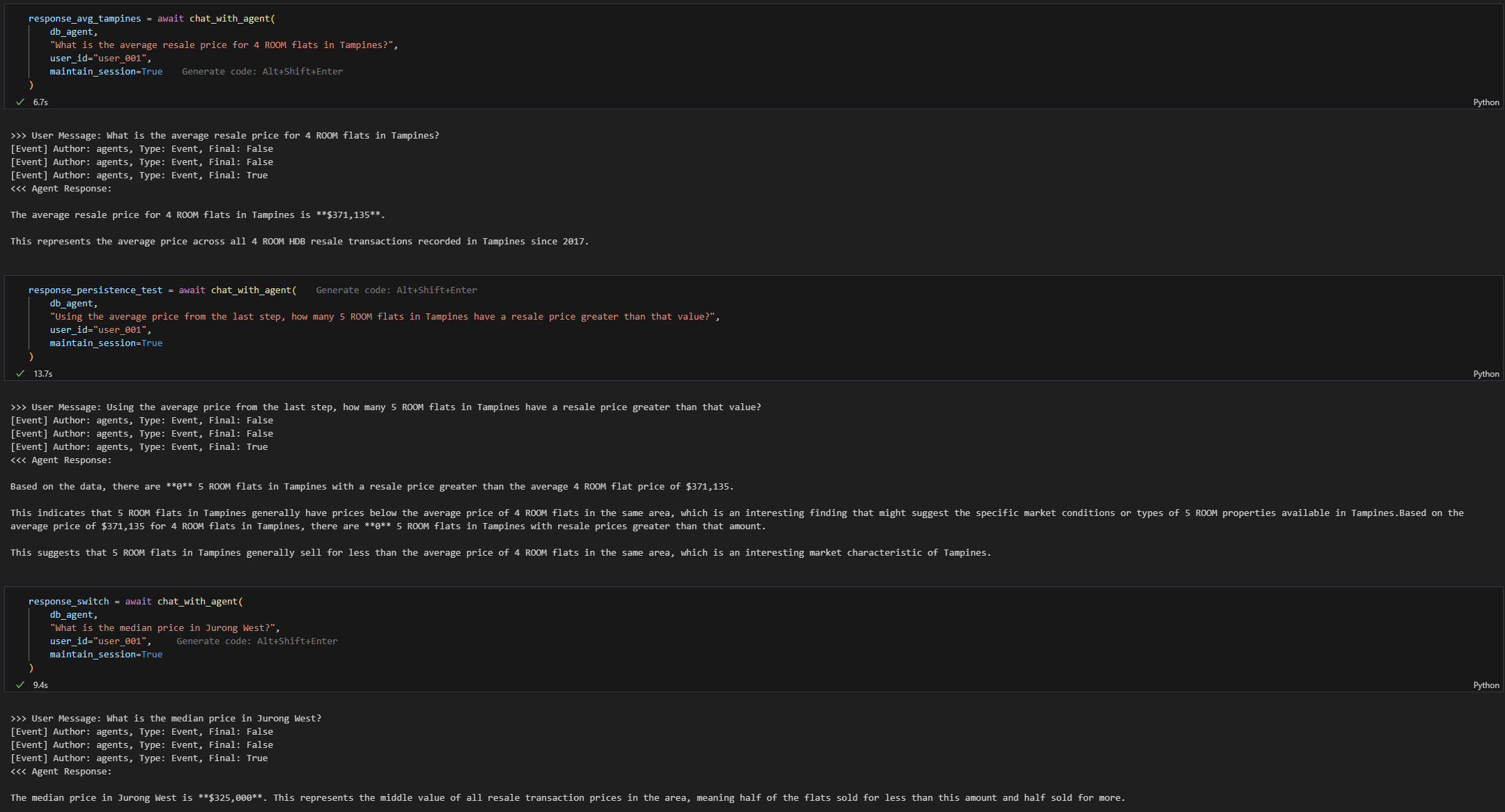

response_avg_tampines = await chat_with_agent(

db_agent,

"What is the average resale price for 4 ROOM flats in Tampines?",

user_id="user_001",

maintain_session=True

)

response_persistence_test = await chat_with_agent(

db_agent,

"Using the average price from the last step, how many 5 ROOM flats in Tampines have a resale price greater than that value?",

user_id="user_001",

maintain_session=True

)

response_switch = await chat_with_agent(

db_agent,

"What is the median price in Jurong West?",

user_id="user_001",

maintain_session=True

)

Step 7. Tests without Maintaining Session

response_memory = await chat_with_agent(

db_agent,

"What was the average price we calculated for 4 ROOM flats in Tampines?",

user_id="user_001",

maintain_session=True

)

# >>> User Message: What was the average price we calculated for 4 ROOM flats in Tampines?

# [Event] Author: agents, Type: Event, Final: True

# <<< Agent Response:

# The average price we calculated for 4 ROOM flats in Tampines was **$371,135**.

response_reset = await chat_with_agent(

db_agent,

"What is the total count of flats in Tampines?",

user_id="user_002",

session_id=None,

maintain_session=False

)

# >>> User Message: What is the total count of flats in Tampines?

# [Event] Author: agents, Type: Event, Final: False

# [Event] Author: agents, Type: Event, Final: False

# [Event] Author: agents, Type: Event, Final: True

# <<< Agent Response:

# Based on the HDB resale transaction data from 2017 onwards, there are **51,077** flats in Tampines.Datacommon API

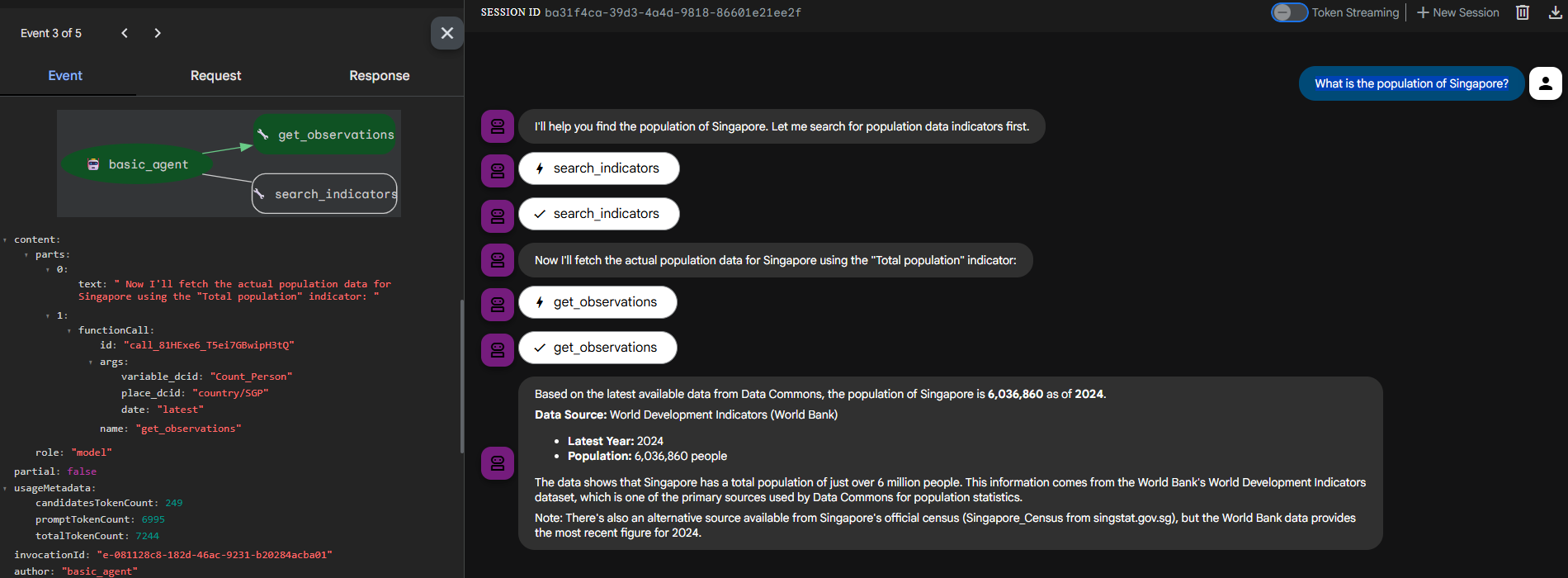

Data Commons provides a single source for publicly available statistical data. To run a local instance of DataCommons API :

uvx datacommons-mcp serve http --port 8000

Datacommon Agent

This is the agent.py:

# agents/datacommons_agent/agent.py

from google.adk.agents.llm_agent import LlmAgent

from google.adk.tools.mcp_tool.mcp_toolset import (

MCPToolset,

StreamableHTTPConnectionParams,

)

from google.adk.models.lite_llm import LiteLlm

from .instructions import AGENT_INSTRUCTIONS

MODEL = "openrouter/z-ai/glm-4.5-air:free"

llm = LiteLlm(model=MODEL)

root_agent = LlmAgent(

model=llm,

name="basic_agent",

instruction=AGENT_INSTRUCTIONS,

tools=[

MCPToolset(

connection_params=StreamableHTTPConnectionParams(

url=f"http://localhost:8001/mcp"

)

)

],

)ADK Web

Following Develop your own ADK Agent , let’s run with the following command:

adk webLet’s test with this question: “What is the population of Singapore?”

Coordinator / Dispatcher Pattern

To handle multiple data sources, we can use a Coordinator agent that intelligently delegates questions to the right specialist agent. If a question involves HDB resale data, it routes to the PostgresAgent; if it’s about global or demographic data, it delegates to the DataCommonsAgent.

# agents/coordinator.py

from google.adk.agents import LlmAgent

from google.adk.models.lite_llm import LiteLlm

from agents.datacommons_agent.agent import dc_agent as datacommons_agent

from agents.postgres_agent.agent import db_agent as postgres_agent

COORDINATOR_MODEL = "openrouter/z-ai/glm-4.5-air:free"

llm = LiteLlm(model=COORDINATOR_MODEL)

COORDINATOR_INSTRUCTION = """

You are the Master Coordinator for an intelligent assistant system. Your job is NOT to answer questions directly, but to delegate them to the correct specialist sub-agent.

**Delegate based on topic:**

1. **POSTGRESQL AGENT (`PostgresAgent`):** Route any request that involves **HDB resale transactions, flat prices, flat counts, town names (Tampines, Jurong West), or flat types ('4 ROOM', '3 ROOM')**. If the user mentions *any* HDB-specific term, delegate here.

2. **DATACOMMONS AGENT (`DataCommonsAgent`):** Route requests involving **global data, countries, continents, economics (GDP, income, inequality), health statistics, or general population data**.

3. **Other:** If the request is not clearly HDB-related and not clearly global data, default to routing to the `datacommons_agent` as it covers general knowledge.

**CRITICAL:** You must only output a **LLM-Driven Delegation call** using the format: `transfer_to_agent(agent_name='<target_agent_name>')`. Do not generate any other text or tool calls yourself.

"""

root_agent = LlmAgent(

name="MasterCoordinator",

model=llm,

instruction=COORDINATOR_INSTRUCTION,

description="Master router for HDB data and Global Data Commons queries.",

sub_agents=[

postgres_agent,

datacommons_agent

]

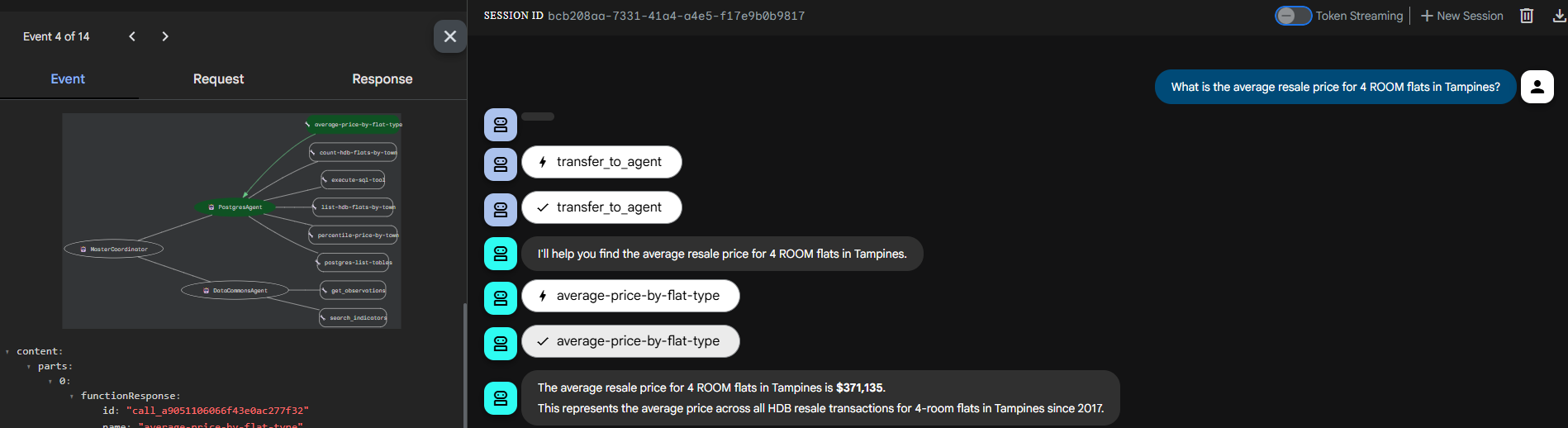

)Question 1: “What is the average resale price for 4 ROOM flats in Tampines?”

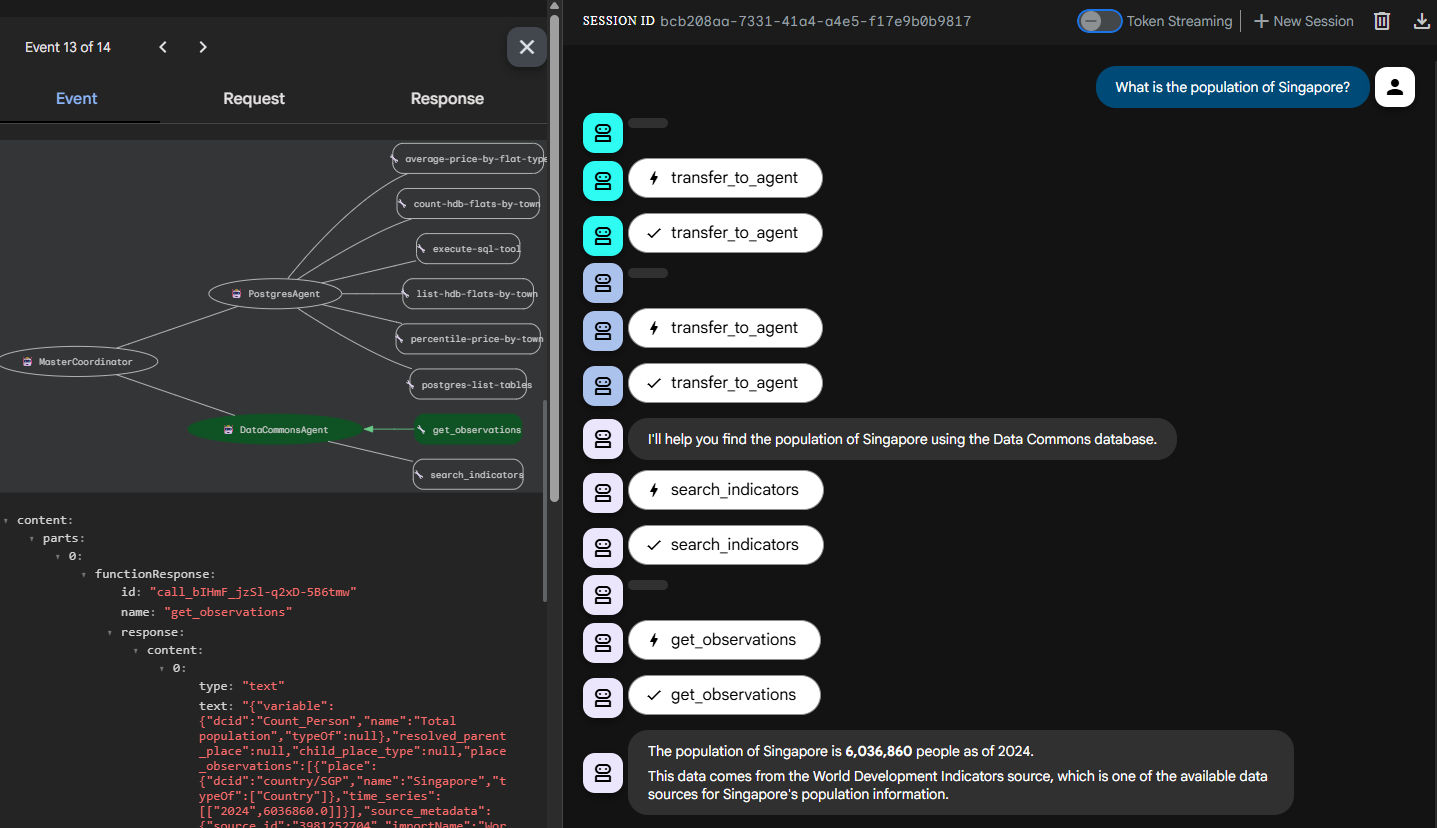

Question 2: “What is the population of Singapore?”

Conclusion

This project demonstrates how Google’s ADK can be combined with OpenRouter models and MCP Toolbox to create a fully functional, multi-agent system that integrates both structured (Postgres) and unstructured (DataCommons) data. By using a Coordinator/Dispatcher pattern, the system can scale to include more specialized agents — each handling its domain efficiently while maintaining a seamless conversational experience.

Future Work

In the next phase, I plan to extend the system using LangChain and LangGraph to bring more sophisticated agent orchestration and reasoning capabilities.

-

LangChain Integration: LangChain can serve as the backbone for chaining complex reasoning steps — such as combining property search, analytics, and neighborhood insights into a cohesive workflow. It will also simplify managing context between agents, enabling memory persistence and multi-turn query understanding.

-

LangGraph Exploration: LangGraph offers a declarative, graph-based approach to building multi-agent workflows. By defining agents as graph nodes and their interactions as edges, it becomes easier to visualize, debug, and evolve complex behaviors like task delegation, dependency management, and parallel execution.

Together, these enhancements would elevate the current architecture from a rule-based agent system to a truly autonomous, reasoning-driven network capable of learning from user interactions and adapting over time.