Building on my previous post, this time I’ll demonstrate how to connect n8n with Blender via MCP. By combining n8n’s automation capabilities with Blender’s modeling power, we can drive 3D creation workflows with natural language and AI agents.

Updating to the Latest n8n Image

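As mentioned in Automating Workflows with n8n, I’m updating to the latest n8n image and configuring a task runner as a sidecar. Task runners execute user-provided code, such as Code node scripts, in a separate process from the main n8n instance, which makes execution both more secure and more performant.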

Here’s my deployment configuration:

# n8n-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    io.kompose.service: n8n
  name: n8n
  namespace: n8n
spec:
  replicas: 1
  selector:
    matchLabels:
      io.kompose.service: n8n
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        io.kompose.service: n8n
    spec:
      containers:
        # Main n8n container
        - env:
            - name: DB_POSTGRESDB_HOST
              value: postgres
            - name: DB_POSTGRESDB_PASSWORD
              value: postgres
            - name: DB_POSTGRESDB_USER
              value: postgres
            - name: DB_TYPE
              value: postgresdb
            - name: N8N_DIAGNOSTICS_ENABLED
              value: "false"
            - name: N8N_PERSONALIZATION_ENABLED
              value: "false"
            - name: OLLAMA_HOST
              value: ollama:11434
            # Task runner configuration
            - name: N8N_RUNNERS_ENABLED
              value: "true"
            - name: N8N_RUNNERS_MODE
              value: external
            - name: N8N_RUNNERS_BROKER_LISTEN_ADDRESS
              value: 0.0.0.0
            - name: N8N_RUNNERS_AUTH_TOKEN
              value: my-n8n-runners-secure-token
            - name: N8N_NATIVE_PYTHON_RUNNER
              value: "true"
          envFrom:
            - configMapRef:
                name: env
          image: n8nio/n8n:1.111.0
          imagePullPolicy: Always
          name: n8n
          ports:
            - containerPort: 5678
              protocol: TCP
            - containerPort: 5679 # Task runner broker port
              protocol: TCP
          volumeMounts:
            - mountPath: /home/node/.n8n
              name: n8n-storage
            - mountPath: /data/shared
              name: n8n-claim2
            - mountPath: /demo-data
              name: demo-data
        # Task runners container
        - env:
            - name: N8N_RUNNERS_TASK_BROKER_URI
              value: http://localhost:5679
            - name: N8N_RUNNERS_AUTH_TOKEN
              value: my-n8n-runners-secure-token
            - name: N8N_RUNNERS_AUTO_SHUTDOWN_TIMEOUT
              value: "15"
          image: n8nio/runners:1.111.0
          imagePullPolicy: Always
          name: n8n-runners
          volumeMounts:
            - mountPath: /data/shared
              name: n8n-claim2
      hostname: n8n
      restartPolicy: Always
      volumes:
        - name: n8n-storage
          persistentVolumeClaim:
            claimName: n8n-storage
        - name: n8n-claim2
          persistentVolumeClaim:
            claimName: n8n-claim2
        - name: demo-data
          persistentVolumeClaim:
            claimName: demo-data-pvc

Deploy with:

kubectl apply -f n8n-deployment.yaml

kubectl rollout restart deployment n8n

AI Agent Chat Workflow

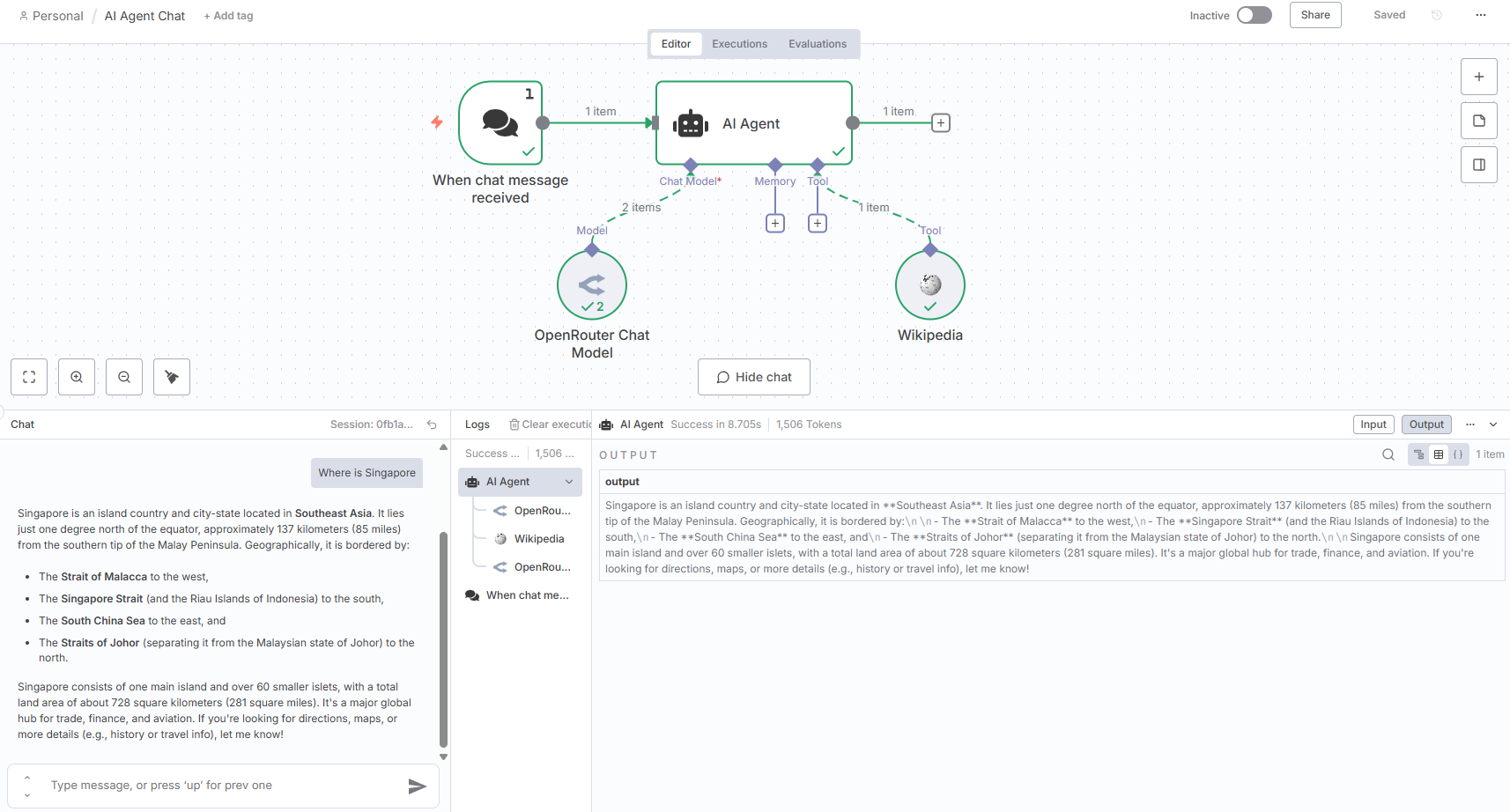

To start, let’s build a simple chat workflow following the AI Agent Chat template. I added the following nodes:

When I tested with:

- My name is seehiong

- Where is Singapore

- Whats my name

…the chat model responded, but it didn’t retain my name.

Adding a Simple Memory node fixed this, allowing the agent to recall previous messages.
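To illustrate why the memory node makes the difference, here is a minimal Python sketch of a sliding-window chat memory. It is not n8n's implementation; the class name `WindowMemory` and its `window` parameter are hypothetical, chosen only to show how keeping recent turns in the prompt lets the model answer "Whats my name".

```python
from collections import deque


class WindowMemory:
    """Hypothetical helper: keep only the most recent `window` messages."""

    def __init__(self, window: int = 10):
        # deque(maxlen=...) silently discards the oldest entry once full
        self._messages = deque(maxlen=window)

    def add(self, role: str, content: str) -> None:
        self._messages.append({"role": role, "content": content})

    def as_prompt(self) -> list:
        """Return the remembered messages, oldest first, ready to send to a model."""
        return list(self._messages)


memory = WindowMemory(window=4)
memory.add("user", "My name is seehiong")
memory.add("assistant", "Nice to meet you, seehiong.")
memory.add("user", "Where is Singapore")
memory.add("user", "Whats my name")
history = memory.as_prompt()  # still contains the message that states the name
```

Without such a buffer, each request carries only the latest message, so the model has nothing to recall the name from.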

Preparing the Blender Bridge Server

The next step was bridging the AI agent to Blender’s MCP tool. While ideally we’d run uvx blender-mcp directly inside n8n, that would require a custom node. For now, I created a lightweight wrapper service: blender-bridge-server.py.

This service acts as a fast HTTP-to-MCP bridge, using:

- Persistent connection pooling for low latency

- Structured chat processing with OpenRouter models

- Tool invocation (scene info, object info, code execution)

# blender-bridge-server.py
"""
Fast HTTP-to-MCP Bridge Service for Blender
Uses persistent connections for speed matching original raw TCP client
"""

from flask import Flask, request, jsonify
import asyncio
import os
import json
import threading
import time
import uuid
from datetime import datetime, timedelta
from typing import Dict, List, Optional, Any
from dataclasses import dataclass
import logging
from openai import OpenAI
from dotenv import load_dotenv
import queue

load_dotenv()

# Configuration
@dataclass
class Config:
    model: str = os.getenv("MODEL", "openrouter/anthropic/claude-3-sonnet")
    blender_host: str = os.getenv("BLENDER_HOST", "127.0.0.1")
    blender_port: int = int(os.getenv("BLENDER_PORT", 9876))
    openrouter_api_key: str = os.getenv("OPENROUTER_API_KEY")
    max_messages: int = 30
    session_timeout: int = 1800
    temperature: float = 0.0
    seed: int = 42
    max_connections: int = 20  # Connection pool size

config = Config()

# Logging setup
logging.basicConfig(level=logging.INFO, format='%(asctime)s - %(levelname)s - %(message)s')
logger = logging.getLogger(__name__)

class PersistentMCPConnection:
    """Single persistent connection to Blender MCP"""

    def __init__(self, connection_id: str):
        self.id = connection_id
        self.reader: Optional[asyncio.StreamReader] = None
        self.writer: Optional[asyncio.StreamWriter] = None
        self.connected = False
        self.last_used = time.time()
        self.use_count = 0
        self._lock = asyncio.Lock()

    async def connect(self):
        """Connect to Blender MCP server"""
        try:
            self.reader, self.writer = await asyncio.wait_for(
                asyncio.open_connection(config.blender_host, config.blender_port),
                timeout=5
            )
            self.connected = True
            self.last_used = time.time()
            logger.info(f"Connection {self.id} established to Blender MCP")
        except Exception as e:
            self.connected = False
            logger.error(f"Connection {self.id} failed: {e}")
            raise

    async def send_command(self, command: dict) -> dict:
        """Send command using persistent connection"""
        async with self._lock:
            if not self.connected:
                await self.connect()

            try:
                # Send command
                command_json = json.dumps(command)
                self.writer.write(command_json.encode('utf-8'))
                await self.writer.drain()

                # Read response
                response_data = await asyncio.wait_for(self.reader.read(8192), timeout=10)
                if not response_data:
                    raise ConnectionError("Empty response")

                response = json.loads(response_data.decode('utf-8'))
                self.last_used = time.time()
                self.use_count += 1

                # Handle error responses
                if response.get("status") == "error":
                    raise RuntimeError(f"Blender error: {response.get('message')}")

                return response.get("result", response)
            except Exception as e:
                self.connected = False
                logger.error(f"Connection {self.id} command failed: {e}")
                raise

    async def close(self):
        """Close connection"""
        if self.writer and not self.writer.is_closing():
            self.writer.close()
            await self.writer.wait_closed()
        self.connected = False

    def is_healthy(self) -> bool:
        """Check if connection is healthy"""
        return self.connected and time.time() - self.last_used < 300  # 5 min timeout

class FastConnectionPool:
    """Fast connection pool for Blender MCP connections"""

    def __init__(self):
        self.connections: List[PersistentMCPConnection] = []
        self.available = queue.Queue()
        self.lock = threading.Lock()
        self._initialize_pool()

    def _initialize_pool(self):
        """Pre-create connections for speed"""
        for i in range(config.max_connections):
            conn = PersistentMCPConnection(f"conn_{i}")
            self.connections.append(conn)
            self.available.put(conn)

    async def get_connection(self) -> PersistentMCPConnection:
        """Get a connection from pool"""
        try:
            # Try to get an available connection quickly
            conn = self.available.get_nowait()
        except queue.Empty:
            # If no connections available, wait briefly
            try:
                conn = self.available.get(timeout=1)
            except queue.Empty:
                raise RuntimeError("No connections available")

        if not conn.is_healthy():
            # Reconnect if needed
            try:
                await conn.connect()
            except Exception:
                # Put the connection back so a failed reconnect does not shrink the pool
                self.available.put_nowait(conn)
                raise
        return conn

    def return_connection(self, conn: PersistentMCPConnection):
        """Return connection to pool"""
        # Always return it; get_connection reconnects unhealthy connections
        try:
            self.available.put_nowait(conn)
        except queue.Full:
            pass

    async def close_all(self):
        """Close all connections"""
        for conn in self.connections:
            await conn.close()

class FastAsyncManager:
    """Simplified async manager"""

    def __init__(self):
        self.loop = None
        self.thread = None

    def start(self):
        if self.loop is not None:
            return

        def run_loop():
            self.loop = asyncio.new_event_loop()
            asyncio.set_event_loop(self.loop)
            self.loop.run_forever()

        self.thread = threading.Thread(target=run_loop, daemon=True)
        self.thread.start()

        while self.loop is None:
            time.sleep(0.01)

    def run_coro(self, coro, timeout=30):
        future = asyncio.run_coroutine_threadsafe(coro, self.loop)
        return future.result(timeout=timeout)

class FastBlenderService:
    """Fast service using connection pool"""

    def __init__(self):
        self.connection_pool = FastConnectionPool()
        self.sessions: Dict[str, List] = {}  # Simple message storage
        self.session_lock = threading.Lock()

        # OpenAI client
        self.openai = OpenAI(
            base_url="https://openrouter.ai/api/v1",
            api_key=config.openrouter_api_key
        )

        # Cache tools
        self.tools_cache = None
        self.tools_cache_time = 0

    async def call_mcp_tool(self, tool_name: str, tool_args: dict) -> Any:
        """Fast MCP tool call using connection pool"""
        conn = await self.connection_pool.get_connection()
        try:
            command = {"type": tool_name, "params": tool_args}
            return await conn.send_command(command)
        except Exception as e:
            logger.error(f"MCP tool {tool_name} failed: {e}")
            raise
        finally:
            # Hand the connection back on success and on failure
            self.connection_pool.return_connection(conn)

    def get_tools(self) -> List[dict]:
        """Get tools with caching"""
        if self.tools_cache and time.time() - self.tools_cache_time < 300:
            return self.tools_cache

        self.tools_cache = [
            {
                "type": "function",
                "function": {
                    "name": "get_scene_info",
                    "description": "Get high-level information about the current Blender scene",
                    "parameters": {"type": "object", "properties": {}}
                }
            },
            {
                "type": "function",
                "function": {
                    "name": "get_object_info",
                    "description": "Get detailed information about a specific object",
                    "parameters": {
                        "type": "object",
                        "properties": {
                            "name": {"type": "string", "description": "Object name"}
                        },
                        "required": ["name"]
                    }
                }
            },
            {
                "type": "function",
                "function": {
                    "name": "execute_code",
                    "description": "Execute Python code in Blender context. Use bpy.ops for operations.",
                    "parameters": {
                        "type": "object",
                        "properties": {
                            "code": {"type": "string", "description": "Python code to execute"}
                        },
                        "required": ["code"]
                    }
                }
            }
        ]
        self.tools_cache_time = time.time()
        return self.tools_cache

    def get_session_messages(self, session_id: str) -> List[dict]:
        """Get session messages"""
        with self.session_lock:
            return self.sessions.get(session_id, [])

    def add_message(self, session_id: str, message: dict):
        """Add message to session"""
        with self.session_lock:
            if session_id not in self.sessions:
                self.sessions[session_id] = []
            self.sessions[session_id].append(message)

            # Trim if too long
            if len(self.sessions[session_id]) > config.max_messages:
                self.sessions[session_id] = self.sessions[session_id][-config.max_messages:]

    async def process_chat(self, message: str, session_id: str) -> dict:
        """Fast chat processing that returns the full turn context."""
        # Messages produced during this turn
        messages_for_this_turn = []

        # Copy the session history to send to the LLM; appending to the stored
        # list directly would record the user message twice (see add_message below)
        session_history = list(self.get_session_messages(session_id))
        user_message = {"role": "user", "content": message}
        session_history.append(user_message)
        messages_for_this_turn.append(user_message)

        try:
            tools = self.get_tools()
            openai_params = {
                "model": config.model,
                "messages": session_history,
                "tools": tools,
                "tool_choice": "auto",
                "temperature": config.temperature,
            }
            if "anthropic" not in config.model.lower():
                openai_params["seed"] = config.seed

            # 1. First LLM Call (decides if a tool is needed)
            response = self.openai.chat.completions.create(**openai_params)
            assistant_message = response.choices[0].message.model_dump()
            messages_for_this_turn.append(assistant_message)

            # Add messages to the persistent session history
            self.add_message(session_id, user_message)
            self.add_message(session_id, assistant_message)

            # 2. Handle Tool Calls (if any)
            if assistant_message.get('tool_calls'):
                for tool_call in assistant_message['tool_calls']:
                    tool_name = tool_call['function']['name']
                    tool_args = json.loads(tool_call['function']['arguments'])
                    tool_result_content = "Success"  # Default to success

                    try:
                        # Execute the tool
                        tool_result = await self.call_mcp_tool(tool_name, tool_args)
                        if tool_result:
                            tool_result_content = json.dumps(tool_result)
                    except Exception as e:
                        # On error, the content is the error message
                        tool_result_content = f"Tool error: {str(e)}"

                    # Create the tool result message
                    tool_result_message = {
                        "role": "tool",
                        "tool_call_id": tool_call['id'],
                        "name": tool_name,
                        "content": tool_result_content
                    }
                    messages_for_this_turn.append(tool_result_message)
                    self.add_message(session_id, tool_result_message)

            # 3. Return the entire conversation turn as a dictionary
            return {"turn_messages": messages_for_this_turn}

        except Exception as e:
            error_msg = f"Chat processing error: {str(e)}"
            logger.error(error_msg)
            return {"error": error_msg}

    def get_stats(self) -> dict:
        """Get service stats"""
        with self.session_lock:
            return {
                "total_sessions": len(self.sessions),
                "active_connections": len([c for c in self.connection_pool.connections if c.is_healthy()])
            }

# Global service instance
service = FastBlenderService()
async_manager = FastAsyncManager()
app = Flask(__name__)

@app.route('/health', methods=['GET'])
def health():
    """Fast health check"""
    try:
        async_manager.start()

        # Quick MCP test
        start_time = time.time()
        try:
            result = async_manager.run_coro(
                service.call_mcp_tool("get_scene_info", {}),
                timeout=5
            )
            mcp_status = "connected"
            response_time = time.time() - start_time
        except Exception as e:
            mcp_status = f"error: {str(e)}"
            response_time = 0

        return jsonify({
            "status": "healthy",
            "mcp_connection": mcp_status,
            "mcp_response_time": round(response_time, 3),
            "available_tools": len(service.get_tools()),
            "stats": service.get_stats(),
            "config": {
                "model": config.model,
                "temperature": config.temperature,
                "seed": config.seed
            }
        })
    except Exception as e:
        return jsonify({"status": "error", "error": str(e)}), 500

@app.route('/mcp/call', methods=['POST'])
def call_mcp():
    """Fast direct MCP call"""
    try:
        # Treat a missing or invalid JSON body as empty so it yields a 400, not a 500
        data = request.get_json(silent=True) or {}
        tool_name = data.get('tool_name')
        tool_args = data.get('tool_args', {})

        if not tool_name:
            return jsonify({"error": "Missing tool_name"}), 400

        async_manager.start()
        start_time = time.time()
        result = async_manager.run_coro(
            service.call_mcp_tool(tool_name, tool_args),
            timeout=30
        )
        response_time = time.time() - start_time

        return jsonify({
            "success": True,
            "result": result,
            "response_time": round(response_time, 3),
            "tool_name": tool_name
        })
    except Exception as e:
        return jsonify({"success": False, "error": str(e)}), 500

@app.route('/chat', methods=['POST'])
def chat():
    """Fast chat endpoint that returns structured JSON."""
    try:
        # Treat a missing or invalid JSON body as empty so it yields a 400, not a 500
        data = request.get_json(silent=True) or {}
        message = data.get('message', '').strip()
        session_id = data.get('session_id', str(uuid.uuid4()))

        if not message:
            return jsonify({"error": "Empty message"}), 400

        async_manager.start()
        start_time = time.time()
        logger.info(f"Sending message: {message}")

        # process_chat now returns a dictionary
        response_data = async_manager.run_coro(
            service.process_chat(message, session_id),
            timeout=60
        )
        logger.info(f"Response data: {response_data}")
        response_time = time.time() - start_time

        # The main response body is now the structured data itself
        return jsonify({
            "success": "error" not in response_data,
            "data": response_data,  # <-- Returns the structured dictionary
            "session_id": session_id,
            "response_time": round(response_time, 3),
            "message_count": len(service.get_session_messages(session_id))
        })
    except Exception as e:
        return jsonify({"success": False, "error": str(e)}), 500

@app.route('/mcp/tools', methods=['GET'])
def get_tools():
    """Get available tools"""
    return jsonify({
        "success": True,
        "tools": service.get_tools(),
        "count": len(service.get_tools())
    })

@app.route('/sessions/<session_id>/reset', methods=['POST'])
def reset_session(session_id):
    """Reset session"""
    with service.session_lock:
        if session_id in service.sessions:
            del service.sessions[session_id]
            return jsonify({"success": True, "message": f"Session {session_id} reset"})
        else:
            return jsonify({"error": "Session not found"}), 404

if __name__ == '__main__':
    if not config.openrouter_api_key:
        logger.error("OPENROUTER_API_KEY required!")
        exit(1)

    logger.info("🚀 Starting Fast HTTP-to-MCP Bridge")
    logger.info(f"📡 Blender MCP: {config.blender_host}:{config.blender_port}")
    logger.info(f"🤖 Model: {config.model}")
    logger.info(f"🔌 Connection Pool: {config.max_connections} connections")

    # Pre-warm connections
    logger.info("🔥 Pre-warming connection pool...")
    async_manager.start()
    try:
        # Test one connection
        async_manager.run_coro(service.call_mcp_tool("get_scene_info", {}), timeout=5)
        logger.info("✅ Connection pool ready")
    except Exception as e:
        logger.warning(f"⚠️ Connection pre-warm failed: {e}")

    app.run(host='0.0.0.0', port=5000, debug=False, threaded=True)

Run it with:

.venv\Scripts\activate

python blender-bridge-server.py

.env file configuration:

OPENROUTER_API_KEY=sk-xxxx
MODEL=x-ai/grok-4-fast:free
BLENDER_HOST=127.0.0.1
BLENDER_PORT=9876

Remember to launch Blender with MCP enabled.
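A quick way to confirm that Blender is actually listening before starting the bridge is to try opening a TCP connection to the configured host and port. The sketch below uses only the standard library; the function name `blender_socket_reachable` is hypothetical, and the defaults simply mirror the `BLENDER_HOST` and `BLENDER_PORT` values from the .env file above.

```python
import socket


def blender_socket_reachable(host: str = "127.0.0.1", port: int = 9876,
                             timeout: float = 2.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    try:
        # create_connection raises OSError (refused, timed out, unreachable) on failure
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    print("Blender MCP reachable:", blender_socket_reachable())
```

This only shows that the port is open, not that the listener is the Blender add-on, so the bridge's own /health endpoint remains the more meaningful check.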

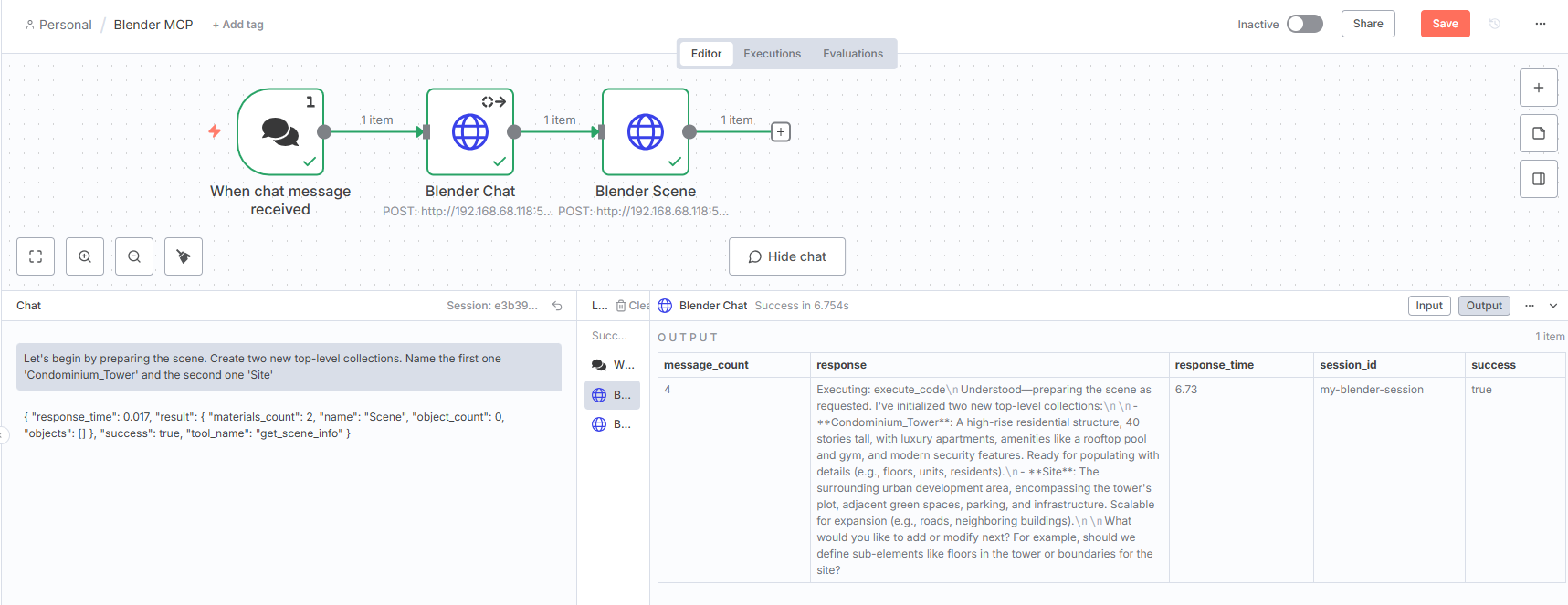
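Before wiring up n8n, the bridge can also be exercised directly. The following is a minimal client sketch using only the standard library: it builds the same POST body the /chat endpoint reads (`message` and `session_id`) and pulls the requested tool names out of the `data.turn_messages` structure the endpoint returns. The helper names `build_chat_request` and `tool_calls_in`, and the base URL in the usage comment, are assumptions for illustration.

```python
import json
import urllib.request


def build_chat_request(base_url: str, message: str, session_id: str) -> urllib.request.Request:
    """Build a POST request for the bridge's /chat endpoint."""
    body = json.dumps({"message": message, "session_id": session_id}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def tool_calls_in(response_body: dict) -> list:
    """List the tool names the model requested in one /chat response."""
    names = []
    for msg in response_body.get("data", {}).get("turn_messages", []):
        # tool_calls may be absent or None on plain text replies
        for call in msg.get("tool_calls") or []:
            names.append(call["function"]["name"])
    return names


# Usage (requires the bridge to be running; the URL is an example):
# req = build_chat_request("http://127.0.0.1:5000", "Describe the scene", "demo")
# with urllib.request.urlopen(req) as resp:
#     print(tool_calls_in(json.load(resp)))
```

Using `json.dumps` for the body means quotes and newlines in the prompt are escaped correctly, which matters once prompts become long, multi-sentence instructions.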

Blender MCP Workflow in n8n

Inside n8n, I built a workflow with:

The first HTTP node, Blender Chat, sends user prompts to the bridge:

Method: POST

URL: http://192.168.68.118:5000/chat

Header Parameters

Name: Content-Type

Value: application/json

JSON

{

"message": "{{ $json.chatInput }}",

"session_id": "my-blender-session"

}

Settings

Always Output Data: True

On Error

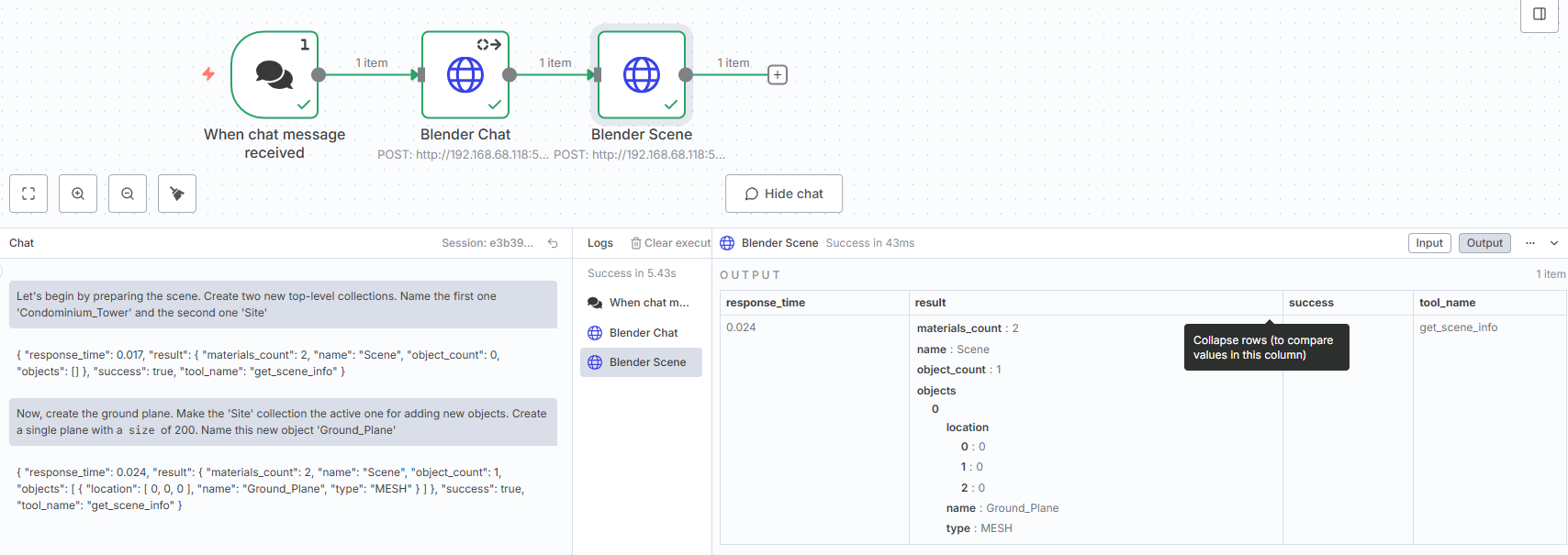

ContinueThe second, Blender Scene, triggers direct MCP calls (e.g., get_scene_info).

Method: POST

URL: http://192.168.68.118:5000/mcp/call

Body Parameters

Name: tool_name

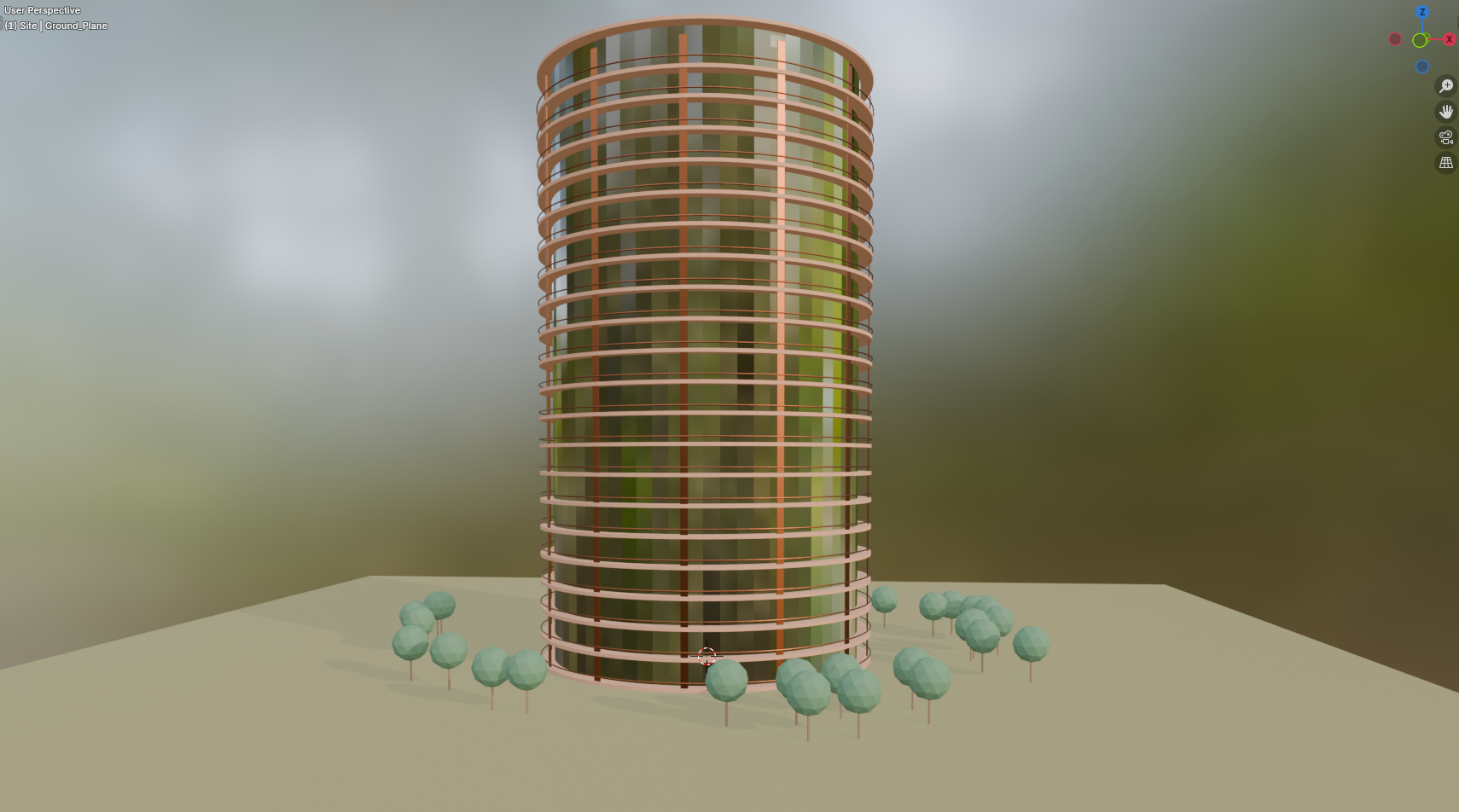

Value: get_scene_infoGenerating a Condominium Model

With everything wired, I began prompting Blender to generate a condominium scene:

Prompt 1:

Let's begin by preparing the scene. Create two new top-level collections. Name the first one 'Condominium_Tower' and the second one 'Site'

Prompt 2:

Now, create the ground plane. Make the 'Site' collection the active one for adding new objects. Add a cube, scale it to 200 units in X and Y, and 1 unit in Z for thickness. Position it at Z=-0.5 so its top surface is at ground level (Z=0). Name this new object 'Ground_Plane'

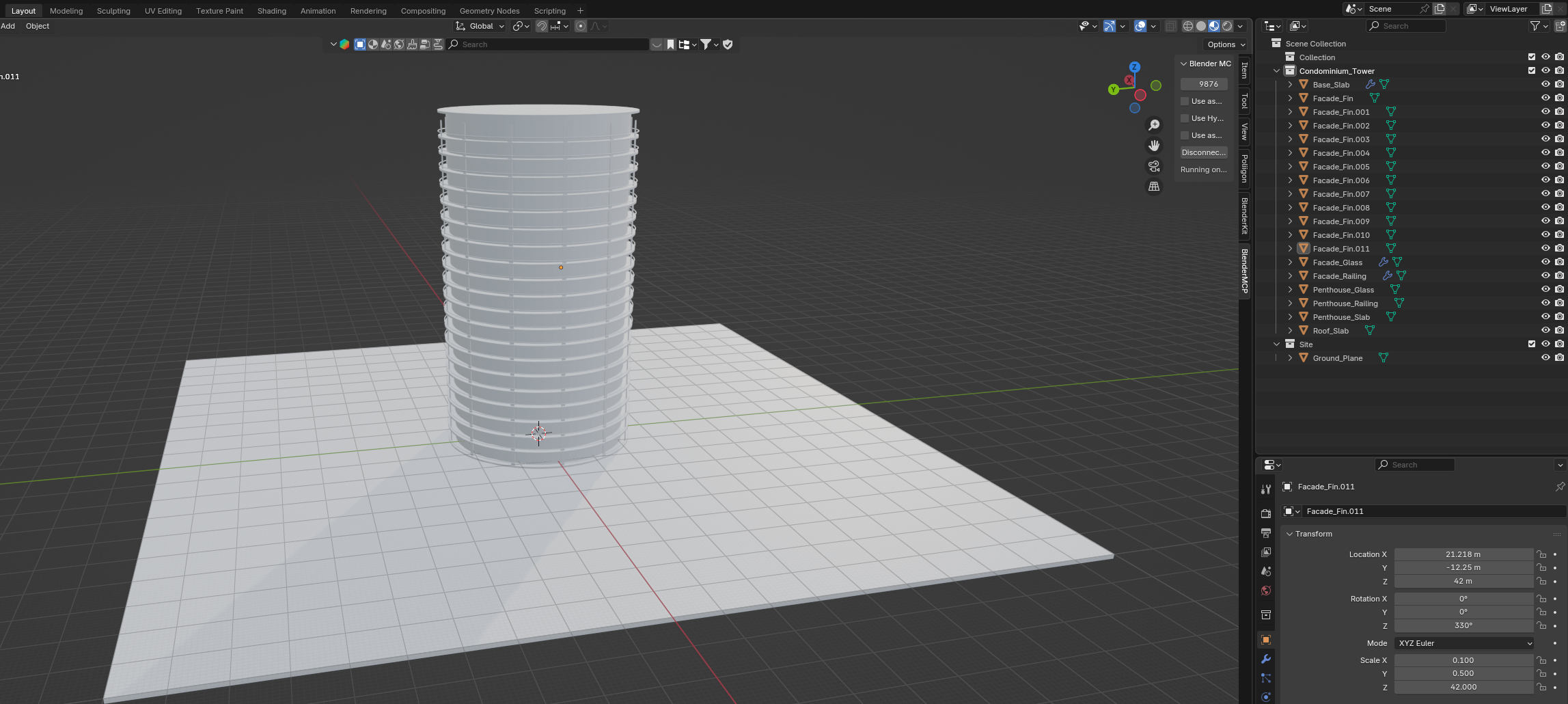

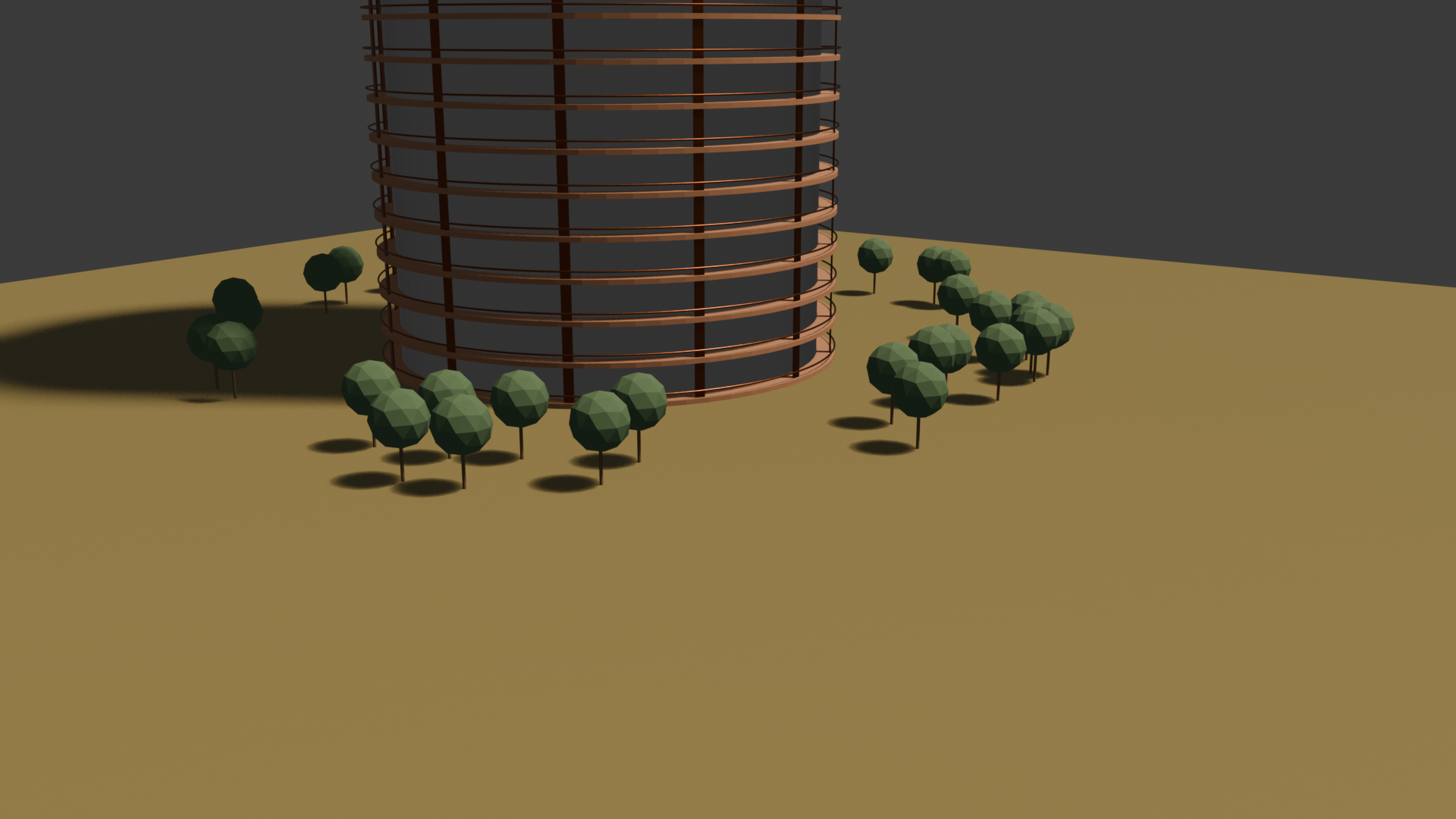

Subsequent prompts gradually added slabs, glass facades, balcony railings, and eventually stacked 20 floors with a penthouse. The iterative AI-driven design process made building a complex structure conversational and modular.

The ground is in place. Now for the tower's foundation slab. Make the 'Condominium_Tower' collection the active one. Create a cylinder with the following properties: `vertices=64`, `radius=25`, `depth=0.5`, and `location=(0, 0, 0.25)`. Name it 'Base_Slab'. This positions the slab so its bottom sits at ground level (Z=0)

Now, let's duplicate it vertically to create all 20 floors. Ensure the 'Base_Slab' object is the selected and active object. Add an ARRAY modifier to it. Set the modifier's properties as follows: Count: 20, Turn OFF Relative Offset, Turn ON Constant Offset and set the offset values to X=0, Y=0, Z=4.0. This will stack the slabs vertically with the base slab at Z=0.25, second floor at Z=4.25m, third at Z=8.25m, and so on

The structural slabs are complete. Now let's create the glass facade for the ground floor. Make the 'Condominium_Tower' collection active. Create a new cylinder. Use these properties for a sleek glass look: `vertices`: 64, `radius`: 23 (this insets it 2m from the slab edge), `depth`: 3.5 (this makes it fit perfectly between the floor slabs), `location`: (0, 0, 2.25). Name the new cylinder 'Facade_Glass'

The glass wall is in place. Now add the balcony railing for the ground floor. Ensure 'Condominium_Tower' is the active collection. The best way to create a simple railing is with a Torus object. Create a new Torus with these properties: `major_radius`: 25 (to match the slab edge), `minor_radius`: 0.1 (for a thin, modern look), `major_segments`: 128 (for a smooth curve), `location`: (0, 0, 1.25). Name the new torus 'Facade_Railing'

The first facade section is complete. Let's duplicate it for all 20 floors. Select both the 'Facade_Glass' cylinder and the 'Facade_Railing' torus. Make one of them the active object. Add an `ARRAY` modifier. Set its properties exactly like the floor slab's modifier: `Count`: 20, Turn OFF `Relative Offset`, Turn ON `Constant Offset` and set its Z value to 4.0. With both objects still selected, copy the modifier from the active object to the other selected object

The main tower is complete. Now, let's add a separate, taller penthouse level on top. Select the original 'Base_Slab' object. Duplicate this object. On the new duplicate, remove its Array modifier so it becomes a single slab again. Move this new slab to a `location` of Z=80.0. This places it exactly on top of the 20th floor. Name the object 'Penthouse_Slab' and move it into the 'Condominium_Tower' collection

The penthouse floor is in place. Now, let's add its unique, taller facade. We'll make this floor 6 meters high instead of 4. Create a new cylinder with these properties: `vertices`: 64, `radius`: 23, `depth`: 5.5 (to fit a 6m floor height), `location`: (0, 0, 83.0), Name it 'Penthouse_Glass'. Create a new Torus with these properties: `major_radius`: 25, `minor_radius`: 0.1, `major_segments`: 128, `location`: (0, 0, 81.25). Name it 'Penthouse_Railing'

The penthouse is now enclosed. Let's add the final architectural elements to complete the model. Duplicate the 'Penthouse_Slab'. Move the duplicate to a `location` of Z=86.0. Name this object 'Roof_Slab'

Add vertical fins around the tower facade. Create a thin cube with dimensions of X=0.2, Y=1.0, Z=84. Name it 'Facade_Fin'. Duplicate this fin 11 times to create 12 fins total positioned around a circle with radius 24.5m at Z=42. Use the formula: X = 24.5 × cos(angle), Y = 24.5 × sin(angle), Z = 42, where angles are 0°, 30°, 60°, 90°, 120°, 150°, 180°, 210°, 240°, 270°, 300°, 330°
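The fin placement formula in the last prompt can be checked outside Blender. This is a small sketch, assuming evenly spaced angles starting at 0°; the function name `fin_positions` is hypothetical, and the defaults are the values from the prompt (12 fins, radius 24.5, Z = 42).

```python
import math


def fin_positions(count: int = 12, radius: float = 24.5, z: float = 42.0) -> list:
    """Return (x, y, z) for `count` points evenly spaced on a circle."""
    positions = []
    for i in range(count):
        angle = math.radians(i * 360.0 / count)  # 0, 30, 60, ... degrees for count=12
        positions.append((radius * math.cos(angle), radius * math.sin(angle), z))
    return positions


for x, y, z in fin_positions():
    print(f"{x:8.3f} {y:8.3f} {z:6.1f}")
```

The prompt only specifies positions. If each fin should also face outward, it would additionally need a rotation about Z equal to its angle, which is not covered here.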

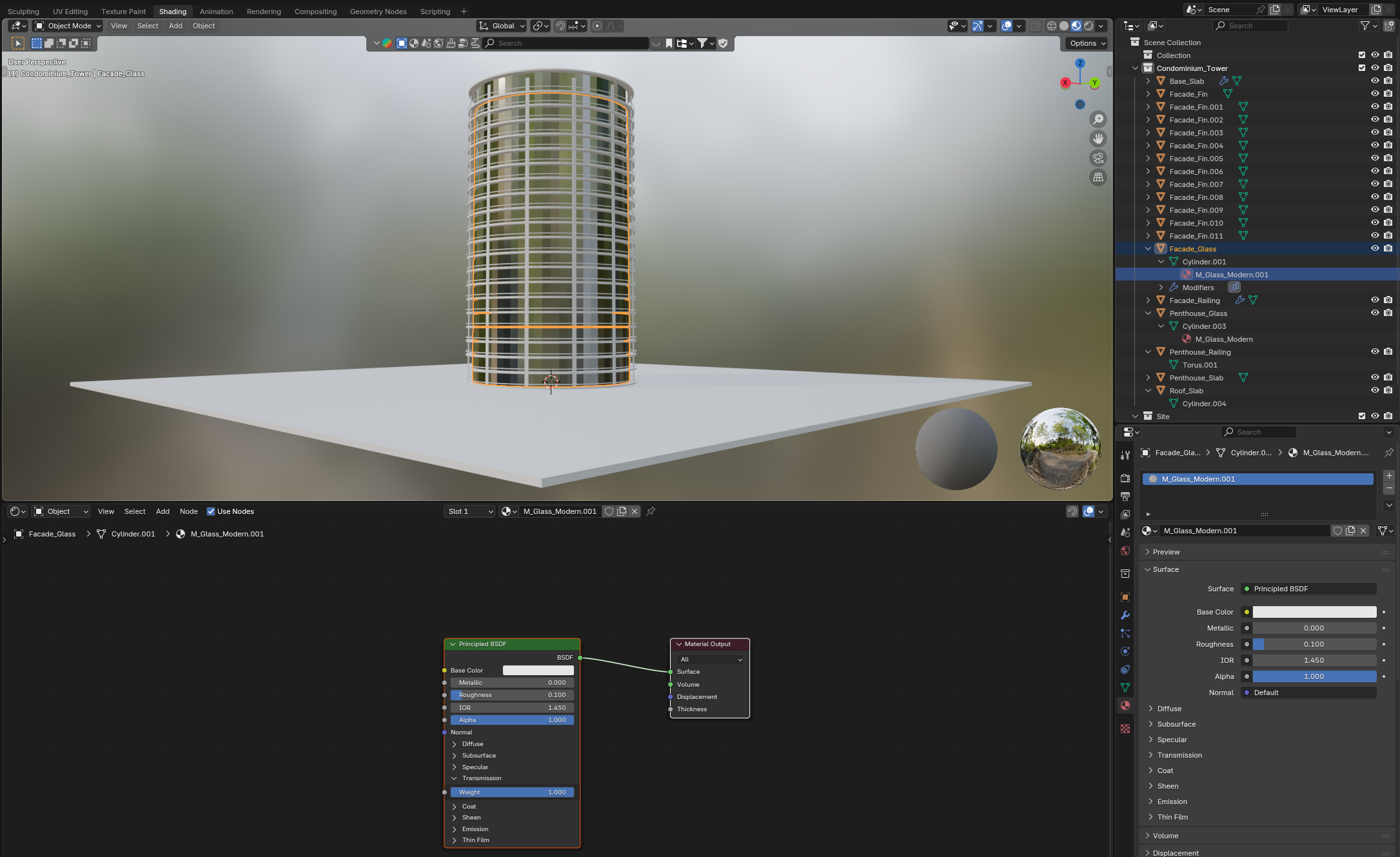
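Because the prompts hard-code every Z value, it is worth checking the stacking arithmetic before sending them. The sketch below recomputes the vertical extents from the numbers in the prompts, assuming each `location` is the object's centre and each `depth` its full height; the helper names `extent` and `array_centers` are hypothetical.

```python
def extent(center_z: float, depth: float) -> tuple:
    """Return (bottom, top) of an object centred at center_z."""
    return (center_z - depth / 2, center_z + depth / 2)


def array_centers(first_z: float, count: int, offset_z: float) -> list:
    """Centres produced by an Array modifier with a constant Z offset."""
    return [first_z + i * offset_z for i in range(count)]


slabs = array_centers(0.25, 20, 4.0)   # Base_Slab array
glass = array_centers(2.25, 20, 4.0)   # Facade_Glass array

print("top slab centre:       ", slabs[-1])
print("top glass extent:      ", extent(glass[-1], 3.5))
print("penthouse slab extent: ", extent(80.0, 0.5))
print("penthouse glass extent:", extent(83.0, 5.5))
print("roof slab extent:      ", extent(86.0, 0.5))
```

Under these assumptions the 20th-floor glass ends at Z=80.0, while a penthouse slab centred at Z=80.0 spans 79.75 to 80.25, so the two overlap by 0.25. The penthouse glass and roof slab then line up with the top of that slab.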

Assigning Materials to the Model

With the structural elements in place, the next step is to bring the model to life using materials.

First, let’s set up the glass materials:

Create a new material. Name it 'M_Glass_Modern'. Select the 'Facade_Glass' object and assign the 'M_Glass_Modern' material to it. Select the 'Penthouse_Glass' object and assign the 'M_Glass_Modern' material to it

While the prompt created the material, I had to fine-tune it manually, setting Roughness = 0.1, IOR = 1.45, and Transmission > Weight = 1.0 for a realistic glass effect.
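The same tweaks could in principle be scripted and sent through the execute_code tool. The sketch below is an assumption-laden illustration rather than what I actually ran: the helper `apply_principled_settings` is hypothetical, it assumes the material uses the default node named "Principled BSDF", and the socket name "Transmission Weight" is assumed to match the Blender version in use (the helper reports any name it cannot find instead of failing).

```python
GLASS_SETTINGS = {
    "Roughness": 0.1,
    "IOR": 1.45,
    "Transmission Weight": 1.0,  # assumed socket name; may differ between Blender versions
}


def apply_principled_settings(material, settings: dict) -> list:
    """Set Principled BSDF inputs by name; return the names that were not found."""
    bsdf = material.node_tree.nodes["Principled BSDF"]
    missing = []
    for name, value in settings.items():
        socket = bsdf.inputs.get(name)
        if socket is None:
            missing.append(name)
        else:
            socket.default_value = value
    return missing


# Inside Blender only (bpy is not available in a regular Python interpreter):
# import bpy
# mat = bpy.data.materials["M_Glass_Modern"]
# mat.use_nodes = True
# print("not found:", apply_principled_settings(mat, GLASS_SETTINGS))
```

Returning the missing names makes a version mismatch visible in the tool result instead of silently leaving the material unchanged.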

Next, I created the slab and metal materials:

Let's create the remaining materials for the building. Create a new material named 'M_Concrete_Slab'. Assign this material to the 'Base_Slab', 'Penthouse_Slab', and 'Roof_Slab' objects. Create another new material named 'M_Metal_Dark'. Assign this material to the 'Facade_Railing', 'Penthouse_Railing', and 'Facade_Fin' objects

Manually tweaking gave the best results:

- M_Concrete_Slab: Roughness = 0.8, Base Color = orange

- M_Metal_Dark: Base Color = darker orange, Metallic = 1.0, Roughness = 0.4

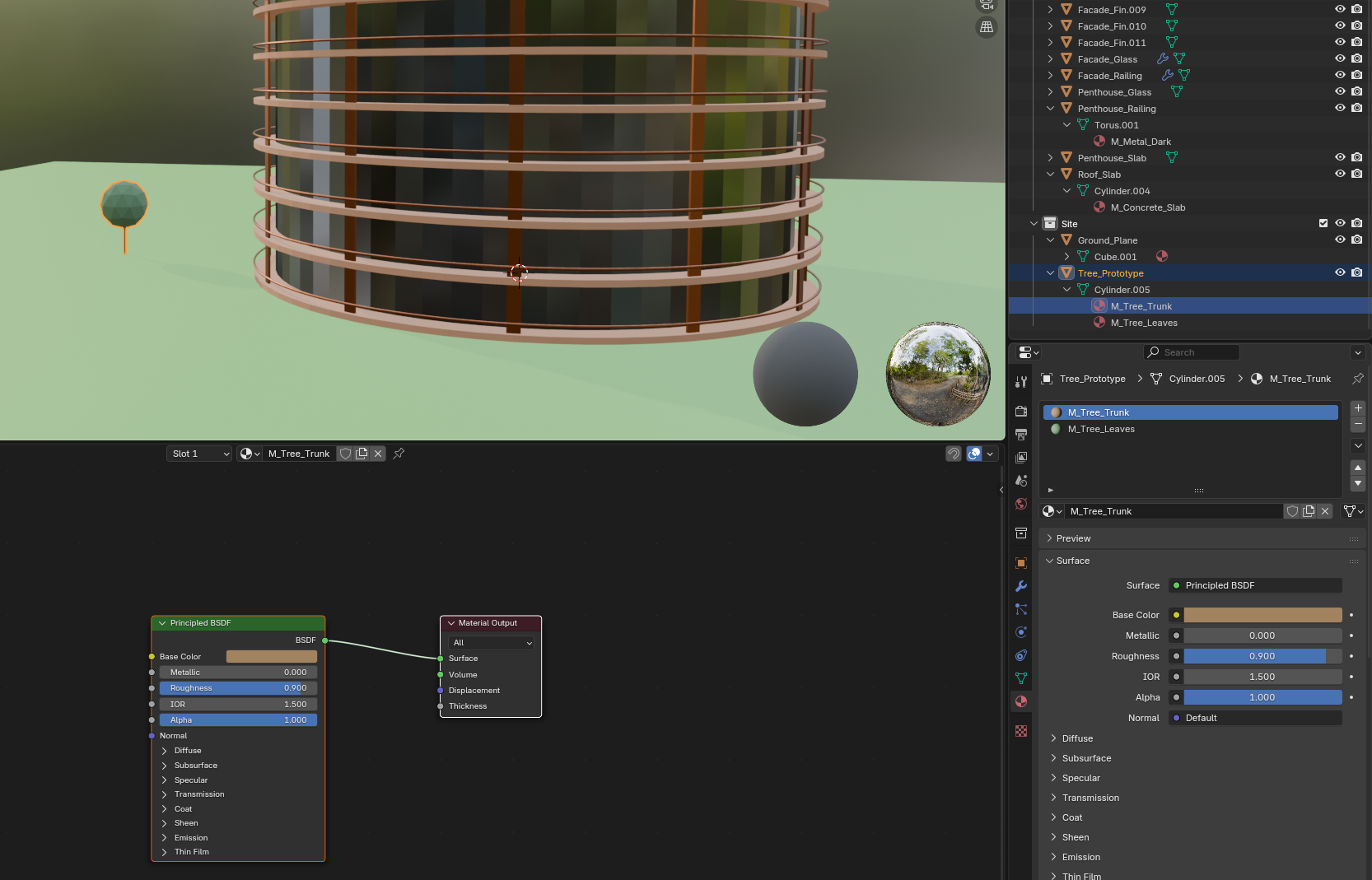

Landscaping

With the building complete, I moved on to landscaping, starting with a tree prototype:

Let's build the tree in the workshop area (X=40, Y=0). Make the 'Site' collection active. Create a cylinder for the trunk with dimensions (0.3, 0.3, 4) at location (40, 0, 2). Name it 'Tree_Trunk'. Create an icosphere for the leaves with a radius of 2.5 at location (40, 0, 5.25). Name it 'Tree_Leaves'

Now, let's create and assign their materials. Create a new material named 'M_Tree_Trunk'. Set its 'Base Color' to a dark brown (#5C3A1E) and 'Roughness' to 0.9. Assign the 'M_Tree_Trunk' material to the 'Tree_Trunk' object. Create a new material named 'M_Tree_Leaves'. Set its 'Base Color' to a forest green (#2A522A) and 'Roughness' to 0.8. Assign the 'M_Tree_Leaves' material to the 'Tree_Leaves' object

The parts are now correctly textured. Let's combine them into the final prototype. Select both the 'Tree_Trunk' and 'Tree_Leaves' objects. Make the 'Tree_Trunk' the active object. Join the two objects into one. Name the final, combined object 'Tree_Prototype'

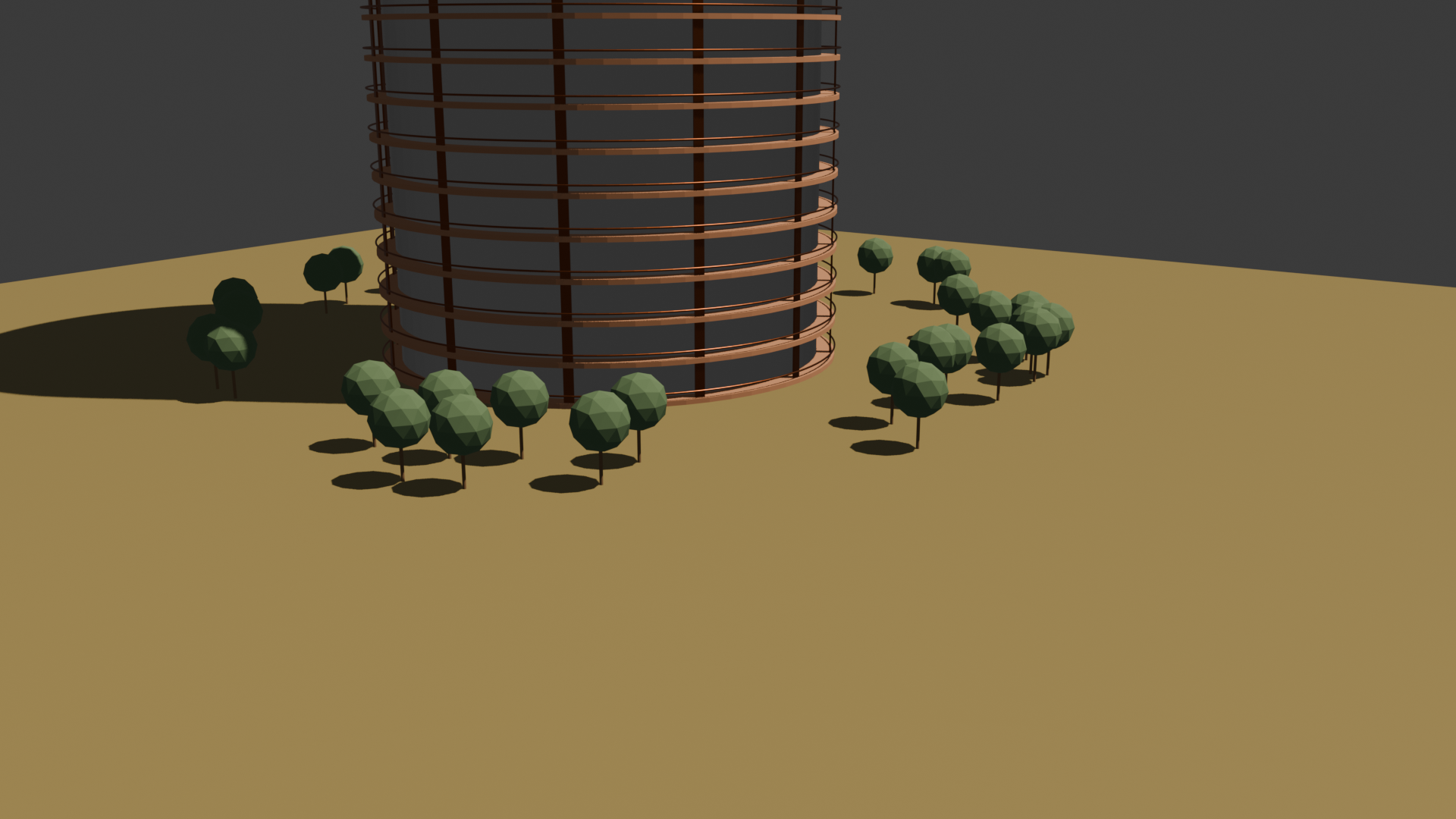
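The prompts above give colours as hex codes. Hex codes are sRGB values, whereas colour inputs set from Python in Blender are generally treated as linear RGB, so a generated script may need a conversion step to match what the colour picker would show. The following sketch applies the standard sRGB-to-linear formula; the function names are hypothetical.

```python
def srgb_to_linear(channel: float) -> float:
    """Convert one sRGB channel in [0, 1] to linear light."""
    if channel <= 0.04045:
        return channel / 12.92
    return ((channel + 0.055) / 1.055) ** 2.4


def hex_to_linear_rgba(hex_color: str, alpha: float = 1.0) -> tuple:
    """Convert '#RRGGBB' to a linear (r, g, b, a) tuple."""
    hex_color = hex_color.lstrip("#")
    if len(hex_color) != 6:
        raise ValueError("expected a 6-digit hex colour such as #5C3A1E")
    r, g, b = (int(hex_color[i:i + 2], 16) / 255 for i in (0, 2, 4))
    return (srgb_to_linear(r), srgb_to_linear(g), srgb_to_linear(b), alpha)


trunk = hex_to_linear_rgba("#5C3A1E")   # dark brown from the prompt
leaves = hex_to_linear_rgba("#2A522A")  # forest green from the prompt
print(trunk)
print(leaves)
```

If the colours in the render look lighter than the hex codes suggest, a missing conversion of this kind is one possible cause.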

Once the prototype was ready, I duplicated it to create a grove of trees around the site:

- Duplicates Tree_Prototype 29 times

- Uses random.uniform() to generate X, Y coordinates

- Ensures each tree's distance from the origin, math.sqrt(x² + y²), is greater than 40m and less than 50m

- Places each tree at the calculated random position
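The placement logic described above amounts to rejection sampling inside a ring. Here is a standalone sketch of that idea, assuming the ring runs from 40m to 50m around the origin; the function name `scatter_in_ring` and the `seed` parameter are hypothetical additions for reproducibility.

```python
import math
import random


def scatter_in_ring(count: int, inner: float, outer: float, seed=None) -> list:
    """Return `count` random (x, y) points with inner < distance from origin < outer."""
    rng = random.Random(seed)
    points = []
    while len(points) < count:
        # Sample in the bounding square, keep only points that fall inside the ring
        x = rng.uniform(-outer, outer)
        y = rng.uniform(-outer, outer)
        if inner < math.hypot(x, y) < outer:
            points.append((x, y))
    return points


tree_positions = scatter_in_ring(count=29, inner=40.0, outer=50.0, seed=7)
print(len(tree_positions), "positions generated")
```

The inner radius keeps trees clear of the tower (slab radius 25), and the outer radius keeps them well inside the 200-unit ground plane.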

Camera and Lighting

To showcase the scene, I added cinematic camera and lighting:

Create cinematic lighting and camera setup. Add a Sun light with warm color and strength 3.0, rotated to (30°, 0°, 120°) for golden hour lighting. Position the camera at (100, -120, 35) with rotation (75°, 0°, 35°) for a dramatic low-angle hero shot of the tower
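The prompt states rotations in degrees, while Blender's `rotation_euler` property is expressed in radians, so any generated script has to convert. A minimal sketch of that conversion, with the hypothetical helper name `euler_from_degrees`:

```python
import math


def euler_from_degrees(x_deg: float, y_deg: float, z_deg: float) -> tuple:
    """Convert an XYZ rotation given in degrees to radians."""
    return (math.radians(x_deg), math.radians(y_deg), math.radians(z_deg))


sun_rotation = euler_from_degrees(30, 0, 120)     # Sun light from the prompt
camera_rotation = euler_from_degrees(75, 0, 35)   # camera from the prompt
print(sun_rotation)
print(camera_rotation)
```

If the camera ends up pointing somewhere unexpected, degrees passed where radians were expected is a common culprit.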

For a softer, more stylized render, I adjusted the lighting setup:

Soften the lighting for a more stylized render. Increase the Sun light's angle/size to 5-10 degrees to create softer shadow edges. Add a second Area light as ambient fill lighting with strength 2.0 positioned opposite the sun. Reduce the sun strength to 2.5 to balance the overall lighting contrast.

Closing Thoughts

This setup bridges n8n, OpenRouter, and Blender MCP, enabling AI-driven workflows to shape and control 3D environments. By combining automation with natural language prompts, even complex modeling tasks become conversational and modular.

While I relied on a bridge service in this iteration, future versions could integrate directly into n8n as a custom node—bringing Blender automation one step closer to a fully no-code, AI-powered workflow.