As part of my journey through the Convolutional Neural Networks course (part of the Deep Learning Specialization), I’ve been implementing foundational concepts such as skip connections in deep ResNet architectures using Keras. One of the labs also introduced transfer learning to create an Alpaca/Not Alpaca classifier using a pre-trained CNN.

To further reinforce what I’ve learned, I decided to apply transfer learning using MobileNetV2 for a binary classification task—Cats vs. Dogs. This post walks through the full process: from data preprocessing to model training, evaluation, and visualization.

Prerequisite Setup

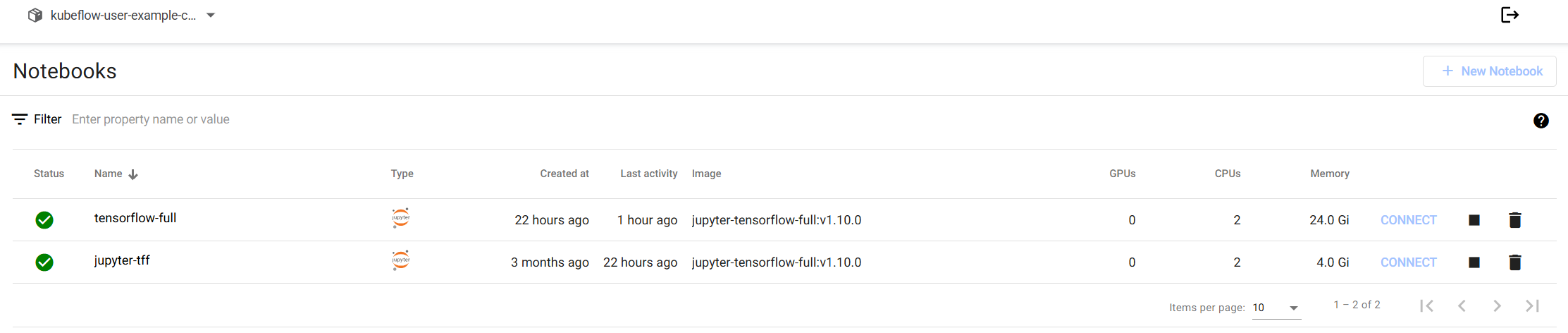

For this experiment, I set up a new tensorflow-full notebook environment in Kubeflow, running in my homelab. The notebook is configured with:

- 2 CPUs

- 24Gi memory

This environment comes pre-installed with TensorFlow and commonly used ML libraries, making it an ideal starting point for deep learning workflows. To load datasets from TensorFlow Datasets (TFDS), simply install:

```bash
pip install tensorflow_datasets
```

By leveraging Kubeflow Notebooks, I can easily scale experiments, persist environments, and integrate with other ML components (like Pipelines or Katib) when needed—all within a self-hosted setup.
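Because the data pipeline later caches every resized image in memory as float32, a quick back-of-the-envelope estimate shows how much of the 24Gi the cache needs. This sketch assumes the split sizes reported in the sample output at the end of this post (18,610 training and 4,652 validation images) and 4 bytes per float32 value. It ignores TensorFlow's own overhead.

```python
# Rough RAM estimate for caching resized float32 images in memory.
# Assumption: split sizes taken from the sample output (18,610 train / 4,652 val).
HEIGHT, WIDTH, CHANNELS = 224, 224, 3
BYTES_PER_FLOAT32 = 4


def cache_size_gib(num_images: int) -> float:
    """Approximate size of num_images cached 224x224x3 float32 tensors, in GiB."""
    bytes_per_image = HEIGHT * WIDTH * CHANNELS * BYTES_PER_FLOAT32  # 602,112 bytes
    return num_images * bytes_per_image / 2**30


train_gib = cache_size_gib(18_610)
val_gib = cache_size_gib(4_652)
print(f"train ~ {train_gib:.1f} GiB, val ~ {val_gib:.1f} GiB, total ~ {train_gib + val_gib:.1f} GiB")
```

That works out to roughly 13 GiB for both caches, which fits in 24Gi but would not fit in a much smaller notebook.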

Introduction

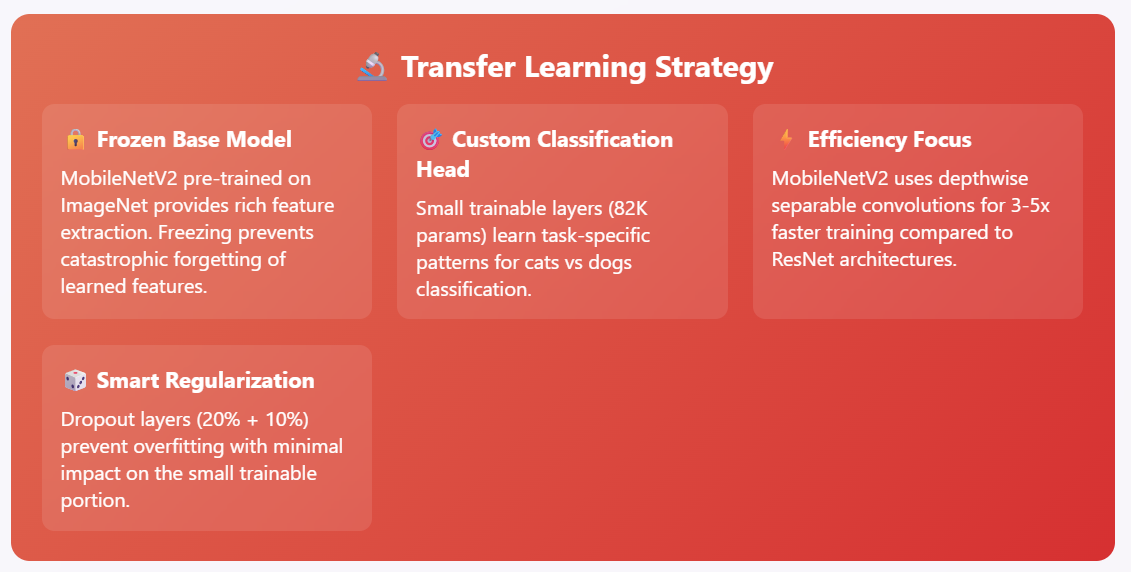

Keras Applications offers a collection of pre-trained models, such as MobileNetV2 and ResNet50, trained on the ImageNet dataset. These are highly useful for transfer learning, where parts of a model trained on one task are reused for a new but related task.

According to the Keras Transfer Learning Guide, the typical workflow includes:

- Load a pre-trained model.

- Freeze its layers to preserve learned features.

- Add new trainable layers tailored for the new task.

- Train only the new layers with your dataset.

In this example, I’ll classify images as either cats or dogs using this approach with MobileNetV2.
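The four steps above can be illustrated without TensorFlow. This toy NumPy sketch is not how Keras does it: a fixed random projection stands in for the pre-trained MobileNetV2 base, synthetic Gaussian blobs stand in for cat and dog images, and a logistic-regression head plays the role of the new Dense(1, sigmoid) layer. Only the head's parameters are updated, which is what "freezing" the base means.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy stand-in for images: two Gaussian blobs, label 0 ("cat") and 1 ("dog")
n, d_in, d_feat = 200, 20, 32
X = np.vstack([rng.normal(-1.0, 1.0, (n // 2, d_in)),
               rng.normal(1.0, 1.0, (n // 2, d_in))])
y = np.concatenate([np.zeros(n // 2), np.ones(n // 2)])

# Step 1: "load a pre-trained model" (here just a fixed random projection + ReLU)
W_base = rng.normal(0.0, 1.0 / np.sqrt(d_in), (d_in, d_feat))
W_base_initial = W_base.copy()


def base(x):
    return np.maximum(x @ W_base, 0.0)


# Step 2: freeze it. Its weights are never updated, so features can be computed once
features = base(X)

# Step 3: add a new trainable head (logistic regression, like Dense(1, sigmoid))
w = np.zeros(d_feat)
b = 0.0


def head(f, w, b):
    return 1.0 / (1.0 + np.exp(-(f @ w + b)))


# Step 4: train only the head with gradient descent on binary cross-entropy
lr = 0.1
for _ in range(1000):
    p = head(features, w, b)
    err = p - y  # gradient of binary cross-entropy w.r.t. the logit
    w = w - lr * features.T @ err / n
    b = b - lr * err.mean()

accuracy = np.mean((head(features, w, b) > 0.5) == y)
print(f"training accuracy of the head: {accuracy:.2f}")
```

The base weights are identical before and after training; all learning happens in the 33 head parameters.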

Setup & Imports

```python
import tensorflow as tf
import tensorflow_datasets as tfds
from tensorflow.keras.applications import MobileNetV2
from tensorflow.keras.layers import Dense, GlobalAveragePooling2D, Dropout
from tensorflow.keras.models import Model
from tensorflow.keras.optimizers import Adam
from tensorflow.keras.callbacks import ModelCheckpoint, EarlyStopping, ReduceLROnPlateau
import matplotlib.pyplot as plt
import numpy as np

# For reproducibility
tf.random.set_seed(42)
np.random.seed(42)
```

Data Loading & Preprocessing

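I use the cats_vs_dogs dataset from TensorFlow Datasets (TFDS). Since it ships with a single train split, I slice it into 80% training and 20% validation. Images are resized to 224x224 and scaled to the [0, 1] range. MobileNetV2's ImageNet weights were trained on inputs scaled to [-1, 1] (the range tf.keras.applications.mobilenet_v2.preprocess_input produces), so switching to that function may help; this walkthrough keeps the simpler [0, 1] scaling. To improve generalization, I apply basic augmentations to the training data: random horizontal flips, brightness and contrast adjustments, and random 90-degree rotations.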
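To see why clipping back to [0, 1] can matter after brightness and contrast jitter, here is a NumPy re-implementation of the per-pixel formulas the tf.image documentation gives for adjust_brightness (add a delta) and adjust_contrast ((x - channel mean) * factor + channel mean). It applies the extreme settings used in augment_data (a delta of ±0.2 and a contrast factor of 1.2) to a tiny 2x2 test image. It is an illustration of the math, not TensorFlow's code.

```python
import numpy as np


def adjust_brightness(img, delta):
    # Same formula as tf.image.adjust_brightness: add delta to every value
    return img + delta


def adjust_contrast(img, factor):
    # Same formula as tf.image.adjust_contrast: scale each channel around its mean
    mean = img.mean(axis=(0, 1), keepdims=True)
    return (img - mean) * factor + mean


# Tiny 2x2 RGB test image with values already in [0, 1]
channel = np.array([[0.0, 0.25], [0.75, 1.0]])
img = np.stack([channel] * 3, axis=-1)  # shape (2, 2, 3)

brighter = adjust_contrast(adjust_brightness(img, +0.2), 1.2)
darker = adjust_contrast(adjust_brightness(img, -0.2), 1.2)
print(brighter.max(), darker.min())  # both fall outside [0, 1]

# Clipping restores the range the rest of the pipeline expects
brighter_clipped = np.clip(brighter, 0.0, 1.0)
darker_clipped = np.clip(darker, 0.0, 1.0)
```

The brightest pixel ends up at 1.3 and the darkest at -0.3, so without clipping some training images fall outside the range the validation images use.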

```python
def preprocess_data(image, label):
    # Resize to MobileNetV2's input size and scale pixels to [0, 1]
    image = tf.image.resize(image, [224, 224])
    image = tf.cast(image, tf.float32) / 255.0
    return image, label


def augment_data(image, label):
    # Random flips, brightness/contrast jitter and 90-degree rotations
    image = tf.image.random_flip_left_right(image)
    image = tf.image.random_brightness(image, max_delta=0.2)
    image = tf.image.random_contrast(image, lower=0.8, upper=1.2)
    image = tf.image.rot90(image, k=tf.random.uniform([], 0, 4, dtype=tf.int32))
    # Brightness/contrast jitter can push values outside [0, 1]; clip them back
    image = tf.clip_by_value(image, 0.0, 1.0)
    return image, label


def load_cats_vs_dogs_dataset(batch_size=32):
    print("Downloading cats_vs_dogs dataset...")
    (ds_train, ds_test), ds_info = tfds.load(
        'cats_vs_dogs',
        split=['train[:80%]', 'train[80%:]'],
        as_supervised=True,
        with_info=True,
    )

    print(f"Training samples: {ds_info.splits['train'].num_examples * 0.8:.0f}")
    print(f"Validation samples: {ds_info.splits['train'].num_examples * 0.2:.0f}")

    # Cache the deterministic preprocessing, then augment *after* the cache so
    # each epoch sees freshly randomized images (caching after augment_data would
    # freeze one set of augmentations for the whole run)
    ds_train = (ds_train.map(preprocess_data)
                .cache()
                .shuffle(1000)
                .map(augment_data)
                .batch(batch_size)
                .prefetch(tf.data.AUTOTUNE))
    ds_test = ds_test.map(preprocess_data).cache().batch(batch_size).prefetch(tf.data.AUTOTUNE)
    return ds_train, ds_test, ds_info
```

Model Architecture

I use MobileNetV2 as the base model, excluding its top classification layer and freezing its weights. On top of it, I add:

- Global average pooling

- Dropout (rate 0.2) for regularization

- A Dense layer with 64 units and ReLU activation

- Dropout (rate 0.1)

- A final single-unit Dense layer with sigmoid activation for the binary output
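A quick shape and parameter count shows how small the trainable head is. The sketch assumes the standard MobileNetV2 (alpha=1.0) output for a 224x224 input with include_top=False, a 7x7x1280 feature map; random numbers stand in for real features. Dropout layers add no parameters.

```python
import numpy as np

# Assumption: MobileNetV2 (include_top=False) turns a 224x224x3 image into a 7x7x1280 map
feature_map = np.random.default_rng(0).normal(size=(1, 7, 7, 1280))  # (batch, H, W, C)

# GlobalAveragePooling2D: average over the two spatial axes
pooled = feature_map.mean(axis=(1, 2))  # (1, 1280)


def dense_params(n_in, n_out):
    # Weight matrix plus bias vector
    return n_in * n_out + n_out


# Dense(64) on 1280 pooled features, then Dense(1); the Dropout layers have no weights
head_params = dense_params(1280, 64) + dense_params(64, 1)
print(pooled.shape, head_params)
```

If the 1280-channel assumption holds, 82,049 is the trainable-parameter count to expect from model.summary() while the base is frozen.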

```python
def create_cats_dogs_classifier(input_shape=(224, 224, 3)):
    # ImageNet-pretrained MobileNetV2 without its 1000-class classification head
    base_model = MobileNetV2(weights='imagenet', include_top=False, input_shape=input_shape)
    base_model.trainable = False  # freeze the convolutional base

    inputs = tf.keras.Input(shape=input_shape)
    # training=False keeps the base's BatchNormalization layers in inference mode
    x = base_model(inputs, training=False)
    x = GlobalAveragePooling2D()(x)
    x = Dropout(0.2)(x)
    x = Dense(64, activation='relu')(x)
    x = Dropout(0.1)(x)
    outputs = Dense(1, activation='sigmoid')(x)  # probability of "dog"

    model = Model(inputs, outputs)
    return model, base_model
```

Training Setup

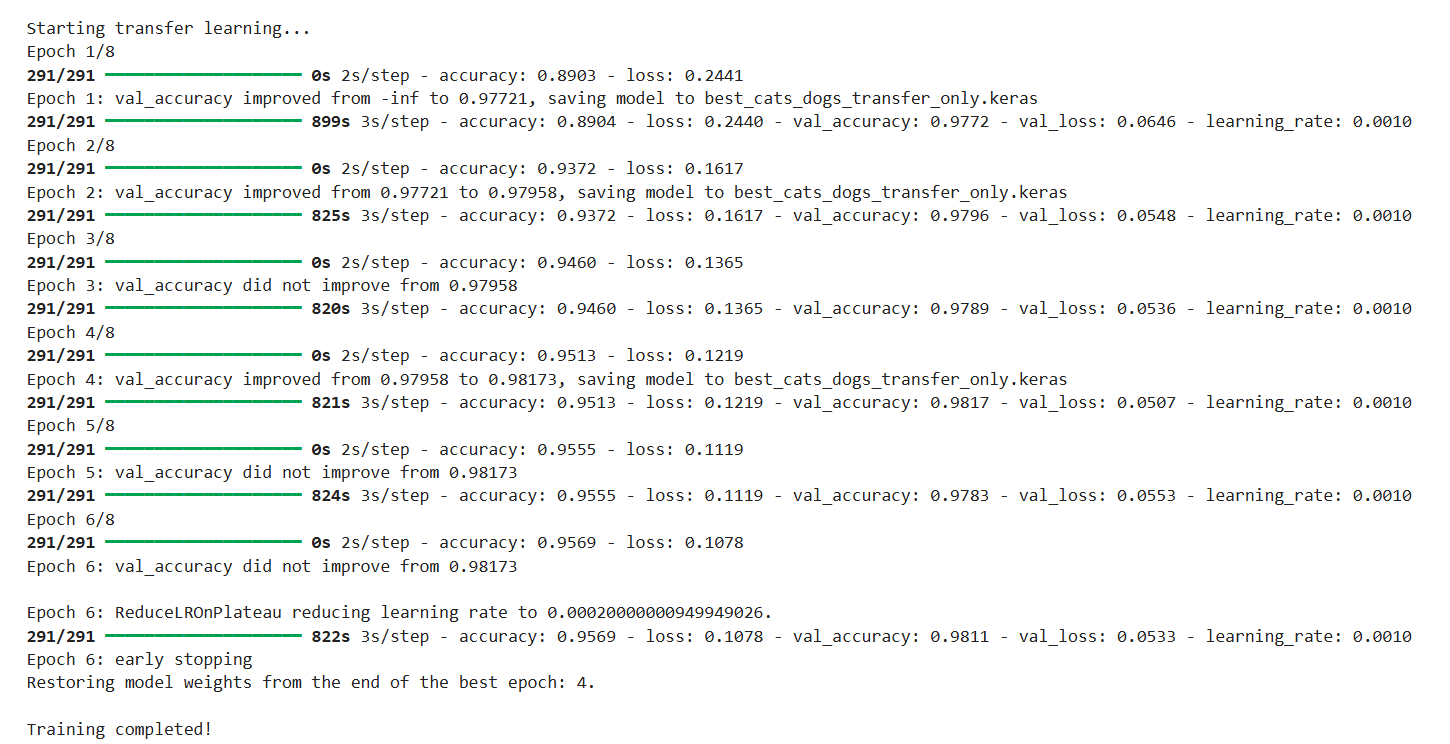

I train only the top classification layers, leaving the pre-trained base frozen. I use binary_crossentropy as the loss function and include callbacks to manage training:

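- ModelCheckpoint to save the best-performing model (highest validation accuracy).

- EarlyStopping to halt training when validation accuracy has not improved for patience epochs, restoring the best weights.

- ReduceLROnPlateau to multiply the learning rate by 0.2 when validation loss has not improved for 2 epochs.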
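The interplay of the two patience-based callbacks is easier to see on a concrete run. This plain-Python sketch is a simplified re-implementation, not Keras's code: it ignores min_delta (which defaults to 1e-4 for ReduceLROnPlateau), cooldown and baseline, and the validation curves are made-up numbers, not results from this experiment. It uses the settings from the training code: early stopping on validation accuracy with patience 2, and a 0.2 learning-rate factor after 2 epochs without a validation-loss improvement.

```python
def simulate_callbacks(val_acc, val_loss, lr=1e-3, es_patience=2,
                       lr_patience=2, factor=0.2, min_lr=1e-7):
    """Simplified EarlyStopping(val_accuracy) + ReduceLROnPlateau(val_loss).

    Returns (last_epoch_run, best_epoch, lr_used_per_epoch); epochs are 0-indexed.
    """
    best_acc, best_epoch, es_wait = float("-inf"), 0, 0
    best_loss, lr_wait = float("inf"), 0
    lrs = []
    for epoch, (acc, loss) in enumerate(zip(val_acc, val_loss)):
        lrs.append(lr)  # learning rate used during this epoch

        # ReduceLROnPlateau: shrink the LR after lr_patience epochs without a val_loss improvement
        if loss < best_loss:
            best_loss, lr_wait = loss, 0
        else:
            lr_wait += 1
            if lr_wait >= lr_patience:
                lr, lr_wait = max(lr * factor, min_lr), 0

        # EarlyStopping: stop after es_patience epochs without a val_accuracy improvement
        if acc > best_acc:
            best_acc, best_epoch, es_wait = acc, epoch, 0
        else:
            es_wait += 1
            if es_wait >= es_patience:
                # restore_best_weights=True would reload the weights from best_epoch
                return epoch, best_epoch, lrs
    return len(val_acc) - 1, best_epoch, lrs


# Made-up validation curves, for illustration only
val_acc = [0.95, 0.96, 0.97, 0.975, 0.974, 0.973, 0.976, 0.977]
val_loss = [0.12, 0.10, 0.101, 0.102, 0.103, 0.104, 0.100, 0.099]
last_epoch, best_epoch, lrs = simulate_callbacks(val_acc, val_loss)
print(last_epoch, best_epoch, lrs)
```

With these numbers the learning rate drops to 2e-4 after epoch index 3, training stops after epoch index 5, and the weights come from epoch index 3. Epochs 6 and 7 never run even though accuracy would have improved again, which is the trade-off of a short patience.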

```python
def train_cats_dogs_classifier_transfer_only(epochs=8, batch_size=64, patience=2):
    ds_train, ds_test, ds_info = load_cats_vs_dogs_dataset(batch_size)
    model, base_model = create_cats_dogs_classifier()
    base_model.trainable = False  # already frozen in create_cats_dogs_classifier; kept for clarity

    model.compile(optimizer=Adam(learning_rate=0.001), loss='binary_crossentropy', metrics=['accuracy'])
    model.summary()

    callbacks = [
        # Keep the checkpoint with the highest validation accuracy
        ModelCheckpoint('best_cats_dogs_transfer_only.keras', monitor='val_accuracy', save_best_only=True, mode='max', verbose=1),
        # Stop after `patience` epochs without a val_accuracy improvement
        EarlyStopping(monitor='val_accuracy', patience=patience, restore_best_weights=True, verbose=1),
        # Multiply the learning rate by 0.2 after 2 epochs without a val_loss improvement
        ReduceLROnPlateau(monitor='val_loss', factor=0.2, patience=2, min_lr=1e-7, verbose=1)
    ]

    history = model.fit(ds_train, epochs=epochs, validation_data=ds_test, callbacks=callbacks, verbose=1)

    plot_training_history(history)
    visualize_predictions(model, ds_test)

    # Note: ds_test is the same 20% split used for validation and early stopping,
    # so this is a validation score rather than a score on unseen data
    loss, accuracy = model.evaluate(ds_test, verbose=0)
    print(f"Test Accuracy: {accuracy:.4f}")
    print(f"Test Loss: {loss:.4f}")
    return model, history
```

Visualizing Results

I visualize both the training process and prediction results to evaluate the model performance.
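Alongside the plots, it can help to pull the best epoch out of history.history, the dict Keras fills with one list entry per epoch for each logged metric. The helper below is a hypothetical addition, shown on a made-up dict that uses the metric keys plotted in plot_training_history so it runs without training.

```python
def summarize_history(history_dict, monitor="val_accuracy"):
    """Return (best_epoch, metrics_at_best_epoch) for a Keras-style history dict.

    Hypothetical helper: pass history.history from model.fit. Epochs are 0-indexed.
    """
    values = history_dict[monitor]
    best_epoch = max(range(len(values)), key=values.__getitem__)
    return best_epoch, {name: vals[best_epoch] for name, vals in history_dict.items()}


# Made-up numbers, for illustration only
fake_history = {
    "accuracy": [0.93, 0.96, 0.97],
    "loss": [0.18, 0.10, 0.08],
    "val_accuracy": [0.96, 0.975, 0.972],
    "val_loss": [0.10, 0.07, 0.075],
}
best_epoch, best_metrics = summarize_history(fake_history)
print(f"best epoch: {best_epoch + 1}", best_metrics)
```

With restore_best_weights=True, the metrics at this epoch are the ones the returned model corresponds to.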

Training History

def plot_training_history(history):

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(12, 4))

# Plot accuracy

ax1.plot(history.history['accuracy'], label='Training Accuracy')

ax1.plot(history.history['val_accuracy'], label='Validation Accuracy')

ax1.set_title('Model Accuracy')

ax1.set_xlabel('Epoch')

ax1.set_ylabel('Accuracy')

ax1.legend()

ax1.grid(True)

# Plot loss

ax2.plot(history.history['loss'], label='Training Loss')

ax2.plot(history.history['val_loss'], label='Validation Loss')

ax2.set_title('Model Loss')

ax2.set_xlabel('Epoch')

ax2.set_ylabel('Loss')

ax2.legend()

ax2.grid(True)

plt.tight_layout()

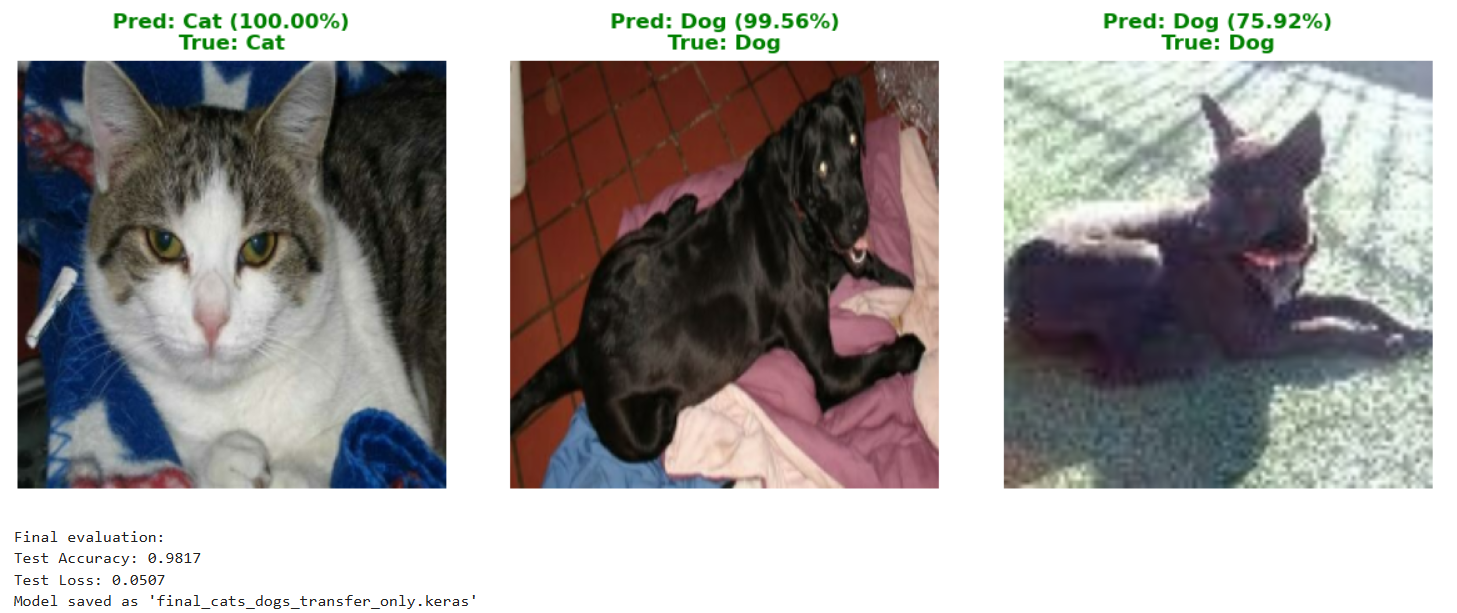

plt.show()Prediction Samples
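The title logic in visualize_predictions treats the sigmoid output p as the probability of "Dog" (TFDS labels cats as 0 and dogs as 1) and reports max(p, 1 - p) as the confidence. A vectorized NumPy version of that mapping, run on made-up outputs shaped like the (batch, 1) array model.predict returns, looks like this:

```python
import numpy as np

CLASS_NAMES = np.array(["Cat", "Dog"])  # TFDS cats_vs_dogs: 0 = cat, 1 = dog


def decode_predictions(probs, threshold=0.5):
    """Map (batch, 1) sigmoid outputs to class names, confidences and 0/1 labels."""
    p = np.asarray(probs, dtype=float).reshape(-1)
    pred = (p > threshold).astype(int)  # p == 0.5 counts as Cat, as in visualize_predictions
    confidence = np.where(pred == 1, p, 1.0 - p)
    return CLASS_NAMES[pred], confidence, pred


# Made-up sigmoid outputs and labels, for illustration only
probs = np.array([[0.97], [0.12], [0.50], [0.61]])
labels = np.array([1, 0, 1, 0])
names, confidence, pred = decode_predictions(probs)
accuracy = np.mean(pred == labels)
print(names, confidence, accuracy)
```

Note that a 0.50 output is reported as a 50% "Cat", so confidence near 50% flags images the model is unsure about.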

```python
def visualize_predictions(model, ds_test, num_samples=9):
    num_samples = min(num_samples, 9)  # the 3x3 grid below has 9 slots

    # Get a single batch of validation data
    for images, labels in ds_test.take(1):
        predictions = model.predict(images[:num_samples])

        fig, axes = plt.subplots(3, 3, figsize=(12, 12))
        axes = axes.ravel()

        for i in range(num_samples):
            # Display image (pixels are already in [0, 1])
            axes[i].imshow(images[i])
            axes[i].axis('off')

            # Sigmoid output = probability of "Dog" (TFDS labels: 0 = cat, 1 = dog)
            prob_dog = float(predictions[i][0])
            pred_class = "Dog" if prob_dog > 0.5 else "Cat"
            true_class = "Dog" if int(labels[i]) == 1 else "Cat"
            confidence = prob_dog if prob_dog > 0.5 else 1 - prob_dog

            # Set title with color coding: green = correct, red = wrong
            color = 'green' if pred_class == true_class else 'red'
            axes[i].set_title(f'Pred: {pred_class} ({confidence:.2%})\nTrue: {true_class}',
                              color=color, fontweight='bold')

        plt.tight_layout()
        plt.show()
```

Main Execution Block

```python
# Main execution
if __name__ == "__main__":
    print("Starting Cats vs Dogs Classification with MobileNetV2")
    print("This will download ~800MB of data on first run")
    print("Optimized for transfer learning only - much faster training!")

    model, history = train_cats_dogs_classifier_transfer_only(
        epochs=8,
        batch_size=64,
        patience=2
    )

    model.save('final_cats_dogs_transfer_only.keras')
    print("Model saved as 'final_cats_dogs_transfer_only.keras'")
```

Sample output:

```text
Starting Cats vs Dogs Classification with MobileNetV2
This will download ~800MB of data on first run
Optimized for transfer learning only - much faster training!
Loading and preprocessing data...
Downloading cats_vs_dogs dataset...
Training samples: 18610
Validation samples: 4652
Creating model...
Model created successfully!
```

Predictions

Here are some of the visualized predictions after training:

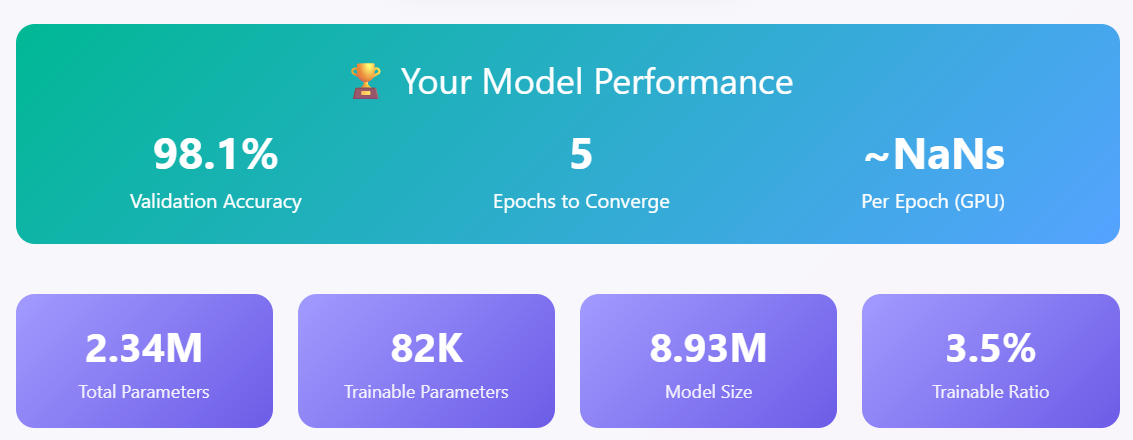

Final Performance

Let me know if you’d like to fine-tune the base model as a next step, or try with a different architecture like EfficientNet or ResNet50!