In this post, we’ll deploy the HDB Price Predictor model to Oracle Cloud’s Kubernetes Engine (OKE) using KServe. The setup includes provisioning an OKE cluster, configuring Istio for networking, and serving the model using KServe.

Prerequisites

First, install the required tools:

choco install kubernetes-helm

choco install wget

Step 1: Create Oracle Kubernetes Engine (OKE)

Start by creating a new compartment.

- Navigate to Identity & Security > Compartments and click Create Compartment.

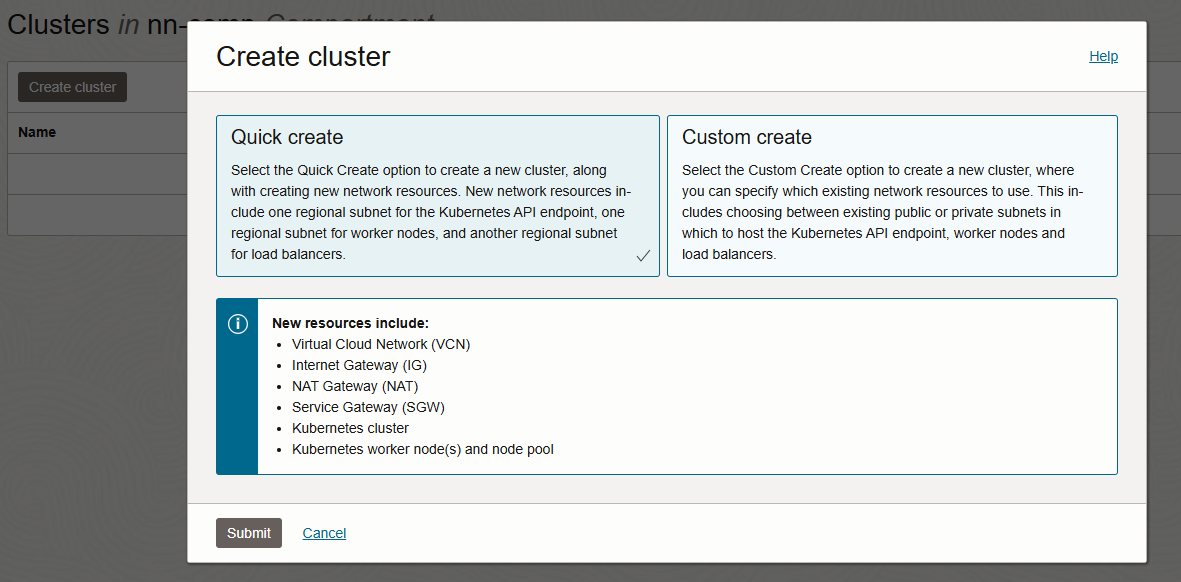

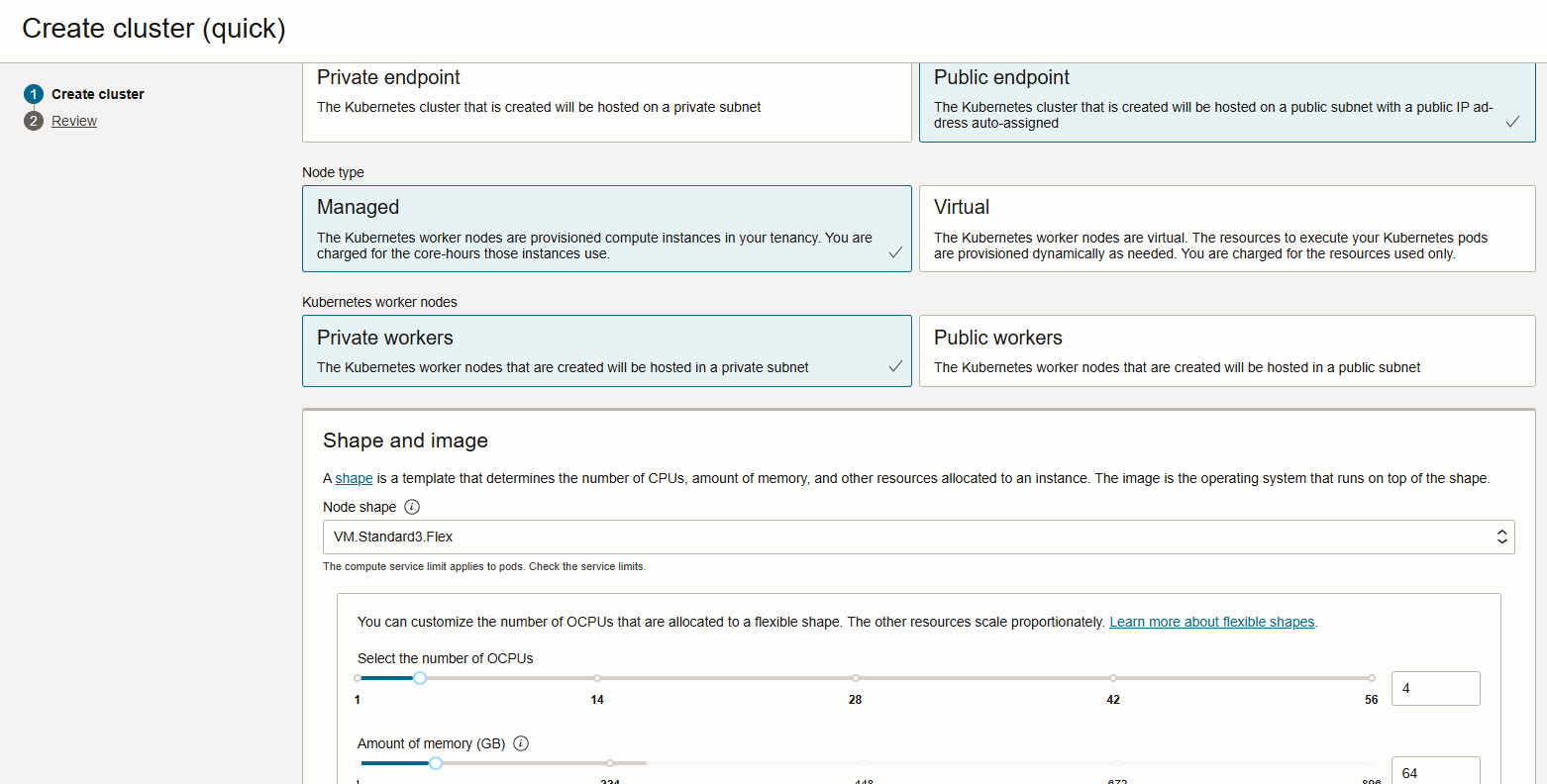

- Next, go to Developer Services > Kubernetes Clusters (OKE) and click Create Cluster.

For this demo, I created a 1-node basic cluster and waited until the node status changed to Ready and Active.

Step 2: OCI Command Line Interface (CLI)

Follow the official OCI CLI guide to install the CLI. I extracted oci-cli-3.55.0.zip to F:\oci-cli. The API key setup is covered in the Oracle documentation, so I’ll skip it here.

Set up the environment:

F:

python -m venv oracle-cli

oracle-cli\Scripts\activate

cd \oci-cli

pip install oci_cli-3.55.0-py3-none-any.whl

Configure kubectl to access the cluster:

oci ce cluster create-kubeconfig --cluster-id ocid1.cluster.oc1.ap-singapore-2.aaaaaaaaayk5sgnphyr7rxdnwpdwfji25zcoodj52zeqlk2r3cpfpp64csya --file %USERPROFILE%/.kube/config --region ap-singapore-2 --token-version 2.0.0 --kube-endpoint PUBLIC_ENDPOINT

set KUBECONFIG=%USERPROFILE%/.kube/config

kubectl get no

# (oracle-cli) F:\oci-cli>kubectl get no
# NAME          STATUS   ROLES   AGE   VERSION
# 10.0.10.184   Ready    node    40s   v1.33.0

Step 3: Install KServe and Dependencies

3.1 Install Cert Manager

Follow the KServe 0.15 setup guide:

kubectl apply -f https://github.com/cert-manager/cert-manager/releases/download/v1.17.2/cert-manager.yaml

kubectl get deployments -n cert-manager

3.2 Install Istio via Helm

Referencing Istio’s Helm installation guide:

helm repo add istio https://istio-release.storage.googleapis.com/charts

helm repo update

helm install istio-base istio/base -n istio-system --set defaultRevision=default --create-namespace

helm install istiod istio/istiod -n istio-system --wait

helm ls -n istio-system

helm install istio-ingressgateway istio/gateway -n istio-system --wait

helm status istio-ingressgateway -n istio-system

Apply the IngressClass:

# istio-ingressclass.yaml
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: istio
spec:
  controller: istio.io/ingress-controller

kubectl apply -f istio-ingressclass.yaml

3.3 Install KServe

helm install kserve-crd oci://ghcr.io/kserve/charts/kserve-crd --version v0.15.0 -n kserve --create-namespace

helm install kserve oci://ghcr.io/kserve/charts/kserve --version v0.15.0 --set kserve.controller.deploymentMode=RawDeployment --set kserve.controller.gateway.ingressGateway.className=istio -n kserve --create-namespace

Step 4: Deploy Your First InferenceService

We’ll use the KServe getting started example:

# sklearn-iris.yaml
apiVersion: "serving.kserve.io/v1beta1"
kind: "InferenceService"
metadata:
  name: "sklearn-iris"
  namespace: kserve-test
spec:
  predictor:
    model:
      modelFormat:
        name: sklearn
      storageUri: "gs://kfserving-examples/models/sklearn/1.0/model"

kubectl create namespace kserve-test

kubectl apply -n kserve-test -f sklearn-iris.yaml

kubectl get inferenceservices sklearn-iris -n kserve-test

Check the external IP of the Istio ingress gateway:

kubectl get svc istio-ingressgateway -n istio-system

Then run a prediction request, using the iris-input.json sample from the same getting-started example:

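curl -v -H "Host: sklearn-iris-kserve-test.example.com" -H "Content-Type: application/json" "http://217.142.185.27:80/v1/models/sklearn-iris:predict" -d @iris-input.json

To automate this, extract the required values (the commands below use bash-style quoting and cut, so run them in a bash-compatible shell such as Git Bash or WSL):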

# INGRESS_HOST

kubectl -n istio-system get service istio-ingressgateway -o jsonpath='{.status.loadBalancer.ingress[0].ip}'

# INGRESS_PORT

kubectl -n istio-system get service istio-ingressgateway -o jsonpath='{.spec.ports[?(@.name=="http2")].port}'

# SERVICE_HOSTNAME

kubectl get inferenceservice sklearn-iris -n kserve-test -o jsonpath='{.status.url}' | cut -d "/" -f 3

Step 5: Deploy HDB Price Predictor

With the model files downloaded from my homelab MinIO, as shared in my previous post, we now upload price-predictor-model.bst to an Oracle Cloud Object Storage bucket. Once uploaded, define an InferenceService to deploy the XGBoost model:

# price-predictor-xgboost.yaml
apiVersion: "serving.kserve.io/v1beta1"
kind: "InferenceService"
metadata:
  name: "hdb-resale-xgb"
  namespace: kserve-test
spec:
  predictor:
    model:
      modelFormat:
        name: xgboost
      runtime: "kserve-xgbserver"
      protocolVersion: v2
      storageUri: "https://objectstorage...oraclecloud.com/.../price-predictor-model.bst"

Apply the manifest and check the deployment status:

kubectl apply -f price-predictor-xgboost.yaml

kubectl get inferenceservices hdb-resale-xgb -n kserve-test

Save the following input JSON as inference-input.json for the prediction request:

{"inputs":[

{"name":"input-0",

"shape":[2,59],

"datatype":"FP32",

"data":[

0.43119266629219055,0.6242774724960327,0.1875,0.125,0.6363636255264282,0.0,0.0,0.0,0.0,1.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,1.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,1.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,

0.2844036817550659,0.8858381509780884,0.125,0.5,0.8181818127632141,0.0,0.0,0.0,1.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,1.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,1.0,0.0,0.0,0.0,0.0,0.0

]}

]}

To send the inference request, first retrieve the service hostname:

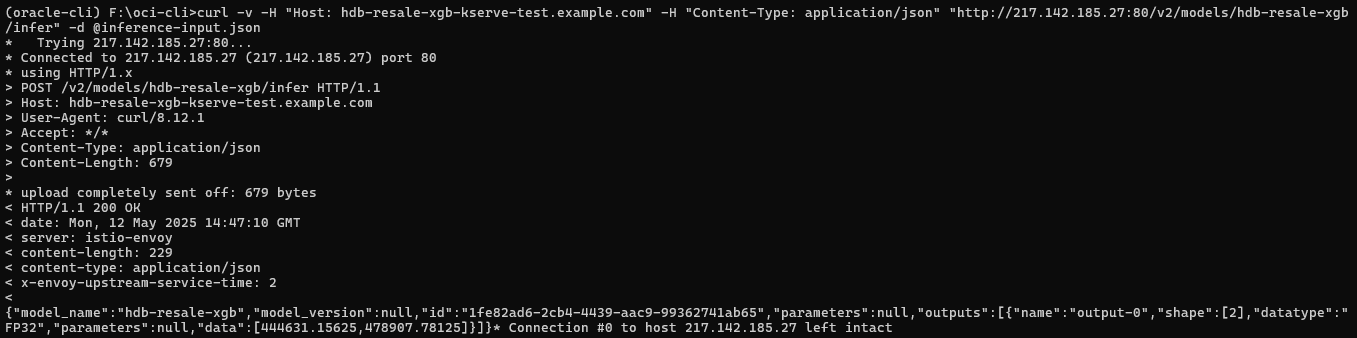

kubectl get inferenceservice hdb-resale-xgb -n kserve-test -o jsonpath='{.status.url}' | cut -d "/" -f 3

Then send the prediction using curl:

curl -v -H "Host: hdb-resale-xgb-kserve-test.example.com" -H "Content-Type: application/json" "http://217.142.185.27:80/v2/models/hdb-resale-xgb/infer" -d @inference-input.json
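The inference-input.json file passed to curl above follows the V2 (Open Inference Protocol) request layout: a tensor name, a shape, a datatype, and the values as one flat list. As a minimal sketch, the helper below builds that payload from a list of feature rows. The function name `build_v2_payload` and the two short example rows are hypothetical and only illustrate the shape and flattening; the model in this post expects 59 features per row.

```python
import json


def build_v2_payload(rows, name="input-0", datatype="FP32"):
    """Build a V2 inference request body from equally sized feature rows."""
    if not rows:
        raise ValueError("at least one row is required")
    width = len(rows[0])
    if any(len(row) != width for row in rows):
        raise ValueError("all rows must have the same number of features")
    # The V2 layout carries the tensor shape separately and the values as one
    # flat, row-major list, matching the JSON shown earlier.
    flat = [float(value) for row in rows for value in row]
    return {
        "inputs": [
            {
                "name": name,
                "shape": [len(rows), width],
                "datatype": datatype,
                "data": flat,
            }
        ]
    }


# Hypothetical 2 x 3 example; the HDB model in this post uses shape [2, 59].
example_rows = [[0.43, 0.62, 0.19], [0.28, 0.89, 0.13]]
payload = build_v2_payload(example_rows)
print(json.dumps(payload))
```

Writing the output of `json.dumps(payload)` to a file gives something that can be passed to curl with `-d @<file>`, in the same way as inference-input.json.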

Step 6: Connect Streamlit App to KServe Inference Endpoint

Instead of deploying the frontend app in-cluster, the HDB Resale Price Predictor is hosted on Streamlit Community Cloud. It interacts with the XGBoost model served on Oracle Kubernetes Engine (OKE) via KServe and Istio.

The app sends prediction requests to the public inference endpoint exposed through the Istio ingress gateway. Each request sets the Host header to the InferenceService hostname so that Istio routes it to the right service, as shown below:

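import os
import requests

# Endpoint and Host header are configurable through environment variables.
KSERVE_URL = os.environ.get("KSERVE_URL", "http://<public-ip>:80/v2/models/hdb-resale-xgb/infer")
KSERVE_HOST = os.environ.get("KSERVE_HOST", "hdb-resale-xgb-kserve-test.example.com")

headers = {"Content-Type": "application/json"}
if KSERVE_HOST:
    headers["Host"] = KSERVE_HOST  # lets the Istio gateway route to the InferenceService

# payload is the V2 request body, with the same structure as inference-input.json
response = requests.post(KSERVE_URL, headers=headers, json=payload, timeout=30)

You can explore the complete Streamlit source code on GitHub: https://github.com/seehiong/hdb-price-predictor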
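As a follow-up to the request code above: in the V2 protocol, a successful response carries its predictions under `outputs`, each entry with a `shape` and a flat `data` list. The sketch below shows one way that body might be unpacked. `extract_predictions` and the sample response values are hypothetical, not taken from the app's source or from a real model run.

```python
def extract_predictions(body):
    """Return the flat prediction list from a V2 inference response body."""
    outputs = body.get("outputs") or []
    if not outputs:
        raise ValueError("response contains no outputs")
    data = outputs[0].get("data")
    if data is None:
        raise ValueError("first output has no data field")
    return [float(value) for value in data]


# Made-up response in the V2 layout, for illustration only.
sample_body = {
    "model_name": "hdb-resale-xgb",
    "outputs": [
        {"name": "output-0", "shape": [2], "datatype": "FP32", "data": [1.0, 2.5]}
    ],
}
predictions = extract_predictions(sample_body)
print(predictions)
```

With the request code above, this would be called as `extract_predictions(response.json())`, ideally after `response.raise_for_status()` so that HTTP errors surface before parsing.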