Building on my exploration of text generation with NVIDIA Jetson Orin NX , this post delves into the audio generation capabilities of the Jetson platform.

Transcribing Audio with Whisper

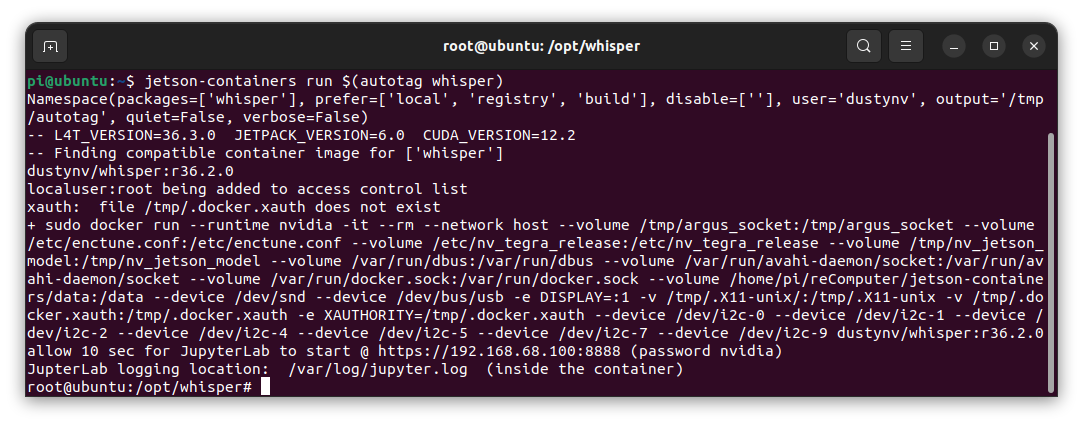

Following the Tutorial Whisper , after starting the container with the command below, you can access Jupyter Lab at https://192.168.68.100:8888 (password: nvidia):

jetson-containers run $(autotag whisper)

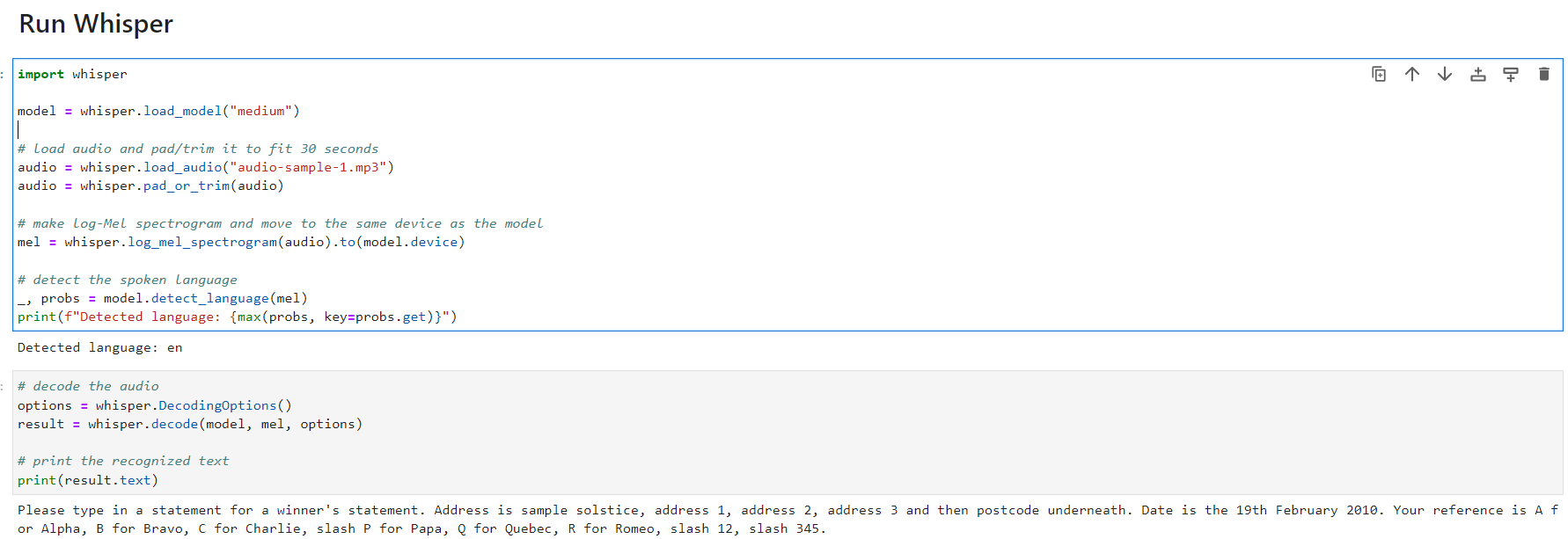

Instead of recording my own audio, I used the Free Transcription Example Files .

After downloading the necessary models, here is the transcription output for the first 30 seconds:

Text LLM and ASR/TTS with Llamaspeak

To start Llamaspeak with text LLM and ASR/TTS enabled, use the following command. Make sure your Hugging Face token is correctly set; I do this by adding HF_TOKEN=hf_xyz123abc456 to my .bashrc file.

Start the nano_llm with:

jetson-containers run --env HUGGINGFACE_TOKEN=$HF_TOKEN \

$(autotag nano_llm) \

python3 -m nano_llm.agents.web_chat --api=mlc \

--model meta-llama/Meta-Llama-3-8B-Instruct \

--asr=riva --tts=piperThat’s all for this post. I’ll continue my journey with the Two Days to a demo series, an introductory set of deep learning tutorials for deploying AI and computer vision in the field using NVIDIA Jetson Orin!

Optional - Preparing RIVA Server

Following the Speech AI Tutorial , you will need to sign up for NGC, generate an API key, and configure the NGC setup. Here are the commands to get started:

sudo gedit /etc/docker/daemon.json

# Add the line:

“default-runtime”: “nvidia”

sudo systemctl restart docker

# Add your user to the Docker group

sudo usermod -aG docker $USER

newgrp docker

# Download the NGC CLI

wget --content-disposition https://ngc.nvidia.com/downloads/ngccli_arm64.zip && unzip ngccli_arm64.zip && chmod u+x ngc-cli/ngc

find ngc-cli/ -type f -exec md5sum {} + | LC_ALL=C sort | md5sum -c ngc-cli.md5

# Add the NGC CLI to your PATH in .bashrc and source it

export PATH=$PATH:/home/pi/ngc-cli

# Configure NGC

ngc config set

# Download RIVA Quickstart

ngc registry resource download-version nvidia/riva/riva_quickstart_arm64:2.12.0

cd riva_quickstart_arm64_v2.12.0

sudo bash riva_init.sh

# Start the RIVA server in a Docker container

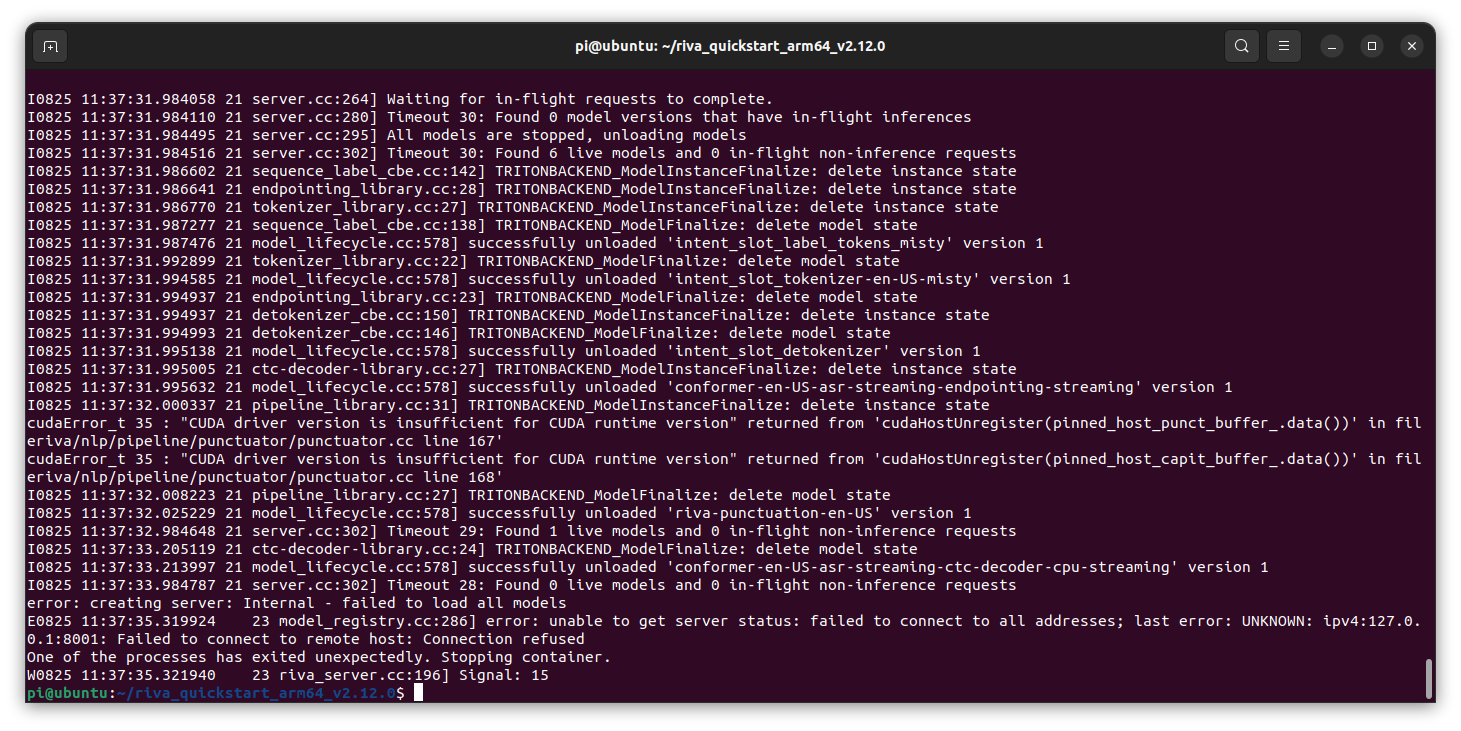

bash riva_start.shThis section was intended for Llamaspeak, but due to a CUDA driver version error, I was unable to proceed with the speech testing. The error occurred when running the command docker logs riva-speech: