Unlocking the Power of Machine Learning with MLC LLM

Machine Learning Compilation for LLM, or MLC LLM, is a universal deployment solution that compiles large language models to run natively on a range of hardware backends. In this blog post, we’ll walk through the setup on Windows Subsystem for Linux (WSL) and run the MLC Chat CLI with a prebuilt Llama 2 model.

Setting Up Your Environment

To get started with MLC LLM, you need to set up your environment properly. Follow these steps:

1. Install TVM

MLC LLM builds on the Apache TVM compiler stack, so install TVM first. You can install it locally using pip:

pip install apache-tvm

Check your TVM build options with the following command:

python3 -c "import tvm; print('\n'.join(f'{k}: {v}' for k, v in tvm.support.libinfo().items()))"
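The full listing of build options is long. As a minimal sketch, the helper below narrows it to a few backend flags. The helper name `pick_build_options` is hypothetical, and the key names (`USE_VULKAN`, `USE_CUDA`, `USE_LLVM`) are assumptions for illustration, so check them against what your own build prints.

```python
def pick_build_options(info, keys=("USE_VULKAN", "USE_CUDA", "USE_LLVM")):
    """Return only the requested build options; missing keys map to 'NOT FOUND'."""
    # `keys` lists assumed option names; adjust them to match your TVM build.
    return {key: info.get(key, "NOT FOUND") for key in keys}


if __name__ == "__main__":
    try:
        import tvm
    except ImportError:
        print("tvm is not installed in this environment")
    else:
        # tvm.support.libinfo() is the same call used in the one-liner above.
        for key, value in pick_build_options(tvm.support.libinfo()).items():
            print(f"{key}: {value}")
```

Because the helper takes a plain dictionary, you can try it without TVM installed by passing any mapping of option names to values.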

2. Install Conda

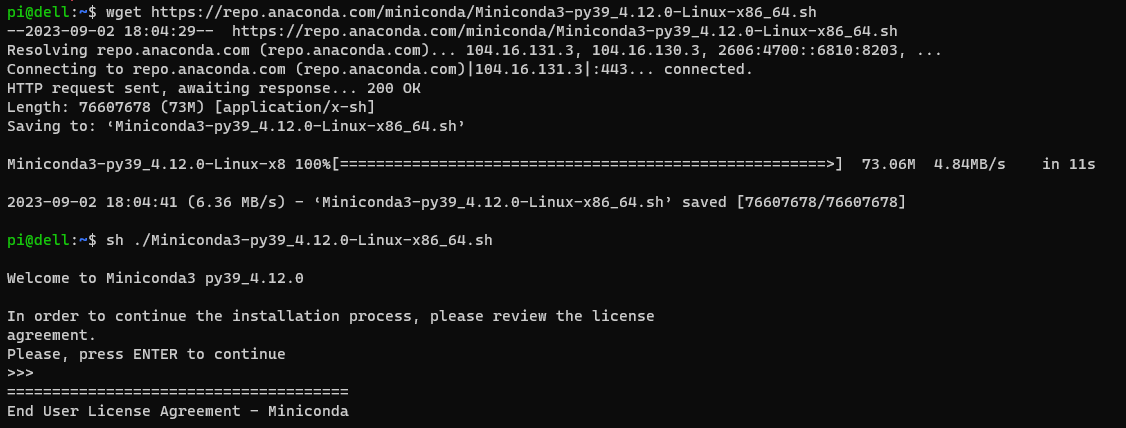

Conda manages the packages and environments used in the rest of this post. The commands below download and run the Miniconda installer inside Windows Subsystem for Linux (WSL):

wget https://repo.anaconda.com/miniconda/Miniconda3-py39_4.12.0-Linux-x86_64.sh

sh ./Miniconda3-py39_4.12.0-Linux-x86_64.sh

After installation, make sure to update Conda, install conda-libmamba-solver, and set it as the default solver:

# update conda

conda update --yes -n base -c defaults conda

# install `conda-libmamba-solver`

conda install --yes -n base conda-libmamba-solver

# set it as the default solver

conda config --set solver libmamba

Validate your Conda installation with:

conda info | grep platform
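If you would rather check the platform from a script, the sketch below parses the same `conda info` output. The function name `parse_platform` is hypothetical, and the regular expression assumes the `platform : <value>` line layout that `conda info` typically prints. Inside WSL on an x86_64 machine, the expected value is `linux-64`.

```python
import re
import shutil
import subprocess


def parse_platform(conda_info_text):
    """Extract the value of the 'platform' line from `conda info` output, or None."""
    match = re.search(r"^\s*platform\s*:\s*(\S+)", conda_info_text, flags=re.MULTILINE)
    return match.group(1) if match else None


if __name__ == "__main__":
    if shutil.which("conda") is None:
        print("conda not found on PATH")
    else:
        # Run `conda info` and capture its text output for parsing.
        output = subprocess.run(
            ["conda", "info"], capture_output=True, text=True, check=False
        ).stdout
        print("platform:", parse_platform(output))
```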

3. Install Vulkan SDK

MLC Chat uses Vulkan for GPU acceleration in this setup, so you’ll need to install the Vulkan SDK. On Windows, download and run the installer:

VulkanSDK-1.3.261.1-Installer.exe

To validate the installation, install vulkan-tools in WSL and run vulkaninfo:

sudo apt-get install vulkan-tools

# Check GPU information

vulkaninfo
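The `vulkaninfo` output is verbose, and usually the only question is whether your GPU shows up. The sketch below pulls out the device names. It assumes the output contains lines of the form `deviceName = ...`, and `extract_device_names` is a hypothetical helper, not part of the Vulkan tools.

```python
import re
import shutil
import subprocess


def extract_device_names(vulkaninfo_text):
    """Return unique GPU names from `deviceName = ...` lines, in first-seen order."""
    names = re.findall(r"deviceName\s*=\s*(.+)", vulkaninfo_text)
    # dict.fromkeys drops duplicates while keeping the original order.
    return list(dict.fromkeys(name.strip() for name in names))


if __name__ == "__main__":
    if shutil.which("vulkaninfo") is None:
        print("vulkaninfo not found; install vulkan-tools first")
    else:
        output = subprocess.run(
            ["vulkaninfo"], capture_output=True, text=True, check=False
        ).stdout
        devices = extract_device_names(output)
        print("Vulkan devices:", devices if devices else "none detected")
```

An empty list suggests that the Vulkan driver is not visible from inside WSL, which is worth resolving before running the chat CLI.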

Exploring MLC Chat

1. Create a New Conda Environment

Now that your environment is set up, let’s explore MLC Chat, the CLI version of MLC LLM.

Create a new conda environment, then install Git LFS and download the prebuilt model libraries and the Llama 2 weights:

conda create -n mlc-chat-venv -c mlc-ai -c conda-forge mlc-chat-nightly

conda activate mlc-chat-venv

conda install git git-lfs

git lfs install

mkdir -p dist/prebuilt

git clone https://github.com/mlc-ai/binary-mlc-llm-libs.git dist/prebuilt/lib

cd dist/prebuilt

git clone https://huggingface.co/mlc-ai/mlc-chat-Llama-2-7b-chat-hf-q4f16_1

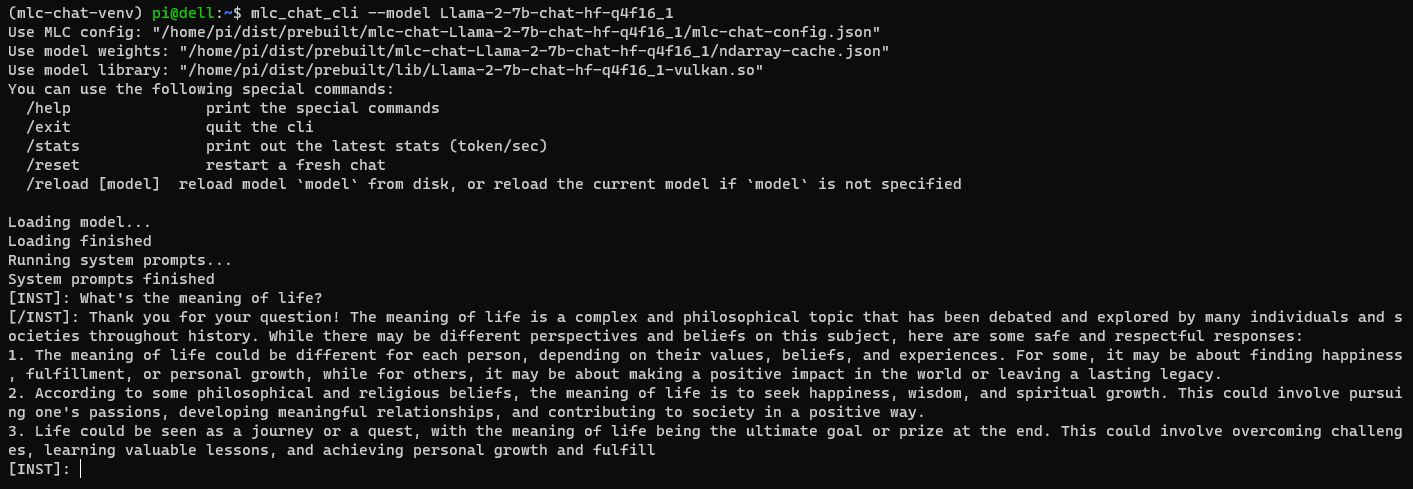
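Before launching the CLI, it can help to confirm that both clones landed where expected. The sketch below is a minimal check. It assumes you run it from the directory in which you ran `mkdir -p dist/prebuilt`, and `missing_dirs` is a hypothetical helper name.

```python
from pathlib import Path

# Directories the clone commands above are expected to create under dist/prebuilt.
EXPECTED_DIRS = ("lib", "mlc-chat-Llama-2-7b-chat-hf-q4f16_1")


def missing_dirs(prebuilt_root):
    """Return the expected subdirectories that do not exist under prebuilt_root."""
    root = Path(prebuilt_root)
    return [name for name in EXPECTED_DIRS if not (root / name).is_dir()]


if __name__ == "__main__":
    missing = missing_dirs("dist/prebuilt")
    print("all prebuilt directories present" if not missing else f"missing: {missing}")
```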

2. Run MLC Chat

You’re now ready to run MLC Chat. Return to the directory that contains dist and start the CLI:

cd ../..

mlc_chat_cli --model Llama-2-7b-chat-hf-q4f16_1

This is the output of the sample question:

With MLC LLM and MLC Chat set up, you can run a large language model locally and experiment with it from the command line. We can’t wait to see what you create.