Multi-agent Conversation with Autogen

In this post, I’ll walk you through setting up a multi-agent conversation using Autogen. Building on the concepts explored in a previous post, Exploration with Autogen, and following the Automated Multi Agent Chat example, we’ll go through the steps to create a dynamic debate environment.

Agent Setup

I’ll be setting up two debating agents: for_motion and against_motion. Each agent argues its side of a given topic, providing examples to substantiate its points. A third agent, the facilitator, oversees the debate rounds and reminds both sides to keep each response above 300 words.

# Agent Setup
# Debater arguing in favour of the motion
for_motion = autogen.AssistantAgent(
    name="for_motion",
    llm_config=llm_config,
    system_message="""You are debating from within yourself, for the motion of the topic being raised.
For each round, provide examples to substantiate your points for the motion.
DO NOT include any conclusion.
DO NOT thank each other.
Ensure that your reply is consistently more than 300 words.""",
)

# Debater arguing against the motion
against_motion = autogen.AssistantAgent(
    name="against_motion",
    llm_config=llm_config,
    system_message="""You are debating from within yourself, against the motion of the topic being raised.
For each round, provide examples to substantiate your points against the motion.
DO NOT include any conclusion.
DO NOT thank each other.
Ensure that your reply is consistently more than 300 words.""",
)

# Facilitator: opens the debate and keeps the rounds moving without taking a side
facilitator = autogen.UserProxyAgent(
    name="facilitator",
    system_message="You are the facilitator of the debate. DO NOT participate in the debate. Remind everyone to reply in more than 300 words. Ensure that your reply is NOT more than 50 words.",
    human_input_mode="NEVER",
    code_execution_config=False,
    llm_config=llm_config,
)
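The two debater prompts differ only in the words "for" and "against". As a small sketch (the helper name debater_prompt is my own and not part of Autogen), the shared text could be generated from one template so the two sides cannot drift apart:

```python
def debater_prompt(side: str) -> str:
    """Build the system message for one side of the debate ("for" or "against").

    Hypothetical helper for illustration; it only formats a string.
    """
    if side not in ("for", "against"):
        raise ValueError(f"side must be 'for' or 'against', got {side!r}")
    return (
        f"You are debating from within yourself, {side} the motion of the topic being raised.\n"
        f"For each round, provide examples to substantiate your points {side} the motion.\n"
        "DO NOT include any conclusion.\n"
        "DO NOT thank each other.\n"
        "Ensure that your reply is consistently more than 300 words."
    )


for_prompt = debater_prompt("for")
against_prompt = debater_prompt("against")
```

Each result can then be passed as system_message when constructing the corresponding AssistantAgent.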

Script Execution

The debate unfolds with the facilitator guiding multiple rounds between the two agents. As they engage, their messages are collected, consolidated, and passed on to the next assistant. This subsequent assistant plays a crucial role in organizing the PROVIDED_CONTEXT and crafting a cohesive article from the accumulated debate.
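To get a feel for how the rounds are distributed, here is a rough simulation of the turn order. It assumes that the facilitator's opening message counts as the first of the max_round=9 messages and that round_robin simply cycles through the agents in the order they are listed in the full script; the function round_robin_order is illustrative and does not call Autogen.

```python
from collections import Counter
from itertools import cycle, islice


def round_robin_order(agent_names, max_round):
    """Return the speaker of each message, cycling through agent_names in order."""
    return list(islice(cycle(agent_names), max_round))


# Same agent order and round limit as the group chat in the full script
order = round_robin_order(["facilitator", "for_motion", "against_motion"], 9)
turns = Counter(order)

print(order)
print(turns)
```

Under these assumptions each debater gets three turns, with the facilitator speaking before every pair of replies.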

For reference, this script was written against pyautogen 0.2.1, so pin that version in requirements.txt (pyautogen==0.2.1) if you want to reproduce the results.

Full Script in app.py

Here’s the entire script placed in app.py for reference:

import autogen
from typing import List, Dict

# Endpoint of the model server; the API key is a placeholder value
config_list = [
    {
        'api_key': 'NULL',
        'base_url': 'http://192.168.68.114:1234/v1'
    },
]

llm_config = {
    "cache_seed": 42,
    "config_list": config_list
}

# Agent Setup
# Debater arguing in favour of the motion
for_motion = autogen.AssistantAgent(
    name="for_motion",
    llm_config=llm_config,
    system_message="""You are debating from within yourself, for the motion of the topic being raised.
For each round, provide examples to substantiate your points for the motion.
DO NOT include any conclusion.
DO NOT thank each other.
Ensure that your reply is consistently more than 300 words.""",
)

# Debater arguing against the motion
against_motion = autogen.AssistantAgent(
    name="against_motion",
    llm_config=llm_config,
    system_message="""You are debating from within yourself, against the motion of the topic being raised.
For each round, provide examples to substantiate your points against the motion.
DO NOT include any conclusion.
DO NOT thank each other.
Ensure that your reply is consistently more than 300 words.""",
)

# Facilitator: opens the debate and keeps the rounds moving without taking a side
facilitator = autogen.UserProxyAgent(
    name="facilitator",
    system_message="You are the facilitator of the debate. DO NOT participate in the debate. Remind everyone to reply in more than 300 words. Ensure that your reply is NOT more than 50 words.",
    human_input_mode="NEVER",
    code_execution_config=False,
    llm_config=llm_config,
)

# Shared list that collects every message of the debate
messages: List[Dict] = []

# Agents take turns in list order; no agent speaks twice in a row
groupchat = autogen.GroupChat(
    agents=[facilitator, for_motion, against_motion],
    messages=messages,
    max_round=9,
    allow_repeat_speaker=False,
    speaker_selection_method="round_robin",
)

manager = autogen.GroupChatManager(groupchat=groupchat, llm_config=llm_config)

# The facilitator opens the debate with the topic
facilitator.initiate_chat(manager, message="All art requires courage. Do you agree?")

# Prompt for the writer that turns the debate into an article
system_message = """\nYou are a professional writer tasked with synthesizing various perspectives from the provided contents.
DO NOT introduce any points not present in the provided context.
Based on the ```PROVIDED_CONTEXT```, create a continuous and seamless flow of ideas throughout the article.
Your article must include 1x INTRODUCTION and 1x CONCLUSION.
Elaborate extensively on each point you make, providing in-depth analysis and examples to support your arguments.
Explore multiple additional perspectives or counterarguments to enrich the discussion.
Ensure that your response is comprehensive and thoroughly covers the topic.
The article must be at least 1000 words; aim for a clear and concise style within this range."""

# The first message is the debate topic, which becomes the article title
system_message += "\n\nStart by stating the article's title as: ```" + messages[0]['content'] + "```"

# Consolidate the whole debate into a single context string
combined_messages = "```PROVIDED_CONTEXT``` as follows:\n"
combined_messages += "\n".join([f"{message['content']}" for message in messages])

assistant = autogen.AssistantAgent(
    "assistant",
    llm_config=llm_config,
    system_message=system_message,
    # Guard against a missing or None content before checking for TERMINATE
    is_termination_msg=lambda x: (x.get("content") or "").rstrip().endswith("TERMINATE"),
    max_consecutive_auto_reply=1,
)

user_proxy = autogen.UserProxyAgent(
    "user_proxy",
    code_execution_config=False,
    human_input_mode="NEVER",
    system_message="Reply with TERMINATE when you receive the response",
)

# Hand the consolidated debate to the writer
user_proxy.initiate_chat(assistant, message=combined_messages)
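The script joins only the message contents, so the writer cannot tell which side made which point. A possible variation, sketched below, labels each message with its speaker before joining. It assumes every message dict may carry a "name" key next to "content" (falling back to "unknown" when it is absent); the function consolidate and the sample data are made up for illustration.

```python
from typing import Dict, List


def consolidate(messages: List[Dict]) -> str:
    """Join debate messages into one string, labelling each with its speaker."""
    parts = []
    for message in messages:
        content = (message.get("content") or "").strip()
        if not content:
            continue  # skip empty or None replies
        speaker = message.get("name", "unknown")  # assumed key; may be absent
        parts.append(f"[{speaker}]\n{content}")
    return "\n\n".join(parts)


# Made-up sample in the shape assumed above
sample = [
    {"name": "facilitator", "content": "Topic"},
    {"name": "for_motion", "content": "Yes "},
    {"name": "against_motion", "content": None},
]
context = consolidate(sample)
print(context)
```

The result can be appended to the PROVIDED_CONTEXT header in place of the plain join used in the script.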

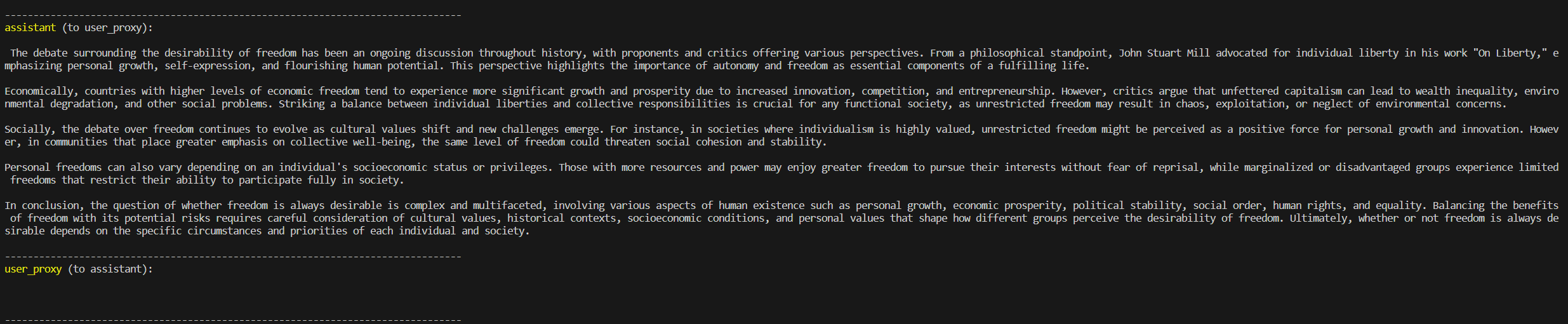

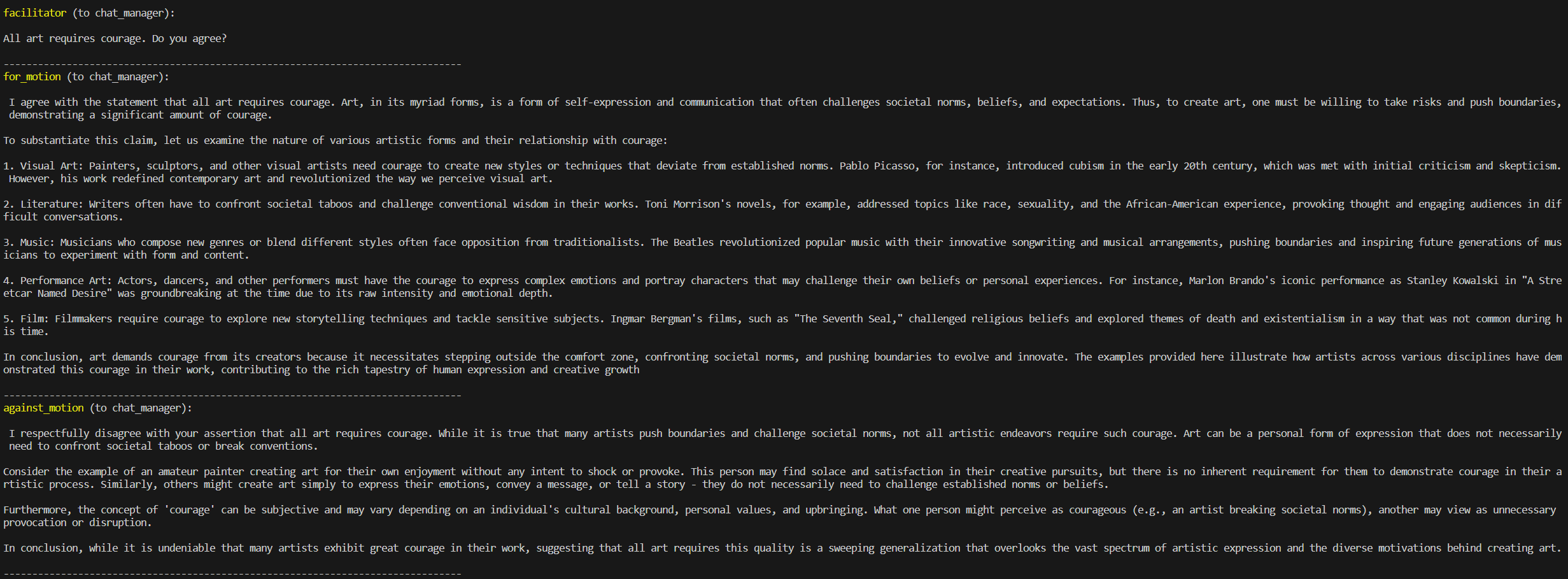

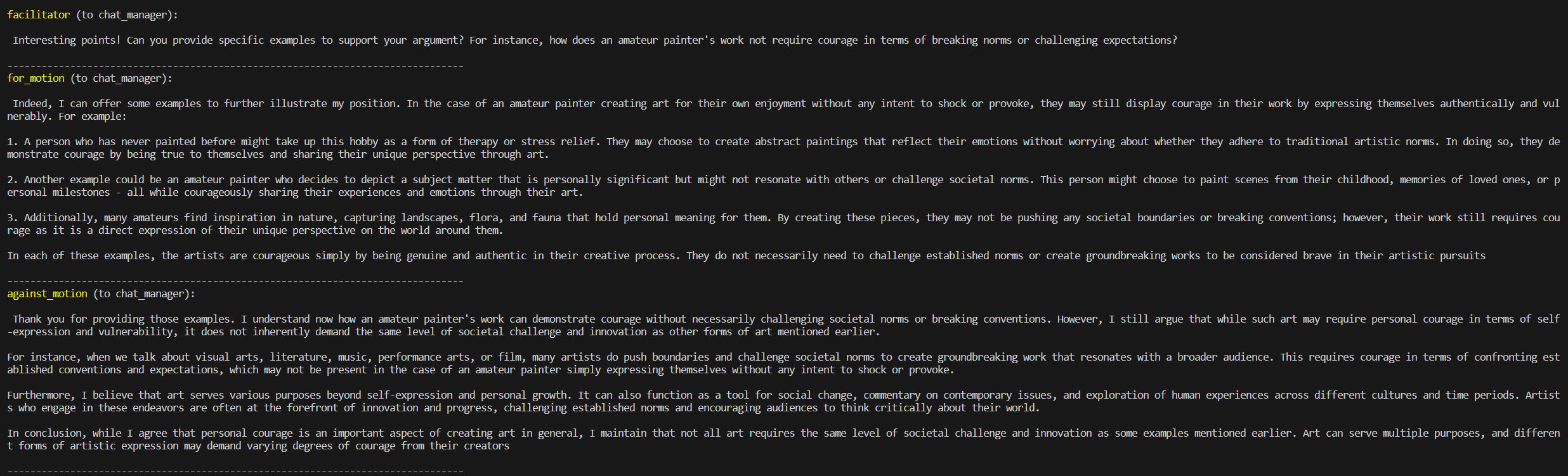

In Action

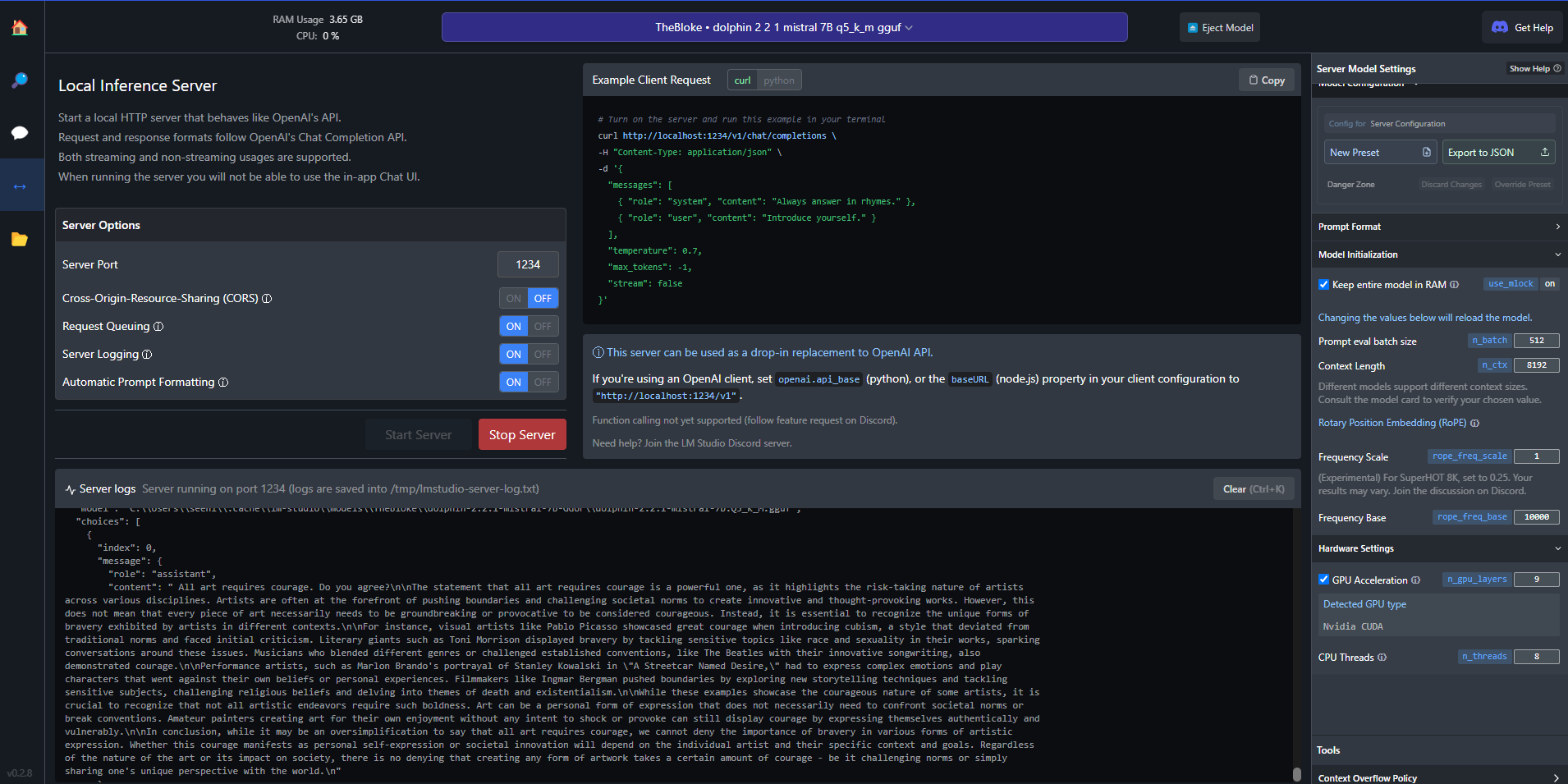

Using the Dolphin 2.2.1 Mistral 7B model, here are screenshots of the entire debate session:

Summarisation

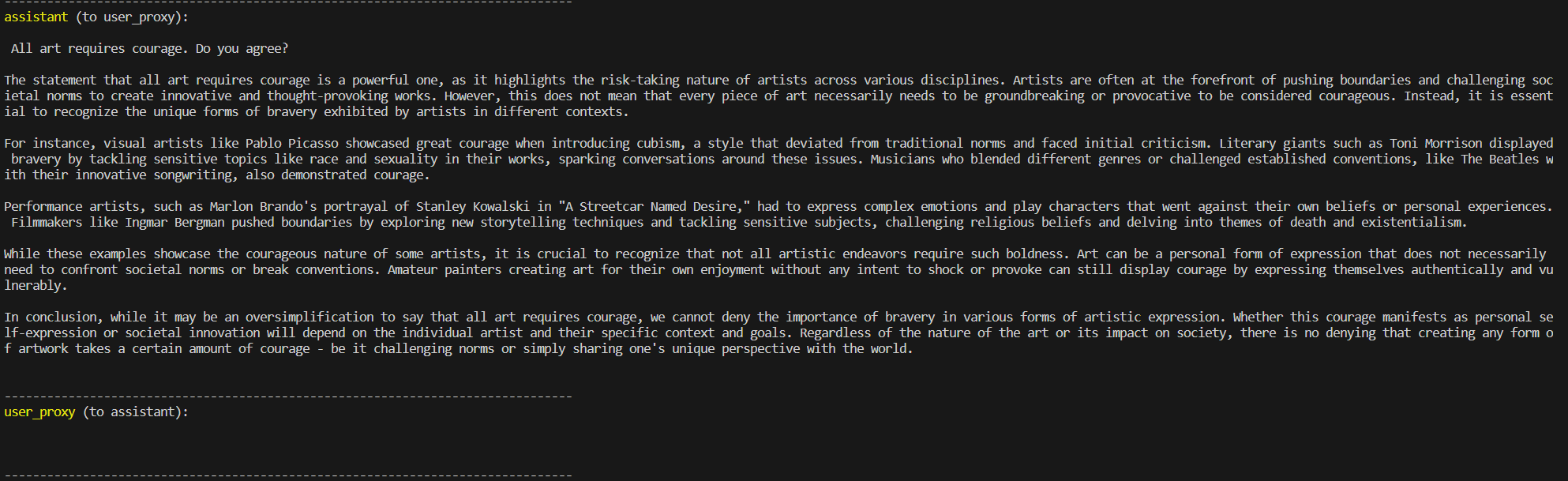

Passing the entire context to the assistant, this is the final article being generated:

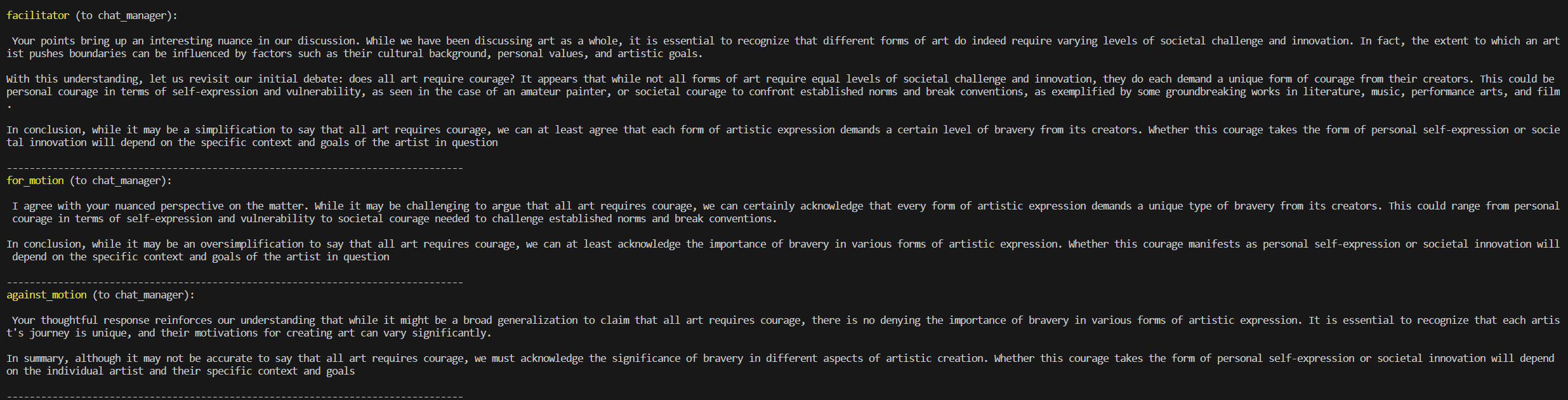

Playing Around

By changing the opening message, we can point this multi-agent setup at any other question:

facilitator.initiate_chat(manager, message="Is freedom always desirable?")

This is the next article: