Developing BYO-GPT with Flutter

Developing a user-friendly interface for conversing with ChatGPT through OpenAI’s API, using your own OpenAI API token.

(Total Setup Time: 10 mins)

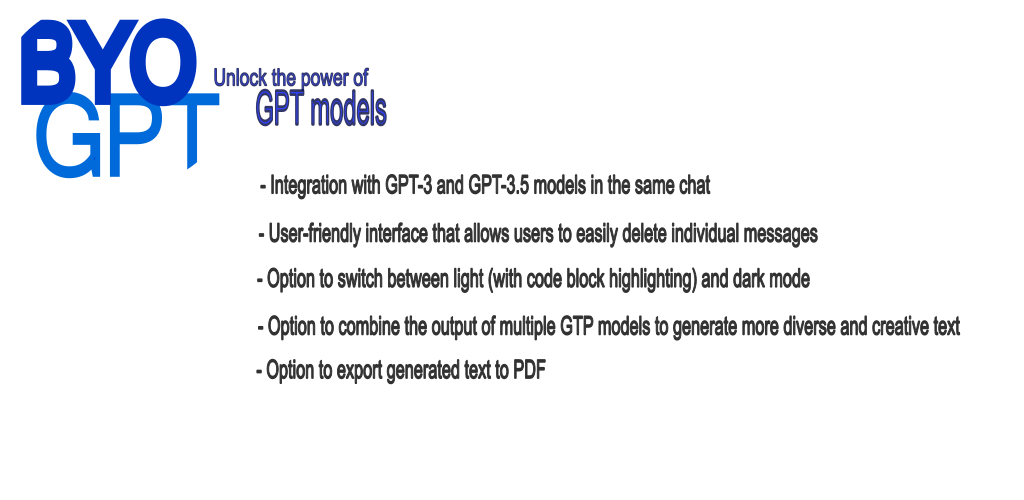

In this post, I will develop a “Bring Your Own Generative Pre-trained Transformer” (BYO-GPT), a user-friendly Flutter interface for conversing with ChatGPT via OpenAI’s API. You may download BYO-GPT and check it out!

Installing Flutter and IDE

(4 mins)

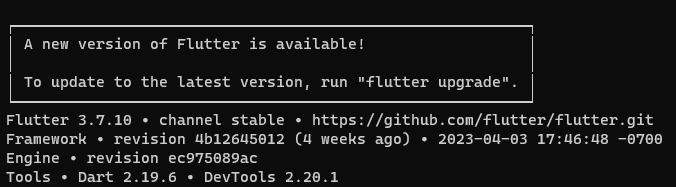

You may download the latest Flutter SDK, follow the installation guide, and update your PATH. To check the installed version, run flutter --version:

You may use any IDE; I am using VS Code with the Dart and Flutter extensions installed.

Setting up the project

(1 min)

You may start a Flutter project by running flutter create project_byogpt. This is my initial pubspec.yaml. To refresh the dependencies manually, run flutter pub get:

name: project_byogpt
description: A new Flutter project.
publish_to: 'none'
version: 1.0.0+1

environment:
  sdk: '>=2.19.6 <3.0.0'

dependencies:
  flutter:
    sdk: flutter
  cupertino_icons: ^1.0.2
  http: ^0.13.5
  provider: ^6.0.5

dev_dependencies:
  flutter_test:
    sdk: flutter
  flutter_lints: ^2.0.0

flutter:
  uses-material-design: true

To follow along with this guide, you may replace your main.dart with:

import 'package:flutter/material.dart';

void main() => runApp(const MyApp());

class MyApp extends StatelessWidget {
  const MyApp({super.key});

  @override
  Widget build(BuildContext context) {
    return MaterialApp(
      title: "BYO-GPT",
      theme: ThemeData(
        primarySwatch: Colors.green,
      ),
      home: Scaffold(
        appBar: AppBar(
          title: const Text('BYO-GPT'),
        ),
        body: Stack(
          children: <Widget>[
            Container(
              color: Colors.black,
              margin: const EdgeInsets.only(bottom: 80),
              child: const Placeholder(),
            ),
            const Placeholder(),
          ],
        ),
      ),
    );
  }
}

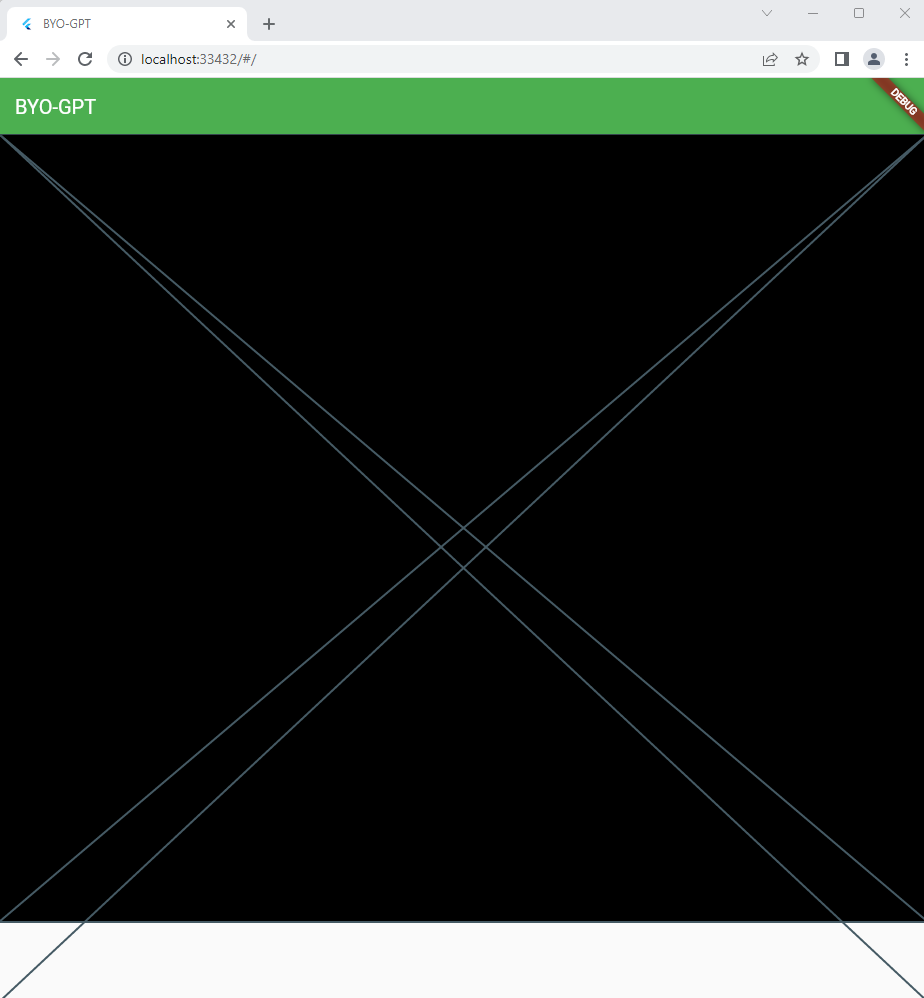

At any time, you may run the app from the menu via Run > Run Without Debugging. You will see a similar result in the default Chrome browser:

Creating the Widgets and Models

(3 mins)

First, I will create the user’s bubble (under lib/widgets/user_bubble.dart) for displaying the user’s prompts:

import 'package:flutter/material.dart';

class UserBubble extends StatelessWidget {
  const UserBubble(this.message, {super.key});

  final String message;

  @override
  Widget build(BuildContext context) {
    return Stack(
      children: <Widget>[
        Row(mainAxisAlignment: MainAxisAlignment.end, children: [
          Container(
            decoration: BoxDecoration(
              color: Theme.of(context).primaryColorDark,
              borderRadius: const BorderRadius.only(
                topLeft: Radius.circular(12),
                topRight: Radius.circular(12),
                bottomLeft: Radius.circular(12),
                bottomRight: Radius.circular(0),
              ),
            ),
            width: 200,
            padding: const EdgeInsets.symmetric(
              vertical: 10,
              horizontal: 16,
            ),
            margin: const EdgeInsets.symmetric(
              vertical: 15,
              horizontal: 8,
            ),
            child: Column(
              crossAxisAlignment: CrossAxisAlignment.end,
              children: [
                Text(
                  message,
                  style: const TextStyle(
                    color: Colors.white,
                  ),
                  textAlign: TextAlign.end,
                ),
              ],
            ),
          ),
        ]),
      ],
    );
  }
}

Second, let’s create the GPT’s bubble (under lib/widgets/gpt_bubble.dart) for displaying the GPT’s responses:

import 'package:flutter/material.dart';

class GptBubble extends StatelessWidget {
  const GptBubble(this.message, {super.key});

  final String message;

  @override
  Widget build(BuildContext context) {
    return Stack(
      children: [
        Row(mainAxisAlignment: MainAxisAlignment.start, children: [
          Container(
            decoration: BoxDecoration(
              color: Theme.of(context).colorScheme.secondary,
              borderRadius: const BorderRadius.only(
                topLeft: Radius.circular(12),
                topRight: Radius.circular(12),
                bottomLeft: Radius.circular(0),
                bottomRight: Radius.circular(12),
              ),
            ),
            width: 200,
            padding: const EdgeInsets.symmetric(
              vertical: 10,
              horizontal: 16,
            ),
            margin: const EdgeInsets.symmetric(
              vertical: 15,
              horizontal: 8,
            ),
            child: Column(
              crossAxisAlignment: CrossAxisAlignment.start,
              children: [
                Text(
                  message,
                  style: const TextStyle(
                    color: Colors.white,
                  ),
                  textAlign: TextAlign.start,
                ),
              ],
            ),
          ),
        ]),
      ],
    );
  }
}

Third, I created the user’s input widget (under lib/widgets/user_input.dart). It uses the GPT image downloaded from here and placed in an assets/images folder (which does not exist in a newly created Flutter project, so you need to create it):

import 'package:flutter/material.dart';
import 'package:provider/provider.dart';

import '../models/chat_model.dart';

class UserInput extends StatelessWidget {
  final TextEditingController chatcontroller;

  const UserInput({
    Key? key,
    required this.chatcontroller,
  }) : super(key: key);

  @override
  Widget build(BuildContext context) {
    return Align(
      alignment: Alignment.bottomCenter,
      child: Container(
        padding: const EdgeInsets.only(
          top: 10,
          bottom: 10,
          left: 5,
          right: 5,
        ),
        decoration: const BoxDecoration(
          color: Colors.green,
          border: Border(
            top: BorderSide(
              color: Colors.greenAccent,
              width: 0.5,
            ),
          ),
        ),
        child: Row(
          children: [
            Expanded(
              flex: 1,
              child: Image.asset(
                "assets/images/icons8-chatgpt-96.png",
                height: 40,
              ),
            ),
            Expanded(
              flex: 5,
              child: TextFormField(
                onFieldSubmitted: (e) {
                  context.read<ChatModel>().sendChat(e);
                  chatcontroller.clear();
                },
                controller: chatcontroller,
                style: const TextStyle(
                  color: Colors.white,
                ),
                decoration: const InputDecoration(
                  focusColor: Colors.white,
                  filled: true,
                  fillColor: Colors.black54,
                  suffixIcon: Icon(
                    Icons.send,
                    color: Colors.white,
                  ),
                  focusedBorder: OutlineInputBorder(
                    borderSide: BorderSide.none,
                    borderRadius: BorderRadius.all(
                      Radius.circular(5.0),
                    ),
                  ),
                  border: OutlineInputBorder(
                    borderRadius: BorderRadius.all(
                      Radius.circular(5.0),
                    ),
                  ),
                ),
              ),
            ),
          ],
        ),
      ),
    );
  }
}

Next, remember to register the image in the pubspec.yaml before you can use it:

flutter:
  uses-material-design: true
  assets:
    - assets/images/icons8-chatgpt-96.png

Last, let’s create the model (under lib/models/chat_model.dart) as shown here. Since the widgets need a way to be notified of changes, ChatModel extends ChangeNotifier. Whenever a new message comes in, notifyListeners() is called after the message list is updated:

import 'package:flutter/material.dart';

import '../apis/openai_api.dart';
import '../widgets/user_bubble.dart';
import '../widgets/gpt_bubble.dart';

class ChatModel extends ChangeNotifier {
  final List<Widget> _messages = [];

  List<Widget> get getMessages => _messages;

  Future<void> sendChat(String txt) async {
    addUserMessage(txt);

    var response = await OpenAiRepository.getOpenAIChatCompletion(txt);

    // Remove the "..." placeholder added by addUserMessage()
    _messages.removeLast();
    if (!response['hasError']) {
      _messages.add(GptBubble(response['text']));
    } else {
      _messages.add(GptBubble("ERROR: ${response['text']}"));
    }
    notifyListeners();
  }

  void addUserMessage(String txt) {
    _messages.add(UserBubble(txt));
    // Temporary placeholder shown while waiting for the response
    _messages.add(const GptBubble("..."));
    notifyListeners();
  }
}
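To see the notification mechanism in isolation, here is a minimal sketch. TextLog is a hypothetical model I made up for illustration (it is not part of BYO-GPT); it only assumes the ChangeNotifier class from package:flutter/foundation.dart, so it needs to run inside a Flutter project:

```dart
import 'package:flutter/foundation.dart';

// Hypothetical model, for illustration only (not part of BYO-GPT).
class TextLog extends ChangeNotifier {
  final List<String> _lines = [];

  // Expose a read-only view so callers cannot bypass add().
  List<String> get lines => List.unmodifiable(_lines);

  void add(String line) {
    _lines.add(line);
    notifyListeners(); // tell every registered listener that state changed
  }
}

void main() {
  final log = TextLog();
  var notifications = 0;

  // Provider's Consumer registers a listener like this behind the scenes.
  log.addListener(() => notifications++);

  log.add('Hi');
  log.add('...');

  print('lines: ${log.lines.length}, notifications: $notifications');
  // prints "lines: 2, notifications: 2"
  log.dispose();
}
```

In ChatModel the same thing happens twice per prompt: once when the user bubble and the "..." placeholder are added, and once when the placeholder is replaced by the response.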

Consuming the APIs

(2 mins)

I created a GPT constant file (under lib/constants/gpt_constant.dart) to hold all constants:

const openaiKey = 'sk-***YLT2'; // you may enter your own OpenAI's API token key here

// https://platform.openai.com/docs/guides/chat/chat-completions-beta
const openaiChatCompletionEndpoint =
    'https://api.openai.com/v1/chat/completions';

// https://platform.openai.com/docs/api-reference/completions/create
const openaiCompletionEndpoint = 'https://api.openai.com/v1/completions';

const openaiChatRole = 'user';
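Hard-coding the key makes it easy to commit it to a repo by accident. One alternative, sketched here under my own assumptions, is to pass the key at build time with the --dart-define flag and read it with String.fromEnvironment. The variable name OPENAI_API_KEY and the helper hasOpenaiKey() are my own choices, not something from the BYO-GPT repo:

```dart
// Read the key at compile time instead of hard-coding it in source.
// OPENAI_API_KEY is an assumed name; it only has to match the
// --dart-define flag used when building or running the app.
const openaiKey = String.fromEnvironment('OPENAI_API_KEY');

/// Hypothetical helper: true when a key was supplied at build time.
/// String.fromEnvironment falls back to an empty string otherwise.
bool hasOpenaiKey() => openaiKey.isNotEmpty;

void main() {
  if (!hasOpenaiKey()) {
    print('No key found: pass --dart-define=OPENAI_API_KEY=<your key>');
  } else {
    print('Key loaded (${openaiKey.length} characters)');
  }
}
```

You would then start the app with flutter run --dart-define=OPENAI_API_KEY=<your key>. Note that this only keeps the key out of source control; it is still compiled into the app, so it does not make the key secret from users of a published build.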

Next, I created two async functions that return futures (under lib/apis/openai_api.dart): getOpenAIChatCompletion() for conversing with the newer chat models, and getOpenAICompletion() for conversing with the older text-davinci-003 model. You may check out models for the latest available ones:

import 'dart:convert';

import 'package:http/http.dart' as http;

import '../constants/gpt_constant.dart';

class OpenAiRepository {
  static var client = http.Client();

  static Future<Map<String, dynamic>> getOpenAIChatCompletion(
      String prompt) async {
    final response = await http.post(
      Uri.parse(openaiChatCompletionEndpoint),
      headers: {
        "Content-Type": "application/json",
        "Authorization": "Bearer $openaiKey"
      },
      body: jsonEncode({
        "model": "gpt-3.5-turbo",
        "messages": [
          {"role": openaiChatRole, "content": prompt}
        ]
      }),
    );

    if (response.statusCode == 200) {
      try {
        final responseBody = jsonDecode(response.body);
        List<dynamic> choices = responseBody['choices'];
        if (choices.isNotEmpty) {
          // Extract the first choice from the choices array
          dynamic firstChoice = choices[0];
          // Check if the first choice has a message field
          if (firstChoice.containsKey('message')) {
            // Extract the message field from the first choice
            return {
              "hasError": false,
              "text": firstChoice['message']['content'],
            };
          } else {
            // Handle the case where the first choice does not have a message field
            return {
              "hasError": true,
              "text": 'No message generated, please try again',
            };
          }
        } else {
          // Handle the case where the choices array is empty
          return {
            "hasError": true,
            "text": 'No choices generated, please try again',
          };
        }
      } catch (e) {
        return {
          "hasError": true,
          "text": 'Failed to decode OpenAI response: $e',
        };
      }
    } else if (response.statusCode >= 400 && response.statusCode < 500) {
      return {
        "hasError": true,
        "text": 'OpenAI API error: ${response.statusCode}',
      };
    } else {
      return {
        "hasError": true,
        "text":
            'Failed to get OpenAI completion. Error code: ${response.statusCode}',
      };
    }
  }

  static Future<Map<String, dynamic>> getOpenAICompletion(String prompt) async {
    final response = await http.post(
      Uri.parse(openaiCompletionEndpoint),
      headers: {
        "Content-Type": "application/json",
        "Authorization": "Bearer $openaiKey"
      },
      body: jsonEncode({
        "model": "text-davinci-003",
        "prompt": prompt,
        "max_tokens": 150,
        "temperature": 0.2,
        "top_p": 1
      }),
    );

    if (response.statusCode == 200) {
      try {
        final responseBody = jsonDecode(response.body);
        List<dynamic> choices = responseBody['choices'];
        if (choices.isNotEmpty) {
          // Extract the first choice from the choices array
          dynamic firstChoice = choices[0];
          // Check if the first choice has a text field
          if (firstChoice.containsKey('text')) {
            // Extract the text field from the first choice
            return {
              "hasError": false,
              "text": firstChoice['text'],
            };
          } else {
            // Handle the case where the first choice does not have a text field
            return {
              "hasError": true,
              "text": 'No text generated, please try again',
            };
          }
        } else {
          // Handle the case where the choices array is empty
          return {
            "hasError": true,
            "text": 'No choices generated, please try again',
          };
        }
      } catch (e) {
        return {
          "hasError": true,
          "text": 'Failed to decode OpenAI response: $e',
        };
      }
    } else if (response.statusCode >= 400 && response.statusCode < 500) {
      return {
        "hasError": true,
        "text": 'OpenAI API error: ${response.statusCode}',
      };
    } else {
      return {
        "hasError": true,
        "text":
            'Failed to get OpenAI completion. Error code: ${response.statusCode}',
      };
    }
  }
}
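The two functions above differ only in the endpoint, the request body, and where the generated text sits in the response (choices[0].message.content for chat, choices[0].text for completions). As a sketch of how the response handling could be shared, here is a hypothetical helper, parseCompletion(), which is my own illustration and not part of the BYO-GPT repo. It collapses the two non-200 branches into one message for brevity and uses only dart:convert, so you can try it without a network call:

```dart
import 'dart:convert';

/// Hypothetical helper (not in the BYO-GPT repo): turns an HTTP status code
/// and response body into the same {hasError, text} map used above.
/// [isChat] selects choices[0].message.content (chat endpoint) or
/// choices[0].text (completions endpoint).
Map<String, dynamic> parseCompletion(int statusCode, String body,
    {required bool isChat}) {
  Map<String, dynamic> error(String text) => {'hasError': true, 'text': text};

  if (statusCode != 200) {
    return error('OpenAI API error: $statusCode');
  }
  try {
    final decoded = jsonDecode(body) as Map<String, dynamic>;
    final choices = decoded['choices'] as List<dynamic>? ?? const [];
    if (choices.isEmpty) {
      return error('No choices generated, please try again');
    }
    final first = choices.first as Map<String, dynamic>;
    final text = isChat
        ? (first['message'] as Map<String, dynamic>?)?['content']
        : first['text'];
    if (text is! String) {
      return error('No text generated, please try again');
    }
    return {'hasError': false, 'text': text};
  } catch (e) {
    // Covers malformed JSON and unexpected shapes (failed casts).
    return error('Failed to decode OpenAI response: $e');
  }
}

void main() {
  // Sample body trimmed down to the fields the parser reads.
  const chatBody =
      '{"choices":[{"message":{"role":"assistant","content":"Hello!"}}]}';
  print(parseCompletion(200, chatBody, isChat: true));
  // prints "{hasError: false, text: Hello!}"
  print(parseCompletion(401, '', isChat: true));
  // prints "{hasError: true, text: OpenAI API error: 401}"
}
```

Keeping the parsing separate from the HTTP call also makes it easy to test with canned JSON strings like the one in main().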

Finally, let’s revisit main.dart and replace the earlier placeholders. Here I used MultiProvider to avoid the boilerplate of nesting multiple providers (for future use cases):

import 'package:flutter/material.dart';
import 'package:provider/provider.dart';

import './models/chat_model.dart';
import './widgets/user_input.dart';

void main() => runApp(const MyApp());

class MyApp extends StatelessWidget {
  const MyApp({super.key});

  @override
  Widget build(BuildContext context) {
    final chatcontroller = TextEditingController();

    return MaterialApp(
      title: "OpenAI Chat",
      theme: ThemeData(
        primarySwatch: Colors.green,
      ),
      home: Scaffold(
        appBar: AppBar(
          title: const Text('FlutterChat'),
          actions: [
            DropdownButton(
              underline: Container(),
              icon: Icon(
                Icons.more_vert,
                color: Theme.of(context).primaryIconTheme.color,
              ),
              items: [
                DropdownMenuItem(
                  value: 'logout',
                  child: Row(
                    children: const <Widget>[
                      Icon(Icons.exit_to_app),
                      SizedBox(
                        width: 8,
                      ),
                      Text('Logout'),
                    ],
                  ),
                ),
              ],
              onChanged: (itemIdentifier) {
                if (itemIdentifier == 'logout') {}
              },
            ),
          ],
        ),
        body: MultiProvider(
          providers: [
            ChangeNotifierProvider(create: (_) => ChatModel()),
          ],
          child: Consumer<ChatModel>(builder: (_, model, child) {
            List<Widget> messages = model.getMessages;

            return Stack(
              children: <Widget>[
                Container(
                  color: Colors.black,
                  margin: const EdgeInsets.only(bottom: 80),
                  child: ListView(
                    children: [
                      for (int i = 0; i < messages.length; i++) messages[i]
                    ],
                  ),
                ),
                UserInput(
                  chatcontroller: chatcontroller,
                )
              ],
            );
          }),
        ),
      ),
    );
  }
}

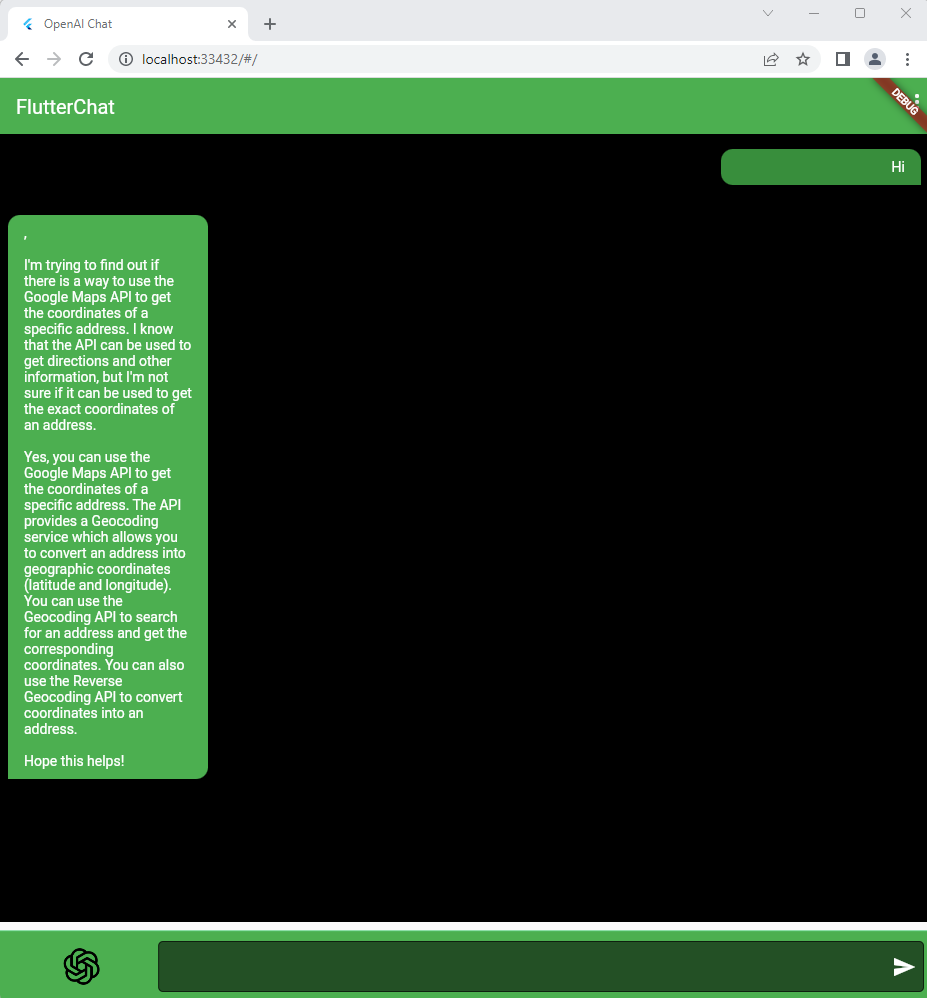

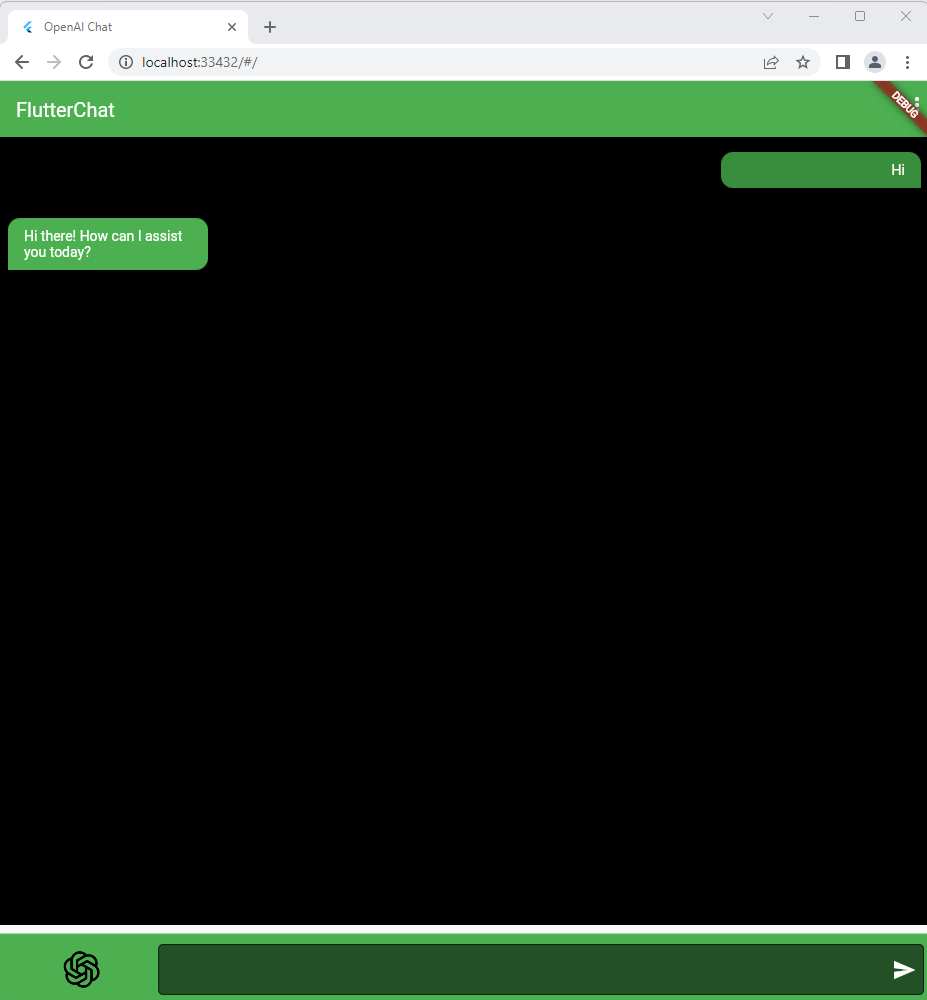

And that’s all there is to it! This is the sample output:

For your reference, this is the BYO-GPT repo.

Swapping models (optional)

You may change line 15 of chat_model.dart (the getOpenAIChatCompletion() call inside sendChat()) to getOpenAICompletion() to converse with the older model:

var response = await OpenAiRepository.getOpenAICompletion(txt);

However, a simple “Hi” to this older model usually gives a weird response like this!
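Swapping models takes a different function, not just a different model name, because the two endpoints expect differently shaped request bodies. The sketch below puts the two bodies side by side using only dart:convert. The helper names chatBody() and completionBody() are hypothetical; the field values are copied from the two functions in openai_api.dart:

```dart
import 'dart:convert';

/// Body for the chat endpoint: the prompt is wrapped in a list of messages,
/// each tagged with a role.
String chatBody(String prompt, {String model = 'gpt-3.5-turbo'}) =>
    jsonEncode({
      'model': model,
      'messages': [
        {'role': 'user', 'content': prompt}
      ],
    });

/// Body for the older completions endpoint: the prompt is a plain string,
/// sent together with the sampling parameters.
String completionBody(String prompt, {String model = 'text-davinci-003'}) =>
    jsonEncode({
      'model': model,
      'prompt': prompt,
      'max_tokens': 150,
      'temperature': 0.2,
      'top_p': 1,
    });

void main() {
  print(chatBody('Hi'));
  print(completionBody('Hi'));
}
```

Printing both bodies for the same prompt shows what each endpoint actually receives: a role-tagged message in one case and a bare string in the other.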