Building a basic Chain with LangChain


2 mins read

LangChain is a framework for developing applications powered by language models. Building on the setup from the previous post, I will use Llama.cpp within LangChain to build the simplest form of chain.

Preparation

(2 mins)

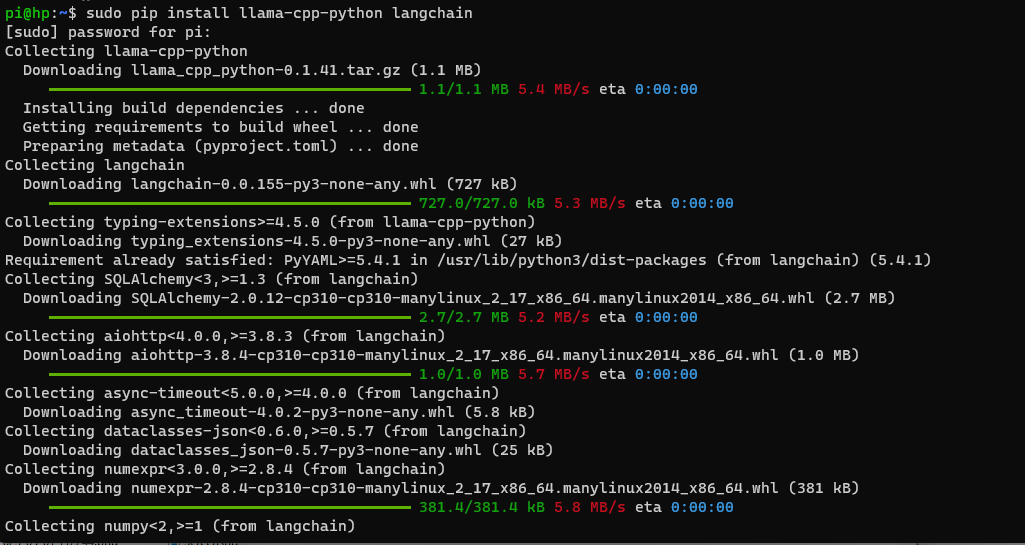

First, install the required Python packages:

sudo pip install llama-cpp-python langchain
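A quick way to confirm the install worked is to ask Python whether it can locate both packages. This is only a sketch: `check_installed` is a hypothetical helper of mine, and it only checks that the modules can be found, not that they load a model correctly.

```python
import importlib.util

# Import names differ from pip names: llama-cpp-python is imported as llama_cpp.
REQUIRED_MODULES = ["llama_cpp", "langchain"]


def check_installed(modules):
    """Map each module name to True if Python can locate it, else False."""
    return {name: importlib.util.find_spec(name) is not None for name in modules}


status = check_installed(REQUIRED_MODULES)
print(status)
```

If either entry prints as `False`, the install step above most likely did not complete for the Python interpreter you are running.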

LLM Model

(3 mins)

- Starts an interactive Python session from the directory that holds the model file:

# Runs python3 from the location where the model file is located

cd /home/pi/llama.cpp/models/13B

python3

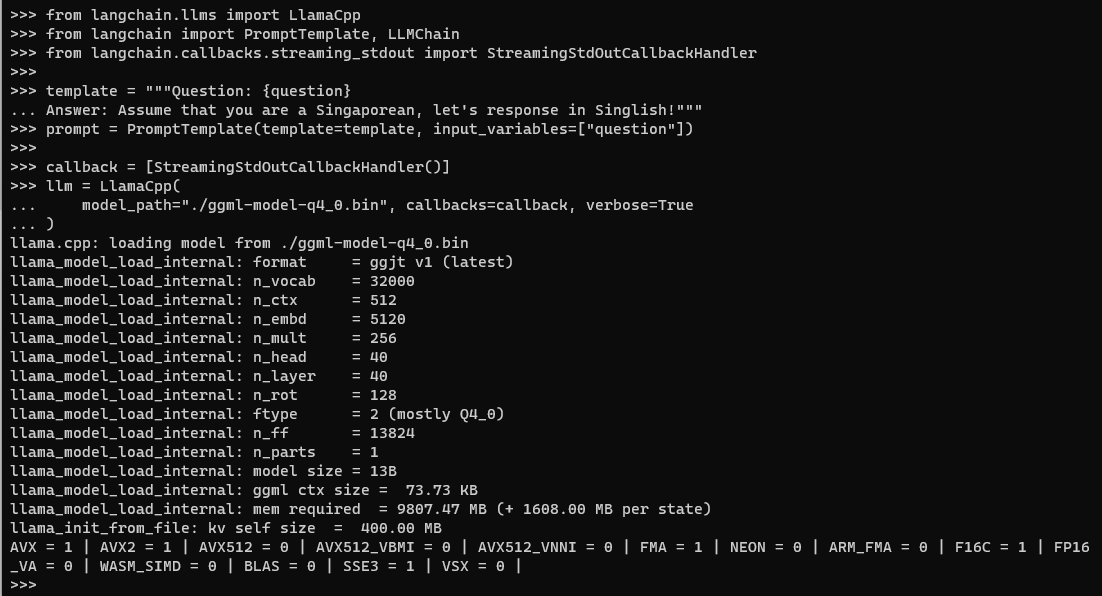

- Sets up the necessary imports and formats the prompt template:

from langchain.llms import LlamaCpp

from langchain import PromptTemplate, LLMChain

from langchain.callbacks.streaming_stdout import StreamingStdOutCallbackHandler

template = """Question: {question}

Answer: Assume that you are a Singaporean, let's respond in Singlish!"""

prompt = PromptTemplate(template=template, input_variables=["question"])
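To see what the template does before any model is involved, here is a minimal sketch using only Python's built-in `str.format`. It assumes the default f-string-style placeholders and a shortened version of the template; `render_prompt` is a hypothetical helper, not part of LangChain.

```python
# Plain-Python illustration of filling the {question} placeholder.
# render_prompt is a hypothetical helper, not a LangChain function.
template = "Question: {question}\n\nAnswer:"


def render_prompt(template: str, **variables: str) -> str:
    """Substitute named placeholders in the template with the given values."""
    return template.format(**variables)


rendered = render_prompt(template, question="What is LangChain?")
print(rendered)
```

The model only ever sees the rendered string, so printing it like this is a cheap way to debug a prompt.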

- A recent LangChain PR refactored CallbackManager, so the streaming handler is now passed as a list through the callbacks argument:

callback = [StreamingStdOutCallbackHandler()]

llm = LlamaCpp(

model_path="./ggml-model-q4_0.bin", callbacks=callback, verbose=True

)
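The streaming handler is what makes tokens appear on screen as they are generated. The sketch below imitates that idea in plain Python. `PrintTokenHandler` and `fake_generate` are hypothetical stand-ins (no real model is called); only the hook name `on_llm_new_token` mirrors the one LangChain callback handlers use.

```python
import sys
from typing import Iterable, List


class PrintTokenHandler:
    """Hypothetical stand-in for a streaming handler: prints each token as it arrives."""

    def on_llm_new_token(self, token: str) -> None:
        sys.stdout.write(token)
        sys.stdout.flush()


def fake_generate(tokens: Iterable[str], callbacks: List[PrintTokenHandler]) -> str:
    """Pretend model: emits tokens one by one and notifies every callback."""
    pieces = []
    for token in tokens:
        for handler in callbacks:
            handler.on_llm_new_token(token)
        pieces.append(token)
    return "".join(pieces)


text = fake_generate(["Can ", "lah", "!"], callbacks=[PrintTokenHandler()])
```

Because the callbacks are a plain list, more than one handler can listen to the same token stream.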

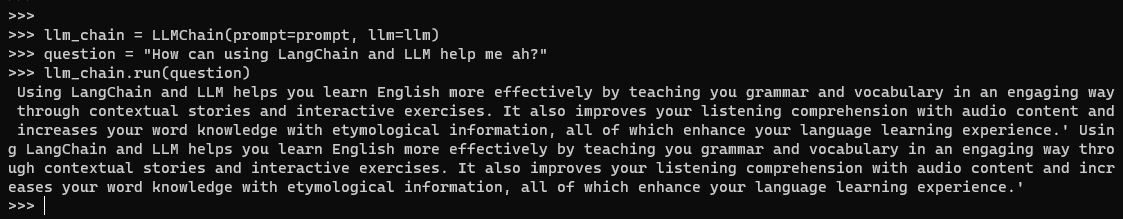

- Creates the chain, which takes the question, formats it with the PromptTemplate, and passes the formatted prompt to the LLM:

llm_chain = LLMChain(prompt=prompt, llm=llm)

question = "How can using LangChain and LLM help me ah?"

llm_chain.run(question)
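Conceptually, the chain is just two steps glued together: format the prompt, then call the model. Here is a rough sketch of that composition, assuming a stand-in model. `make_chain` and `echo_llm` are hypothetical names of mine, and the real LLMChain does more than this (callbacks, output handling, and so on).

```python
from typing import Callable


def make_chain(template: str, llm: Callable[[str], str]) -> Callable[[str], str]:
    """Return a function that formats the question into the prompt, then calls the model."""

    def run(question: str) -> str:
        prompt_text = template.format(question=question)
        return llm(prompt_text)

    return run


def echo_llm(prompt_text: str) -> str:
    """Hypothetical stand-in model: reports how many characters of prompt it received."""
    return f"(model saw {len(prompt_text)} characters)"


chain = make_chain("Question: {question}\n\nAnswer:", echo_llm)
answer = chain("How can using LangChain and LLM help me ah?")
print(answer)
```

Swapping `echo_llm` for a function that calls a real model would leave `make_chain` unchanged, which is the main appeal of structuring things as a chain.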

Memory

(2 mins)

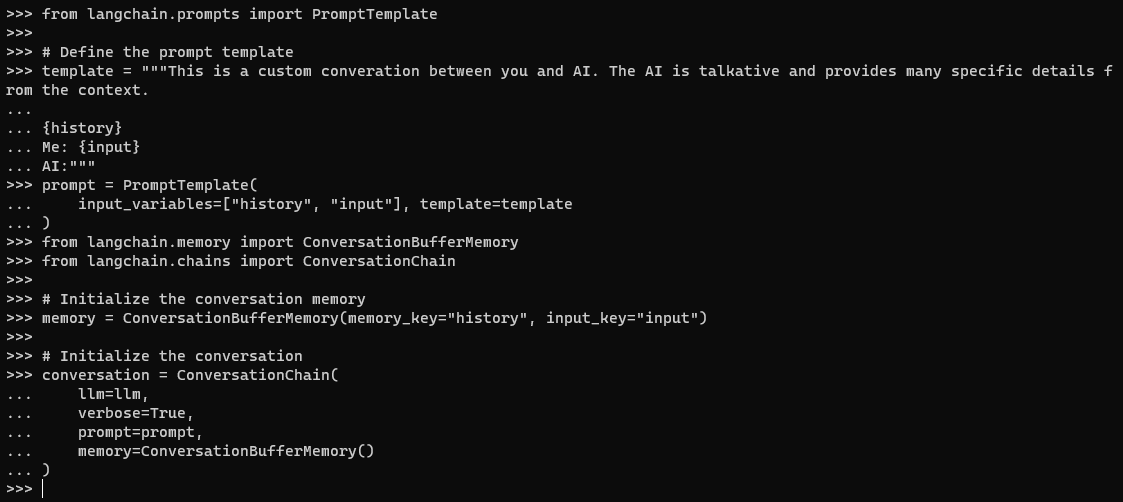

- Adds conversation buffer memory to the chain. This is the modified prompt:

from langchain.prompts import PromptTemplate

# Define the prompt template

template = """This is a custom conversation between you and AI. The AI is talkative and provides many specific details from the context.

{history}

Me: {input}

AI:"""

prompt = PromptTemplate(

input_variables=["history", "input"], template=template

)

- Sets up the memory as such:

from langchain.memory import ConversationBufferMemory

from langchain.chains import ConversationChain

# Initialize the conversation memory

memory = ConversationBufferMemory(memory_key="history", input_key="input")

# Initialize the conversation

conversation = ConversationChain(

llm=llm,

verbose=True,

prompt=prompt,

memory=memory

)
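To make the role of the buffer memory concrete, here is a minimal plain-Python sketch of the same idea: every turn is appended to a text buffer, and the whole buffer is pasted into `{history}` on the next call. `BufferMemory`, `predict`, and `counting_llm` are hypothetical stand-ins, and the "Human:"/"AI:" prefixes are my assumption about how stored turns are labelled.

```python
from typing import Callable, List

TEMPLATE = """{history}
Me: {input}
AI:"""


class BufferMemory:
    """Hypothetical minimal buffer: stores every exchange as plain text lines."""

    def __init__(self) -> None:
        self.lines: List[str] = []

    def load(self) -> str:
        return "\n".join(self.lines)

    def save(self, user_input: str, reply: str) -> None:
        self.lines.append(f"Human: {user_input}")
        self.lines.append(f"AI: {reply}")


def predict(memory: BufferMemory, llm: Callable[[str], str], user_input: str) -> str:
    """Fill the prompt with the stored history, call the model, then record the turn."""
    prompt_text = TEMPLATE.format(history=memory.load(), input=user_input)
    reply = llm(prompt_text)
    memory.save(user_input, reply)
    return reply


def counting_llm(prompt_text: str) -> str:
    """Stand-in model: reports how many earlier human turns appear in the prompt."""
    return f"I see {prompt_text.count('Human:')} earlier message(s)."


memory = BufferMemory()
first = predict(memory, counting_llm, "Hi!")
second = predict(memory, counting_llm, "Still there?")
```

Note that the buffer grows with every turn, so a long chat will eventually exceed the model's context window.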

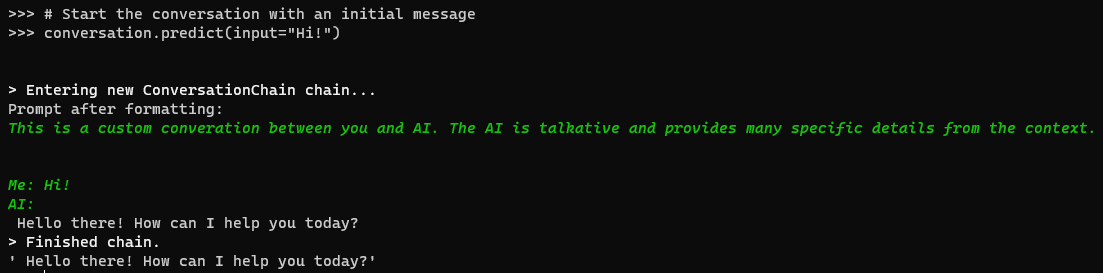

- Chats with the AI:

# Start the conversation with an initial message

conversation.predict(input="Hi!")

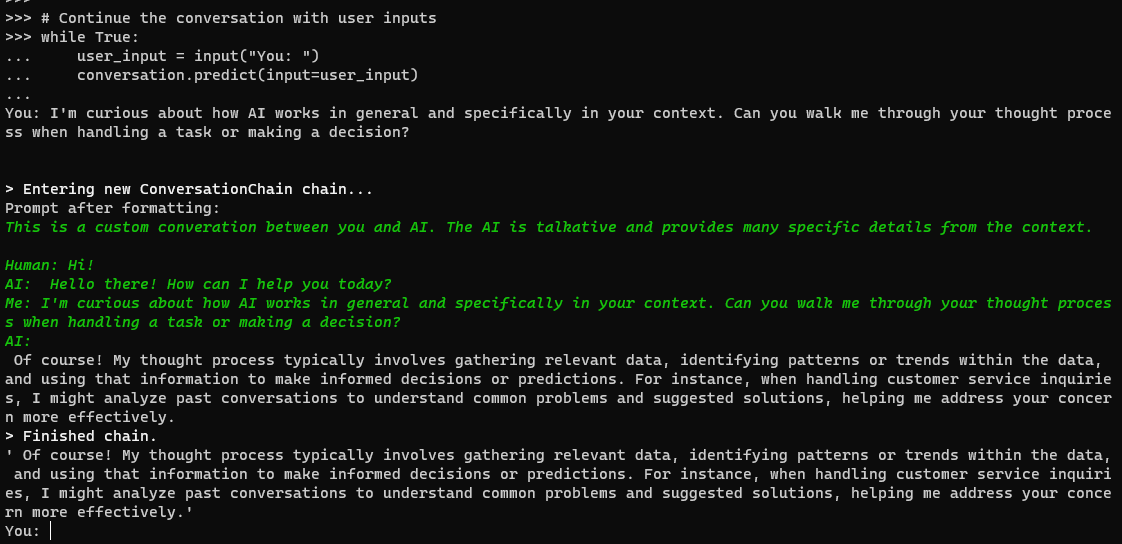

- Continues with user inputs:

# Continue the conversation with user inputs

while True:
    user_input = input("You: ")
    conversation.predict(input=user_input)
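The loop above runs until it is interrupted (for example with Ctrl+C). A possible variation, sketched here with a hypothetical `chat_loop` helper and scripted inputs in place of the keyboard, stops cleanly when the user types "exit" or "quit".

```python
from typing import Callable, Iterable, List


def chat_loop(inputs: Iterable[str], respond: Callable[[str], str]) -> List[str]:
    """Send each input to respond() until the user types 'exit' or 'quit'."""
    replies = []
    for user_input in inputs:
        if user_input.strip().lower() in {"exit", "quit"}:
            break
        replies.append(respond(user_input))
    return replies


# Scripted inputs stand in for repeated input("You: ") calls.
replies = chat_loop(["Hi!", "quit", "never sent"], respond=lambda text: f"echo: {text}")
print(replies)
```

In the interactive session, `respond` could be `lambda text: conversation.predict(input=text)` and `inputs` could be `iter(lambda: input("You: "), None)`, which keeps prompting until the exit word is typed.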

- And that’s all for this post! I will be exploring other areas of LangChain in upcoming posts. Stay tuned!