K8s Pi Cluster with Ansible

By provisioning a Kubernetes Pi cluster with Ansible, you can easily spin up a Raspberry Pi cluster.

Kubernetes Cluster with Ansible

(Total Setup Time: 50 mins)

In this guide, I will configure a Kubernetes cluster using Ansible. It closely follows the Raspbernetes Cluster Installation guide. I will be using 3x Raspberry Pi 4 Model B 8GB as the master nodes and 1x Raspberry Pi 3 Model B as the only worker node.

You need to install Ansible, Flash and kubectl. I installed Ansible and Flash on my elementary OS host as follows:

#Installing Ansible

sudo apt update

sudo apt install software-properties-common

sudo add-apt-repository --yes --update ppa:ansible/ansible

sudo apt install ansible

#Installing Flash

curl -LO https://github.com/hypriot/flash/releases/download/2.7.0/flash

chmod +x flash

sudo mv flash /usr/local/bin/flash

sudo apt-get install -y pv curl python-pip unzip hdparm

sudo pip install awscli
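Before moving on, it can help to confirm that the tools are actually on your PATH. The following is a minimal sketch; the helper name missing_tools is hypothetical and not part of Ansible, Flash or kubectl:

```shell
# Print the name of every tool that cannot be found on PATH.
# missing_tools is a hypothetical helper, not part of any of these projects.
missing_tools() {
    for tool in "$@"; do
        # command -v exits non-zero when the tool is not installed
        command -v "$tool" >/dev/null 2>&1 || echo "$tool"
    done
}
```

Running missing_tools ansible flash kubectl should print nothing when all three are installed, and otherwise list whatever still needs installing.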

Preparation

(20 mins)

First, download the Ubuntu 20.04 image and decompress it:

curl -L "http://cdimage.ubuntu.com/releases/20.04.2/release/ubuntu-20.04.2-preinstalled-server-arm64+raspi.img.xz" -o ~/Downloads/ubuntu-20.04.2-preinstalled-server-arm64+raspi.img.xz

unxz -T 0 ~/Downloads/ubuntu-20.04.2-preinstalled-server-arm64+raspi.img.xz

Second, clone the Raspbernetes repository and make the necessary changes (hostname, users.name, ssh_authorized_keys, eth0.addresses and gateway4) to the cloud-init file. I created four cloud-config.yml files, one for each 64GB SD card:

git clone https://github.com/raspbernetes/k8s-cluster-installation.git

cd k8s-cluster-installation

vi setup/cloud-config.yml

#Create the 4x SD cards ubuntu image using Flash

sudo flash --userdata setup/cloud-config.yml ~/Downloads/ubuntu-20.04.2-preinstalled-server-arm64+raspi.img
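Since the four cloud-config files differ only in a few fields, one way to avoid hand-editing each copy is to keep a single template and substitute the per-node values. This is only a sketch: it assumes you have put the hypothetical placeholders __HOSTNAME__ and __ADDRESS__ into your own copy of setup/cloud-config.yml (the upstream file does not contain them), and render_cloud_config is a made-up helper name:

```shell
# render_cloud_config TEMPLATE HOSTNAME ADDRESS
# Writes the template to stdout with both placeholders filled in.
# __HOSTNAME__ and __ADDRESS__ are hypothetical markers you add yourself.
render_cloud_config() {
    # "|" is used as the sed delimiter so an address like a.b.c.d/24 needs no escaping
    sed -e "s|__HOSTNAME__|$2|g" -e "s|__ADDRESS__|$3|g" "$1"
}
```

For example, render_cloud_config cloud-config.tmpl master1 192.168.100.180/24 > cloud-config-master1.yml would produce the file for the first master, which can then be passed to flash --userdata. The /24 prefix length here is an assumption about the network, not something taken from my setup.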

Third, update the Ansible inventory with the hostname, username and IP address used for each SD card:

vi ansible/inventory

#My setup as reference

[masters]

master1 hostname=master1 ansible_host=192.168.100.180 ansible_user=ubuntu

master2 hostname=master2 ansible_host=192.168.100.181 ansible_user=ubuntu

master3 hostname=master3 ansible_host=192.168.100.182 ansible_user=ubuntu

[workers]

worker1 hostname=worker1 ansible_host=192.168.100.188 ansible_user=ubuntu

#Ensure that you are able to ping all nodes

env ANSIBLE_CONFIG=ansible/ansible.cfg ansible all -m ping
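Each inventory entry above repeats the node name and follows the same pattern, so the lines can be generated rather than typed. A small sketch, where inventory_line is a hypothetical helper and the default user ubuntu matches my setup:

```shell
# inventory_line NAME IP [USER]
# Prints one inventory entry in the same format as the reference above.
inventory_line() {
    printf '%s hostname=%s ansible_host=%s ansible_user=%s\n' \
        "$1" "$1" "$2" "${3:-ubuntu}"
}
```

For example, inventory_line worker1 192.168.100.188 prints the worker entry shown in the reference; the [masters] and [workers] group headers still have to be written around the generated lines.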

Fourth, before the cluster setup, I SSH into each of the nodes and run the following to disable the automatic apt-daily job:

ssh ubuntu@master1

sudo -i

systemctl stop apt-daily.timer

systemctl disable apt-daily.timer

systemctl mask apt-daily.service

systemctl daemon-reload

reboot
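The same commands have to be repeated on every node, so they can also be sent over SSH in a loop. A sketch under two assumptions: the ubuntu user can run sudo without a password prompt, and SSH_CMD is a hypothetical override hook I added so the function can be dry-run with echo:

```shell
# The commands from the step above, joined so they run in one SSH session.
APT_DAILY_CMDS='sudo systemctl stop apt-daily.timer && sudo systemctl disable apt-daily.timer && sudo systemctl mask apt-daily.service && sudo systemctl daemon-reload && sudo reboot'

# prepare_node HOST
# Runs the commands on one node as the ubuntu user.
# Set SSH_CMD=echo to print what would be run instead of connecting.
prepare_node() {
    # The final reboot drops the connection, so a non-zero exit from ssh is expected
    ${SSH_CMD:-ssh} "ubuntu@$1" "$APT_DAILY_CMDS"
}
```

With that in place, for node in master1 master2 master3 worker1; do prepare_node "$node"; done covers the four nodes from my inventory.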

These are some of my customizations:

# group_vars/all.yml

26: keepalived_vip: '192.168.100.200'

32: cri_plugin: docker

38: cni_plugin: 'calico'

# group_vars/masters.yml

16: cluster_kube_proxy_enabled: true

23: cni_plugin: 'calico'

# roles/cluster/defaults/main/main.yml

101: docker: 'unix:///run/containerd/containerd.sock'

# roles/cluster/templates/kubeadm-config.yaml.j2

25: criSocket: unix:///run/containerd/containerd.sock

# roles/cluster/templates/kubeadm-join.yaml.j2

26: criSocket: unix:///run/containerd/containerd.sock

# roles/cni/defaults/main.yml

3: cni_bgp_peer_address: 192.168.100.1

# roles/common/tasks/main.yml (added to line 1)

- name: Bugfix for Ubuntu 20.04
  set_fact:
    ansible_os_family: "debian"

# roles/container-runtime/containerd/defaults/main.yml

9: containerd_use_docker_repo: true

# roles/container-runtime/containerd/tasks/main.yml (added to line 1)

# Instructions: https://docs.docker.com/engine/install/ubuntu/#install-using-the-repository

- name: Add Docker’s official GPG key
  apt_key:
    url: https://download.docker.com/linux/ubuntu/gpg
    state: present

- name: Add apt repository for Docker
  apt_repository:
    repo: deb [arch=amd64 signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu focal stable
    state: present
  register: docker_repository
  until: docker_repository is success

# roles/container-runtime/defaults/main.yml

2: cri_plugin: 'docker'

# roles/container-runtime/docker/defaults/main.yml

12: #- docker-ce

13: #- docker-ce-cli

14: #- containerd.io

15: - docker.io

# roles/container-runtime/vars/main.yml

23: docker: 'unix:///run/containerd/containerd.sock'

# roles/haproxy/templates/haproxy.cfg.j2

22: server {{ hostvars[host]['hostname'] }} {{ hostvars[host]['ansible_host'] }}:6443 check
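The line numbers listed above can drift as the repository changes, so it may be safer to look a key up before editing. A minimal sketch using grep -n, which prints the line number in front of each match; show_setting is a hypothetical helper name:

```shell
# show_setting KEY FILE...
# Prints "line:content" for every line that starts (after any indentation)
# with KEY followed by a colon.
show_setting() {
    key=$1
    shift
    grep -n -E "^[[:space:]]*${key}:" "$@"
}
```

For example, show_setting cri_plugin path/to/group_vars/all.yml shows where cri_plugin is currently defined; adjust the path to wherever the file lives in your checkout.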

Installation

(30 mins)

First, start the Kubernetes cluster setup by running the Ansible playbook:

env ANSIBLE_CONFIG=ansible/ansible.cfg ansible-playbook ansible/playbooks/all.yml

Second, when the installation completes, copy the contents of ansible/playbooks/output/k8s-config.yaml into the kubeconfig on your local machine:

# Create a kube folder in host machine

mkdir ~/.kube

# Paste copied content into config file

vi ~/.kube/config

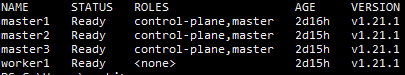

kubectl get nodes
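Instead of pasting the content by hand, the generated file can be copied into place. A sketch, assuming the playbook was run on the same machine so the output file is available locally; install_kubeconfig is a hypothetical helper and the .bak backup is my own addition:

```shell
# install_kubeconfig SRC [DEST]
# Copies a kubeconfig to DEST (default: ~/.kube/config).
install_kubeconfig() {
    src=$1
    dest=${2:-$HOME/.kube/config}
    mkdir -p "$(dirname "$dest")"
    # Keep any existing config rather than overwriting it silently
    if [ -e "$dest" ]; then
        cp "$dest" "$dest.bak"
    fi
    cp "$src" "$dest"
    # The file contains cluster credentials, so restrict it to the owner
    chmod 600 "$dest"
}
```

Calling install_kubeconfig ansible/playbooks/output/k8s-config.yaml from the repository root should leave kubectl get nodes pointing at the new cluster.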

Finally, the Kubernetes Pi cluster with Ansible is ready for play! If you are interested in creating an HA cluster, you may refer to my previous post.

Troubleshooting

if BLKRRPART failed: Device or resource busy

If you face an error similar to the one above, you may comment out line 410 in the flash script:

vi /usr/local/bin/flash

#sudo hdparm -z "$1"

sleep 1m

msg: Only python3 is supported, you’re running 2.7.17 locally

If you encounter this issue, you may install Python 3 and try re-running the playbook with the interpreter setting:

env ANSIBLE_CONFIG=ansible/ansible.cfg ansible-playbook ansible/playbooks/all.yml -e ansible_python_interpreter=/usr/bin/python3

Please ensure that: The cluster has a stable controlPlaneEndpoint address. The certificates that must be shared among control plane instances are provided.

If you face this issue during the Ansible setup, you may need to SSH into master1 (ssh ubuntu@master1) and edit the join configuration:

sudo -i

vi /etc/kubernetes/kubeadm-join.yaml

#modify apiServerEndpoint to

192.168.100.180:6443
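The same edit can be made without opening an editor. A sketch, assuming apiServerEndpoint sits on a single line of the file as key: value; set_api_endpoint is a hypothetical helper name, and it needs to be run as root on the node, like the vi command above:

```shell
# set_api_endpoint FILE HOST:PORT
# Rewrites the value of apiServerEndpoint, keeping the line's indentation.
set_api_endpoint() {
    tmp=$(mktemp)
    # Replace whatever follows "apiServerEndpoint:" with the new endpoint,
    # then write back through cat so the file keeps its owner and mode
    sed "s|^\([[:space:]]*apiServerEndpoint:\).*|\1 $2|" "$1" > "$tmp" && cat "$tmp" > "$1"
    rm -f "$tmp"
}
```

For my cluster this would be set_api_endpoint /etc/kubernetes/kubeadm-join.yaml 192.168.100.180:6443.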