Gitea for K8s Cluster

Running Gitea on a Kubernetes Pi cluster gives you full control over your personal Git repositories.

Gitea for Kubernetes Cluster on Pi

(Total Setup Time: 40 mins)

Similar to the previous post on Gitea for MicroK8s Cluster, I will be setting up Gitea on the newly created Kubernetes cluster.

Setup MySQL

(15 mins)

First, I downloaded the mysql-server Docker image:

docker pull mysql/mysql-server:latest

docker images

Second, with reference from here, I created the required path on my external HDD:

sudo mkdir -p /mnt/hdd/master1k8s/app/mysql/data

sudo vi /mnt/hdd/master1k8s/app/mysql/mysql-pv.yaml

Add the following to mysql-pv.yaml file:

apiVersion: v1
kind: PersistentVolume
metadata:
  name: mysql-pv
  labels:
    type: local
spec:
  storageClassName: manual
  capacity:
    storage: 20Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/mnt/hdd/master1k8s/app/mysql/data"
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-pvc
spec:
  storageClassName: manual
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 20Gi

Next, apply the above yaml file to create mysql persistent volumes with this command:

kubectl apply -f /mnt/hdd/master1k8s/app/mysql/mysql-pv.yaml

Third, add the following to /mnt/hdd/master1k8s/app/mysql/mysql-deployment.yaml:

apiVersion: v1
kind: Service
metadata:
  name: mysql
spec:
  ports:
    - port: 3306
  selector:
    app: mysql
  clusterIP: None
---
apiVersion: apps/v1 # for versions before 1.9.0 use apps/v1beta2
kind: Deployment
metadata:
  name: mysql
spec:
  selector:
    matchLabels:
      app: mysql
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
        - image: mysql/mysql-server:latest
          imagePullPolicy: IfNotPresent
          name: mysql
          env:
            # Use secret in real usage
            - name: MYSQL_ROOT_PASSWORD
              value: password
          ports:
            - containerPort: 3306
              name: mysql
          volumeMounts:
            - name: mysql-persistent-storage
              mountPath: /var/lib/mysql
      volumes:
        - name: mysql-persistent-storage
          persistentVolumeClaim:
            claimName: mysql-pvc

Next, apply the above yaml file to create mysql service and deployment:

kubectl apply -f /mnt/hdd/master1k8s/app/mysql/mysql-deployment.yaml
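The `# Use secret in real usage` comment in the deployment hints at keeping the root password out of the manifest. A minimal sketch of one way to do that, assuming a Secret named `mysql-root` with a key `password` (both names are made up for this illustration and are not part of the original setup):

```shell
# Store the MySQL root password in a Kubernetes Secret.
# "mysql-root" and the key "password" are hypothetical names chosen for this sketch.
create_mysql_secret() {
  # $1: the root password to store
  kubectl create secret generic mysql-root --from-literal=password="$1"
}

# Example call (run once, before applying the deployment):
# create_mysql_secret 'choose-a-strong-password'
```

The MYSQL_ROOT_PASSWORD entry in mysql-deployment.yaml would then swap `value: password` for a `valueFrom.secretKeyRef` that points at name `mysql-root` and key `password`.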

Lastly, you may use the following to check on your deployment:

kubectl describe deployment mysql

kubectl get pods

kubectl logs mysql-56c7cb9d76-w4snt
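The pod name above carries a generated suffix, so it will differ on every cluster and after every restart. As a small sketch, the name can be looked up through the `app=mysql` label from the deployment instead of being copied by hand (the helper name `mysql_pod` is my own):

```shell
# Print the name of the first pod labelled app=mysql
# (the label comes from the pod template in mysql-deployment.yaml).
mysql_pod() {
  kubectl get pods -l app=mysql -o jsonpath='{.items[0].metadata.name}'
}

# Example: show the logs of whichever MySQL pod is currently running.
# kubectl logs "$(mysql_pod)"
```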

Preparing MySQL

(5 mins)

To prepare mysql for Gitea, I need to get the pod name:

kubectl get pods

First, open a shell in the mysql pod:

kubectl exec -it mysql-56c7cb9d76-w4snt -- /bin/bash

Second, connect to mysql as root (the root password set in the deployment is password):

mysql -u root -p

Last, prepare the database (user=gitea, password=gitea, database=giteadb):

CREATE USER 'gitea'@'%' IDENTIFIED BY 'gitea';

CREATE DATABASE giteadb;

GRANT ALL PRIVILEGES ON giteadb.* TO 'gitea';

FLUSH PRIVILEGES;
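To confirm that the account and its privileges were created, the grants can be listed without opening an interactive session. This is only a sketch: the helper name `show_gitea_grants` is hypothetical, and it assumes the root password prompt is answered with the value set in the deployment.

```shell
# List the privileges of the gitea account created above.
show_gitea_grants() {
  # $1: name of the MySQL pod, as printed by "kubectl get pods"
  kubectl exec -it "$1" -- mysql -u root -p -e "SHOW GRANTS FOR 'gitea'@'%';"
}

# Example, using the pod name from this post:
# show_gitea_grants mysql-56c7cb9d76-w4snt
```

The output should include a line granting ALL PRIVILEGES on giteadb to gitea.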

Preparing Gitea with Docker

(15 mins)

You may start to install Gitea with docker by preparing the docker-compose.yml:

mkdir -p /mnt/hdd/master1k8s/docker

sudo vi /mnt/hdd/master1k8s/docker/docker-compose.yml

Next, with reference from here, add the following to docker-compose.yml:

version: "2"

networks:
  gitea:
    external: false

services:
  server:
    image: gitea/gitea:latest
    environment:
      - USER_UID=1000
      - USER_GID=1000
      - DB_TYPE=mysql
      - DB_HOST=mysql:3306
      - DB_NAME=giteadb
      - DB_USER=gitea
      - DB_PASSWD=gitea
    restart: always
    networks:
      - gitea
    volumes:
      - ./gitea:/data
      - /etc/timezone:/etc/timezone:ro
      - /etc/localtime:/etc/localtime:ro
    ports:
      - "3000:3000"
      - "222:22"

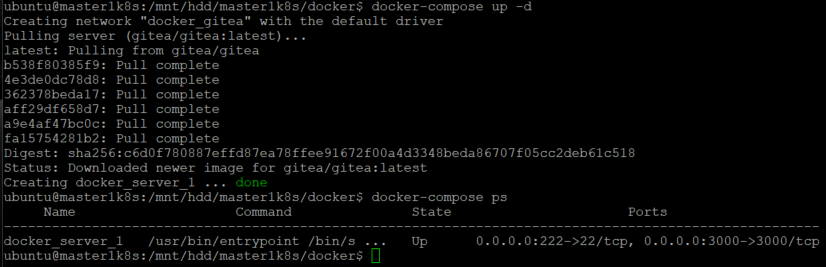

You will get Gitea running with these docker-compose commands:

# Builds, creates, starts containers

docker-compose up -d

# Lists containers

docker-compose ps

# Displays log output

docker-compose logs

# Stops, removes containers

docker-compose down

Serving Gitea

(5 mins)

To access Gitea on the Kubernetes Pi cluster from my laptop, I need to expose it outside the cluster using a NodePort service:

sudo mkdir -p /mnt/hdd/master1k8s/app/gitea/data

sudo vi /mnt/hdd/master1k8s/app/gitea/gitea-deployment.yaml

Next, insert the following into gitea-deployment.yaml:

apiVersion: v1
kind: Service
metadata:
  name: gitea
spec:
  ports:
    - port: 3000
      targetPort: 3000
      nodePort: 30000
      name: gitea-http
    - port: 2222
      targetPort: 22 # the container listens for SSH on port 22 (see containerPort below)
      nodePort: 32222
      name: gitea-ssh
  selector:
    app: gitea
  type: NodePort
---
apiVersion: apps/v1 # for versions before 1.9.0 use apps/v1beta2
kind: Deployment
metadata:
  name: gitea
spec:
  replicas: 1
  selector:
    matchLabels:
      app: gitea
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: gitea
    spec:
      containers:
        - image: gitea/gitea:latest
          imagePullPolicy: IfNotPresent
          name: gitea
          ports:
            - containerPort: 3000
              name: gitea-http
            - containerPort: 22
              name: gitea-ssh
          volumeMounts:
            - name: gitea-data
              mountPath: /data
      volumes:
        - name: gitea-data
          hostPath:
            path: /mnt/hdd/master1k8s/app/gitea
            type: Directory
      nodeSelector:
        git-data-storage: "true"

Apply the above yaml file to create Gitea service and deployment:

kubectl apply -f /mnt/hdd/master1k8s/app/gitea/gitea-deployment.yaml

Since the Gitea deployment has a nodeSelector that requires the git-data-storage label, label the node (replace master1k8s with your node name) so that the pod can be scheduled:

# Labels node

kubectl label nodes master1k8s git-data-storage=true

# Checks gitea deployment

kubectl describe deployment gitea

# Deletes deployment and service, if needed

kubectl delete deployment,svc gitea

# Another way to delete

kubectl delete -f /mnt/hdd/master1k8s/app/gitea/gitea-deployment.yaml
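If the Gitea pod stays in Pending, the nodeSelector is the first thing worth checking. A small sketch of two checks, with helper names of my own choosing:

```shell
# List the nodes that carry the label the Gitea nodeSelector asks for.
labelled_nodes() {
  kubectl get nodes -l git-data-storage=true -o name
}

# Show the Gitea pod together with the node it was scheduled on
# (or its Pending status when no node matches).
gitea_pod_status() {
  kubectl get pods -l app=gitea -o wide
}
```

With the label from this post applied, `labelled_nodes` should print `node/master1k8s`; an empty result means no node currently satisfies the selector.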

Check the gitea and mysql services with this command (mysql is a headless service, so it is reached by its service name rather than by a cluster IP):

kubectl get svc -o wide

This is my output:

NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)                         AGE    SELECTOR
gitea        NodePort    10.110.40.142   <none>        3000:30000/TCP,2222:32222/TCP   4m6s   app=gitea
kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP                         105m   <none>
mysql        ClusterIP   None            <none>        3306/TCP                        31m    app=mysql

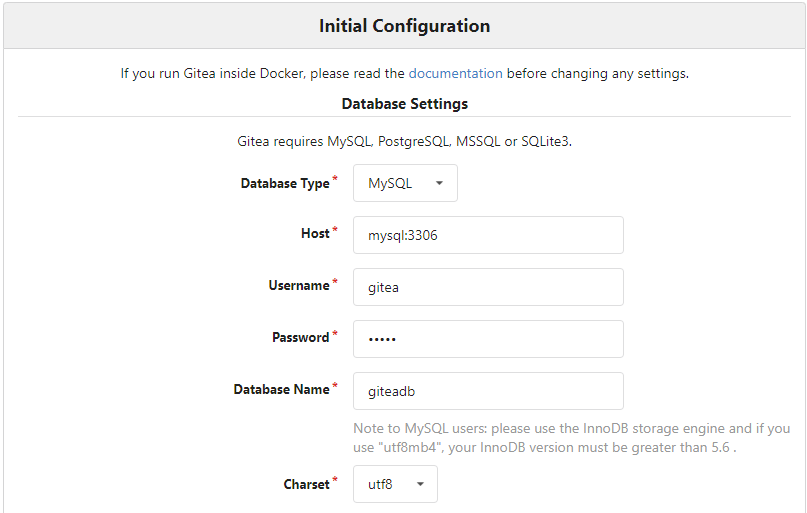
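Because the mysql service has no cluster IP, Gitea has to find the database through cluster DNS under the name mysql. The sketch below checks that lookup from inside the Gitea pod. It assumes the gitea/gitea image ships a BusyBox `nslookup`, which I have not verified for every image version, and the helper name is hypothetical.

```shell
# Resolve the mysql service name from inside the Gitea pod.
# Assumes nslookup is available in the container; use another lookup tool if it is not.
resolve_mysql_from_gitea() {
  kubectl exec deploy/gitea -- nslookup mysql
}
```

A successful lookup returns the IP address of the mysql pod, which is what the host value mysql:3306 relies on.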

Next, follow the Gitea installation page (host=mysql:3306, username=gitea, password=gitea, database=giteadb):

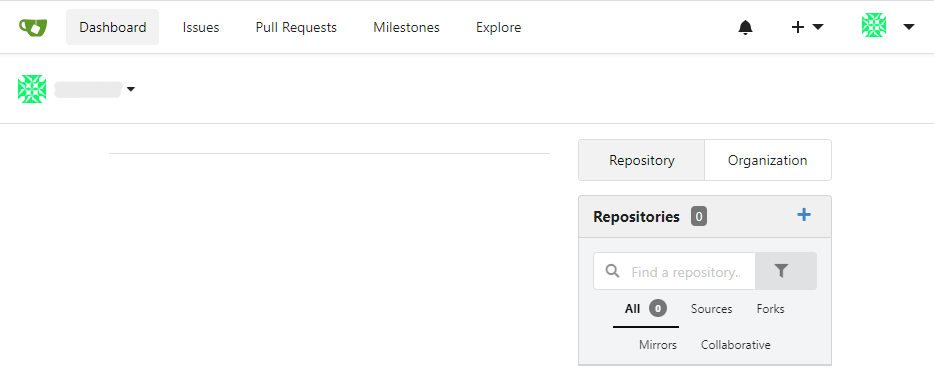

Finally, Gitea on the Kubernetes Pi cluster is properly set up, and you may proceed to register a new account and log in!
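The web UI is served on NodePort 30000 of the node. As a sketch, the address to open in the browser can be assembled from the node's internal IP. The helper names are my own, and the code assumes the first node listed is one your laptop can reach.

```shell
# Print the InternalIP of the first node in the cluster.
node_ip() {
  kubectl get nodes -o jsonpath='{.items[0].status.addresses[?(@.type=="InternalIP")].address}'
}

# Build the URL of the Gitea web UI on NodePort 30000.
gitea_url() {
  echo "http://$(node_ip):30000"
}

# Example:
# gitea_url
```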

Troubleshooting

ERROR 1045 (28000): Access denied for user 'root'@'localhost'

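When accessing mysql from within the mysql pod, I encountered this error. After some troubleshooting, I realized that during the few rounds of mysql setup, the previous data folder, /mnt/hdd/master1k8s/app/mysql/data, was not properly cleaned up. The MYSQL_ROOT_PASSWORD value is only applied when the data directory is initialized for the first time, so leftover data keeps whatever root password an earlier attempt set.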
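One way to start over with a clean data folder is sketched below. It assumes that losing everything in the folder is acceptable (this removes all databases, including giteadb), and the helper name `reset_mysql_data` is hypothetical.

```shell
# Stop MySQL, empty its data folder, and start it again so that
# the database is initialized from scratch.
reset_mysql_data() {
  data_dir="$1"  # host path backing the mysql-pv volume

  # Scale down and wait until the old pod is gone before touching the files.
  kubectl scale deployment mysql --replicas=0
  kubectl wait --for=delete pod -l app=mysql --timeout=60s

  # Remove the contents of the folder but keep the folder itself.
  sudo find "$data_dir" -mindepth 1 -delete

  kubectl scale deployment mysql --replicas=1
}

# Example, using the path from this post:
# reset_mysql_data /mnt/hdd/master1k8s/app/mysql/data
```

After the pod restarts, the gitea user and giteadb database need to be created again.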