Porting Llama3.java to Micronaut

Building on my previous post on Micronaut-Llama2, I have taken on a similar project: porting llama3.java into a Micronaut application. The goal is to make it straightforward to load a large language model (LLM) stored in the GGUF format and serve it from a Micronaut-based Java microservice or application.

Getting Started

Below is the environment setup I used for this project:

java --version
# java 23.0.1 2024-10-15
# Java(TM) SE Runtime Environment Oracle GraalVM 23.0.1+11.1 (build 23.0.1+11-jvmci-b01)
# Java HotSpot(TM) 64-Bit Server VM Oracle GraalVM 23.0.1+11.1 (build 23.0.1+11-jvmci-b01, mixed mode, sharing)

mn --version
# Micronaut Version: 4.7.1

Creating a Micronaut Project with GraalVM Support

To get started, I created a new Micronaut application with built-in support for GraalVM, Gradle as the build tool, and Java as the programming language:

mn create-app example.micronaut.llama3 --features=graalvm --build=gradle --lang=java --test=junit

Project Structure

Below is the resulting project structure, modularized for scalability and clarity:

micronaut-llama3/
├── src/
│   └── main/
│       ├── java/
│       │   └── example/
│       │       └── micronaut/
│       │           ├── controller/
│       │           ├── gguf/
│       │           ├── model/
│       │           │   └── tensor/
│       │           ├── service/
│       │           ├── utils/
│       │           └── Application.java
│       └── resources/
│           ├── application.properties
│           └── logback.xml
└── build.gradle

Application Configuration

Key configurations for the application are defined in application.properties:

micronaut.application.name=llama3
llama.BatchSize=16
llama.VectorBitSize=0
llama.PreloadGGUF=Llama-3.2-1B-Instruct-Q4_0.gguf
options.model_path=Llama-3.2-1B-Instruct-Q4_0.gguf
options.temperature=0.1f
options.topp=0.95f
options.seed=-1
options.max_tokens=512
options.stream=true
options.echo=true
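To make the `options.*` keys concrete, here is a minimal plain-Java sketch of how they could be read into a typed record. The `OptionsLoader` class and its nested `Options` record are hypothetical names used only for illustration; the project itself presumably relies on Micronaut's configuration binding rather than hand-rolled parsing like this. One detail worth noting: `Float.parseFloat` accepts the trailing `f` used in the file, so `0.1f` parses as `0.1`.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

final class OptionsLoader {

    // Hypothetical typed view of the `options.*` keys shown above.
    record Options(String modelPath, float temperature, float topp,
                   long seed, int maxTokens, boolean stream, boolean echo) {}

    // Parses properties text into an Options record.
    // This sketch assumes every key is present; a missing key fails fast.
    static Options parse(String propertiesText) {
        Properties p = new Properties();
        try {
            p.load(new StringReader(propertiesText));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return new Options(
                p.getProperty("options.model_path"),
                Float.parseFloat(p.getProperty("options.temperature")),
                Float.parseFloat(p.getProperty("options.topp")),
                Long.parseLong(p.getProperty("options.seed")),
                Integer.parseInt(p.getProperty("options.max_tokens")),
                Boolean.parseBoolean(p.getProperty("options.stream")),
                Boolean.parseBoolean(p.getProperty("options.echo")));
    }

    public static void main(String[] args) {
        String text = String.join("\n",
                "options.model_path=Llama-3.2-1B-Instruct-Q4_0.gguf",
                "options.temperature=0.1f",
                "options.topp=0.95f",
                "options.seed=-1",
                "options.max_tokens=512",
                "options.stream=true",
                "options.echo=true");
        System.out.println(parse(text));
    }
}
```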

Gradle Customization

To enable GraalVM native-image builds and optimize runtime performance, the build.gradle file was enhanced as follows:

dependencies {
    annotationProcessor("org.projectlombok:lombok")
    compileOnly("org.projectlombok:lombok")
    compileOnly("io.projectreactor:reactor-core")
}

application {
    mainClass = "example.micronaut.Application"
    // Preview features and the incubating Vector API must be enabled at run time.
    applicationDefaultJvmArgs = [
        '--enable-preview',
        '--add-modules', 'jdk.incubator.vector',
    ]
}

java {
    sourceCompatibility = JavaVersion.toVersion("23")
    targetCompatibility = JavaVersion.toVersion("23")
}

// The same flags are required when compiling.
tasks.withType(JavaCompile) {
    options.compilerArgs += [
        '--enable-preview',
        '--add-modules', 'jdk.incubator.vector'
    ]
}

// And again for every JavaExec task, such as `run`.
tasks.withType(JavaExec) {
    jvmArgs += [
        '--enable-preview',
        '--add-modules', 'jdk.incubator.vector'
    ]
}

graalvmNative {
    toolchainDetection = true
    binaries {
        main {
            imageName = "application"
            mainClass = "example.micronaut.Application"
            buildArgs.addAll([
                '--enable-preview',
                '--add-modules=jdk.incubator.vector',
                '-O3',
                '-march=x86-64',
                // Uses the same class as mainClass above.
                '--initialize-at-build-time=example.micronaut.Application',
                '--enable-monitoring=heapdump,jfr',
                '-H:+UnlockExperimentalVMOptions',
                '-H:+ForeignAPISupport',
                '-H:+ReportExceptionStackTraces',
            ])
        }
    }
}

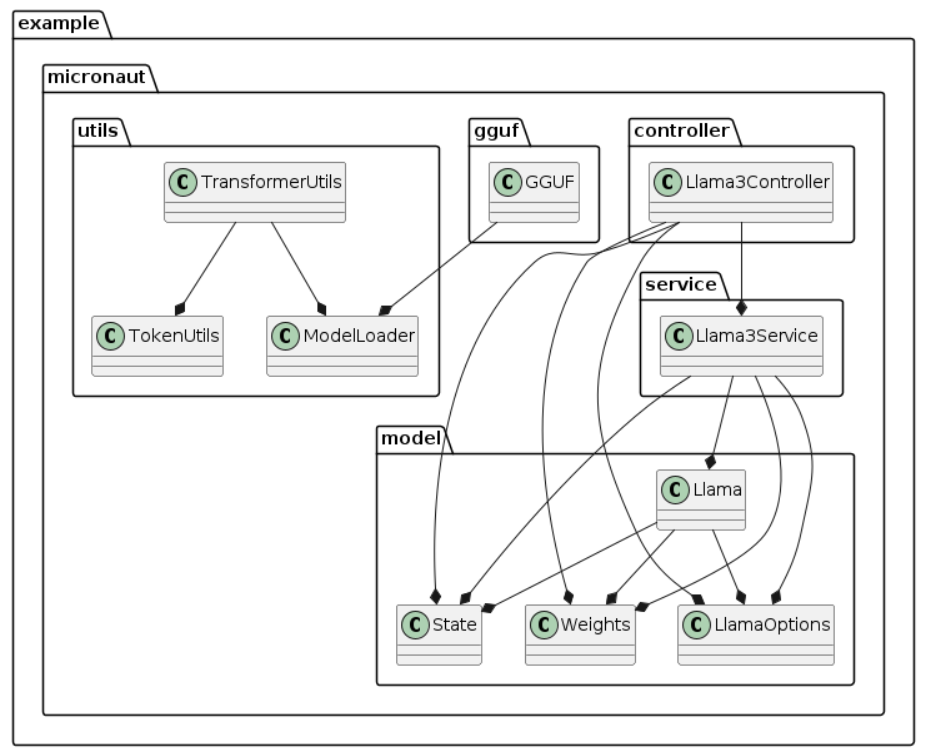

Class Diagram

The class diagram illustrates the high-level structure of the Llama3 Micronaut application, showcasing the relationships between core components.

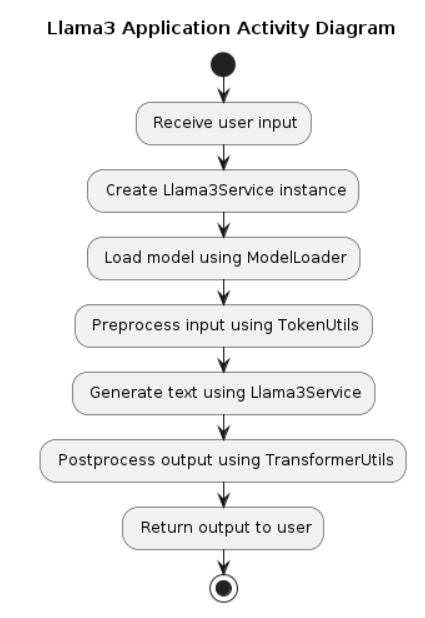

Activity Diagram

The activity diagram outlines the typical workflow of the Llama3 Micronaut application from user input to model inference:

Refactoring llama3.java

Building upon the original llama3.java, this project refactors and modularizes the codebase into well-defined, logical packages to enhance maintainability and integration within a Micronaut application. Below are the highlights of each package:

GGUF Package

GGUF is a binary format for efficient model storage and inference. The GGUF package encapsulates all related data structures, enhancing the modularity of the codebase.
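To give a feel for what the format looks like on disk, here is a minimal sketch that reads the fixed-size GGUF header. It assumes the layout described in the public GGUF specification for version 2 and later: a 4-byte `GGUF` magic, a 32-bit version, then 64-bit tensor and metadata key-value counts, all little-endian. The `GgufHeaderSketch` class and `Header` record are hypothetical names for illustration and are not taken from the project's gguf package.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.charset.StandardCharsets;

final class GgufHeaderSketch {

    // Hypothetical record holding the three header fields after the magic.
    record Header(int version, long tensorCount, long metadataKvCount) {}

    // Reads the header from the buffer's current position without moving it.
    static Header read(ByteBuffer buf) {
        // duplicate() resets byte order to big-endian, so set it explicitly.
        ByteBuffer le = buf.duplicate().order(ByteOrder.LITTLE_ENDIAN);
        byte[] magic = new byte[4];
        le.get(magic);
        if (!"GGUF".equals(new String(magic, StandardCharsets.US_ASCII))) {
            throw new IllegalArgumentException("Not a GGUF file: bad magic");
        }
        int version = le.getInt();
        long tensorCount = le.getLong();
        long metadataKvCount = le.getLong();
        return new Header(version, tensorCount, metadataKvCount);
    }

    public static void main(String[] args) {
        // Build a synthetic 24-byte header instead of opening a real model file.
        ByteBuffer buf = ByteBuffer.allocate(24).order(ByteOrder.LITTLE_ENDIAN);
        buf.put("GGUF".getBytes(StandardCharsets.US_ASCII));
        buf.putInt(3).putLong(2L).putLong(5L);
        buf.flip();
        System.out.println(read(buf));
    }
}
```

In a real loader the buffer would typically come from a memory-mapped model file, and the metadata key-value pairs and tensor descriptors would be parsed right after these fields.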

Model Package

The model.tensor subpackage focuses on tensor operations, sampling techniques, and token processing, which are critical for efficient model inference. The main model package encompasses all core model definitions and associated records required for the project.
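The `options.temperature` and `options.topp` settings from application.properties control how the next token is sampled. As a rough illustration of the idea, and not the project's actual sampler, the sketch below applies a temperature-scaled softmax to the logits and then performs top-p (nucleus) sampling. The class and method names are hypothetical.

```java
import java.util.Arrays;
import java.util.Comparator;
import java.util.Random;

final class TopPSamplerSketch {

    // Softmax over logits divided by temperature (temperature must be > 0).
    // Subtracting the max logit keeps exp() numerically stable.
    static float[] softmax(float[] logits, float temperature) {
        float max = Float.NEGATIVE_INFINITY;
        for (float l : logits) max = Math.max(max, l);
        float[] probs = new float[logits.length];
        float sum = 0f;
        for (int i = 0; i < logits.length; i++) {
            probs[i] = (float) Math.exp((logits[i] - max) / temperature);
            sum += probs[i];
        }
        for (int i = 0; i < probs.length; i++) probs[i] /= sum;
        return probs;
    }

    // Keeps the smallest set of most probable tokens whose cumulative
    // probability reaches topp, then samples from that set.
    static int sampleTopP(float[] probs, float topp, Random rng) {
        Integer[] order = new Integer[probs.length];
        for (int i = 0; i < order.length; i++) order[i] = i;
        // Sort token indices by descending probability.
        Arrays.sort(order, Comparator.comparingDouble((Integer i) -> -probs[i]));

        float cumulative = 0f;
        int last = 0;
        for (int k = 0; k < order.length; k++) {
            cumulative += probs[order[k]];
            last = k;
            if (cumulative >= topp) break;
        }

        // Draw within the truncated mass so no renormalization pass is needed.
        float r = rng.nextFloat() * cumulative;
        float cdf = 0f;
        for (int k = 0; k <= last; k++) {
            cdf += probs[order[k]];
            if (r < cdf) return order[k];
        }
        return order[last]; // guard against floating-point rounding
    }

    public static void main(String[] args) {
        float[] probs = softmax(new float[] {1f, 5f, 2f}, 0.1f);
        System.out.println(sampleTopP(probs, 0.95f, new Random(42)));
    }
}
```

With a low temperature such as 0.1, nearly all probability mass lands on the highest logit, so the nucleus usually contains a single token and generation is close to greedy decoding.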

Utils Package

Utility classes centralize helper methods for model loading, token generation, and runtime performance tracking, streamlining development.

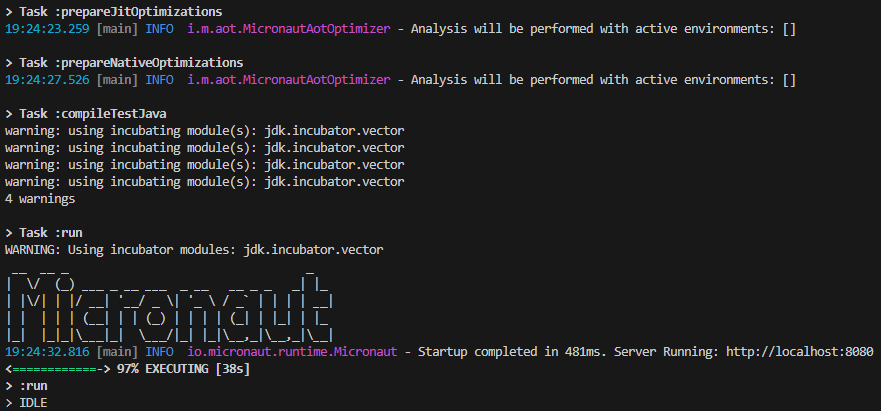

Running the Application

To build and run the application (the command below uses the Windows wrapper; on Linux or macOS, use ./gradlew instead):

.\gradlew clean build run

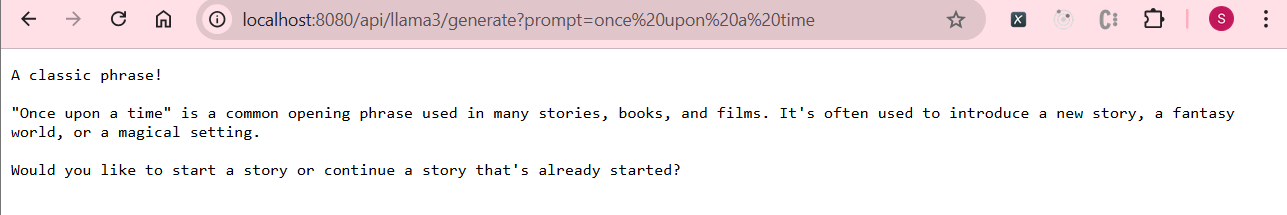

You can test the API by sending a request to:

http://localhost:8080/api/llama3/generate?prompt=once%20upon%20a%20time
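The same request can be sent from Java with the JDK's built-in `java.net.http.HttpClient`. This is a minimal sketch that assumes the application is running locally on port 8080 and that the body can be read as text in one piece; with `options.stream=true` the server may stream tokens instead, and the exact response format is not shown here. `GenerateClientSketch` and `buildUri` are hypothetical names.

```java
import java.io.IOException;
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

final class GenerateClientSketch {

    static final String BASE = "http://localhost:8080/api/llama3/generate";

    // Builds the request URI with a percent-encoded prompt.
    static URI buildUri(String prompt) {
        // URLEncoder emits '+' for spaces (form encoding); use %20 to match the URL above.
        String encoded = URLEncoder.encode(prompt, StandardCharsets.UTF_8).replace("+", "%20");
        return URI.create(BASE + "?prompt=" + encoded);
    }

    public static void main(String[] args) throws InterruptedException {
        HttpClient client = HttpClient.newHttpClient();
        HttpRequest request = HttpRequest.newBuilder(buildUri("once upon a time")).GET().build();
        try {
            HttpResponse<String> response =
                    client.send(request, HttpResponse.BodyHandlers.ofString());
            System.out.println("Status: " + response.statusCode());
            System.out.println(response.body());
        } catch (IOException e) {
            // Most likely cause: the Micronaut application is not running.
            System.err.println("Request failed: " + e);
        }
    }
}
```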

Sample Response:

Code Repository

The complete implementation is available on GitHub: Micronaut-Llama3. Feel free to explore, clone, and integrate it into your projects!