OpenVINO is an open-source toolkit for optimizing and deploying deep learning models. Optimum-Intel is the interface between the Hugging Face Transformers and Diffusers libraries and Intel's tools and libraries, including OpenVINO, and it makes it easier to accelerate end-to-end pipelines on Intel architectures. This post documents my experience setting up and running the example code on my aging laptop. The example uses Quantization-aware Training (QAT) and the Token Merging method to optimize the UNet model in the Stable Diffusion pipeline.
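Quantization-aware Training simulates low-precision inference during training by inserting "fake quantization" into the forward pass. Each value is rounded onto an 8-bit integer grid and immediately mapped back to floating point, so the network learns to tolerate the rounding error it will see after conversion. During backpropagation the rounding is usually treated as the identity (the straight-through estimator), so gradients still flow. The plain-Python sketch below shows only that quantize-dequantize step for asymmetric unsigned 8-bit quantization. The function names are my own, and this is not how NNCF or Optimum-Intel implement it internally.

```python
def quant_params(x_min: float, x_max: float, num_bits: int = 8) -> tuple[float, int]:
    """Compute scale and zero point for asymmetric quantization of [x_min, x_max]."""
    qmin, qmax = 0, 2 ** num_bits - 1
    # Make sure the range contains 0.0 so that zero is exactly representable.
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)
    # Fall back to a scale of 1.0 if the range is empty (all values are zero).
    scale = (x_max - x_min) / (qmax - qmin) or 1.0
    zero_point = round(qmin - x_min / scale)
    return scale, max(qmin, min(qmax, zero_point))


def fake_quantize(x: float, scale: float, zero_point: int, num_bits: int = 8) -> float:
    """Round x onto the integer grid, clamp it, and map it back to float."""
    qmin, qmax = 0, 2 ** num_bits - 1
    q = max(qmin, min(qmax, round(x / scale) + zero_point))
    return (q - zero_point) * scale


weights = [-0.51, -0.02, 0.0, 0.33, 1.27]
scale, zp = quant_params(min(weights), max(weights))
for w in weights:
    # The printed value is what the layer "sees" during QAT instead of the raw weight.
    print(f"{w:+.4f} -> {fake_quantize(w, scale, zp):+.4f}")
```

For values inside the calibrated range, the error introduced by `fake_quantize` is at most half a quantization step (`scale / 2`).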

My aging graphics card (GeForce GT 750M) does not have enough resources for this workload, so the code in this post is tailored for CPU-based inference.

Environment Setup

Start by installing conda and creating an environment for the project:

```bash
wget https://repo.anaconda.com/archive/Anaconda3-2022.05-Linux-x86_64.sh
chmod +x Anaconda3-2022.05-Linux-x86_64.sh
bash Anaconda3-2022.05-Linux-x86_64.sh

# Find your shell name with `echo $0` (e.g., bash) and use it below, e.g., shell.bash.
# Replace /home/pi/anaconda3 with your own install path if it differs.
eval "$(/home/pi/anaconda3/bin/conda shell.YOUR_SHELL_NAME hook)"
conda init

conda create -n openvino python=3.10
conda activate openvino
```
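After activating the new environment with `conda activate openvino`, a quick sanity check from Python can confirm you are in the right place. This is a hypothetical helper of my own. It relies only on the `CONDA_DEFAULT_ENV` variable, which conda sets to the active environment's name, and on `sys.version_info`.

```python
import os
import sys


def check_environment(expected_env: str = "openvino",
                      expected_python: tuple[int, int] = (3, 10),
                      environ=os.environ,
                      version_info=sys.version_info) -> list[str]:
    """Return a list of problems; an empty list means the environment looks right."""
    problems = []
    # `conda activate` exports the active environment's name as CONDA_DEFAULT_ENV.
    active = environ.get("CONDA_DEFAULT_ENV")
    if active != expected_env:
        problems.append(f"active conda env is {active!r}, expected {expected_env!r}")
    if tuple(version_info[:2]) != expected_python:
        found = ".".join(map(str, version_info[:2]))
        wanted = ".".join(map(str, expected_python))
        problems.append(f"Python {found} found, expected {wanted}")
    return problems


if __name__ == "__main__":
    for problem in check_environment():
        print("WARNING:", problem)
```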

Next, install the prerequisites:

```bash
sudo apt install vim git
```

Referencing the guidelines provided in Optimum-Intel’s GitHub repository, the repository is cloned using the following commands:

git clone https://github.com/huggingface/optimum-intel.git

cd optimum-intel/examples/openvino/stable-diffusion

# Install Optimum-Intel for OpenVINO

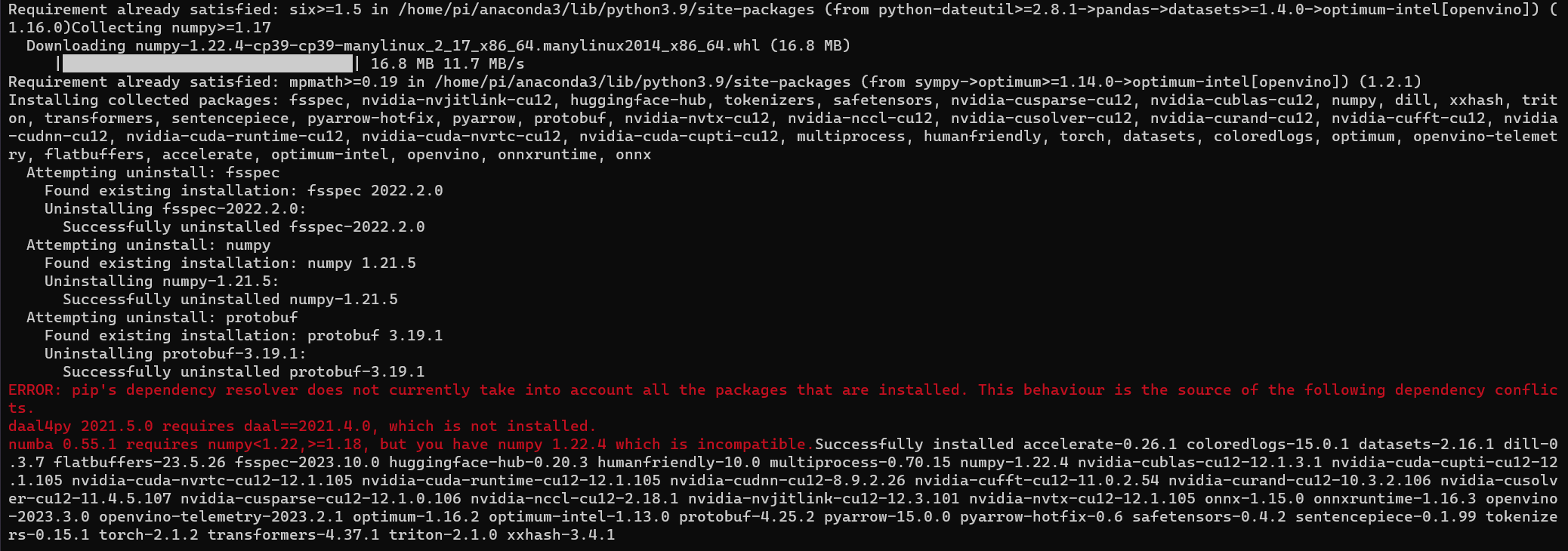

pip install optimum-intel[openvino]

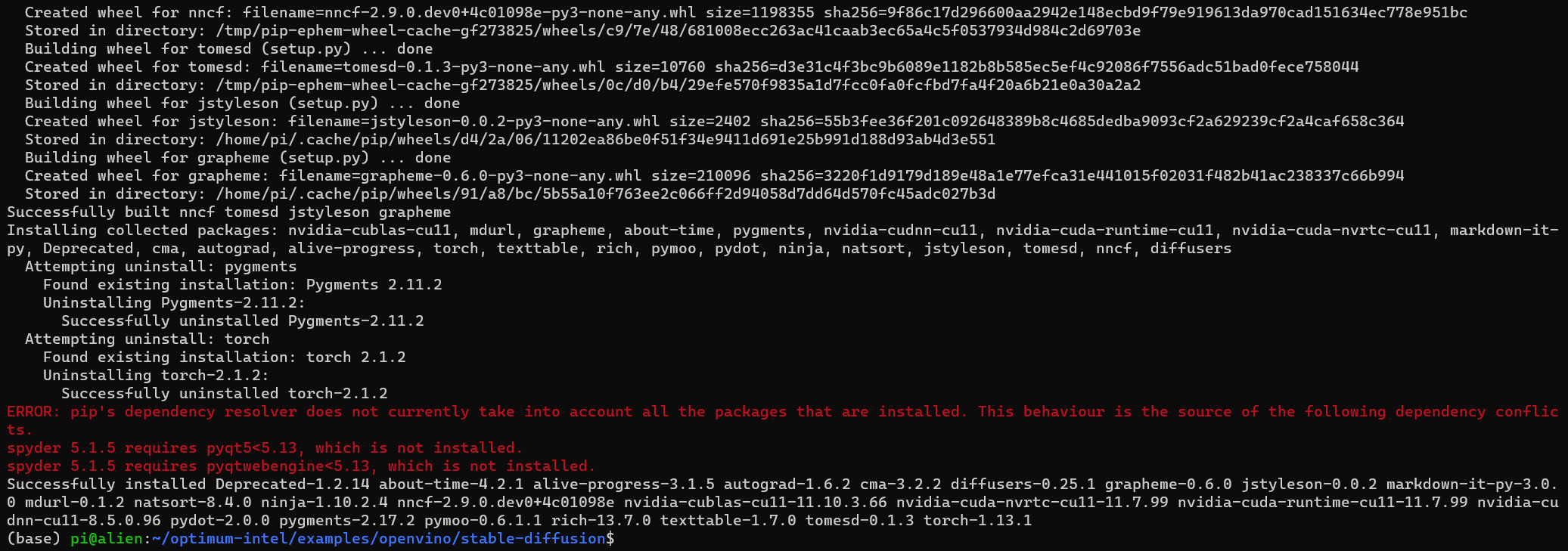

pip install -r requirements.txt
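Once the installs finish, you can confirm that the main packages are importable by looking them up with `importlib.util.find_spec`, which locates a top-level module without importing it. The module list below is my assumption about what the example needs, and `missing_modules` is a hypothetical helper name.

```python
import importlib.util

# Top-level modules this walkthrough relies on (an assumption; adjust as needed).
REQUIRED_MODULES = ["optimum", "openvino", "diffusers", "transformers"]


def missing_modules(names):
    """Return the names that cannot be found on the current Python path."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    print("All modules found." if not missing else f"Missing: {', '.join(missing)}")
```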

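A correction is needed in requirements.txt. The tomesd entry should point to the `openvino` ref of AlexKoff88's fork, and `pip install -r requirements.txt` should then be re-run:

```text
tomesd @ git+https://github.com/AlexKoff88/tomesd.git@openvino
```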
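If you prefer to apply the fix from a script instead of editing by hand, here is a hypothetical helper (the names are mine). It replaces any non-comment line that mentions `tomesd` with the corrected entry, or appends the entry if there is none. It assumes you run it from `optimum-intel/examples/openvino/stable-diffusion`. Afterwards, re-run `pip install -r requirements.txt`.

```python
from pathlib import Path

# The corrected dependency line from this walkthrough.
TOMESD_REQUIREMENT = "tomesd @ git+https://github.com/AlexKoff88/tomesd.git@openvino"


def fix_tomesd_text(text: str) -> str:
    """Return requirements text with every tomesd entry replaced by the fixed line."""
    lines = text.splitlines()
    fixed = [
        TOMESD_REQUIREMENT
        if "tomesd" in line.lower() and not line.lstrip().startswith("#")
        else line
        for line in lines
    ]
    if TOMESD_REQUIREMENT not in fixed:
        fixed.append(TOMESD_REQUIREMENT)
    return "\n".join(fixed) + "\n"


def fix_requirements_file(path: str = "requirements.txt") -> None:
    """Rewrite the requirements file in place."""
    req = Path(path)
    req.write_text(fix_tomesd_text(req.read_text()))
```

Keeping the text transformation separate from the file I/O makes it easy to preview the result before overwriting anything.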

Running the Pre-Optimized Models

To demonstrate the capabilities of the pre-optimized models, consider the following Python scripts:

General-purpose image generation model:

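```python
from optimum.intel.openvino import OVStableDiffusionPipeline

# Load the quantized model without compiling it yet (compile=False),
# so that the input shapes can be fixed first.
pipe = OVStableDiffusionPipeline.from_pretrained("OpenVINO/stable-diffusion-2-1-quantized", compile=False)

# Reshape to static input shapes: one 512x512 image per prompt.
pipe.reshape(batch_size=1, height=512, width=512, num_images_per_prompt=1)

# Compile the models for the target device (CPU by default).
pipe.compile()

prompt = "sailing ship in storm by Rembrandt"
output = pipe(prompt, num_inference_steps=50, output_type="pil")
output.images[0].save("sailing-ship.png")
```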
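`pipe.reshape()` fixes the model inputs to static shapes before `pipe.compile()`, which, as I understand it, lets OpenVINO optimize for that one resolution. To see what those shapes mean for the UNet, the sketch below computes the latent tensor shape. It assumes standard Stable Diffusion: 4 latent channels, a VAE that downsamples by a factor of 8, and classifier-free guidance, which runs conditional and unconditional latents through the UNet together and so doubles the batch. `unet_latent_shape` is a hypothetical helper and not part of Optimum-Intel.

```python
def unet_latent_shape(batch_size: int, height: int, width: int,
                      num_images_per_prompt: int = 1,
                      guidance: bool = True,
                      latent_channels: int = 4,
                      vae_scale_factor: int = 8) -> tuple[int, int, int, int]:
    """Return the (N, C, H, W) latent shape fed to the UNet at each denoising step."""
    if height % vae_scale_factor or width % vae_scale_factor:
        raise ValueError(f"height and width must be divisible by {vae_scale_factor}")
    n = batch_size * num_images_per_prompt
    if guidance:
        n *= 2  # conditional + unconditional copies for classifier-free guidance
    return (n, latent_channels, height // vae_scale_factor, width // vae_scale_factor)


print(unet_latent_shape(batch_size=1, height=512, width=512))  # (2, 4, 64, 64)
```

Under these assumptions, the 512x512 setting above corresponds to UNet latents of shape (2, 4, 64, 64). Changing `height` or `width` in `pipe.reshape()` changes the last two dimensions accordingly.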

Pokemon generation:

```python
from optimum.intel.openvino import OVStableDiffusionPipeline

pipe = OVStableDiffusionPipeline.from_pretrained("OpenVINO/Stable-Diffusion-Pokemon-en-quantized", compile=False)
pipe.reshape(batch_size=1, height=512, width=512, num_images_per_prompt=1)
pipe.compile()

prompt = "cartoon bird"
output = pipe(prompt, num_inference_steps=50, output_type="pil")
output.images[0].save("cartoon-bird.png")
```

The result looks like a blend of Pidgey and Swellow.

```python
prompt = "cartoon insect"
output = pipe(prompt, num_inference_steps=50, output_type="pil")
output.images[0].save("cartoon-insect.png")
```

This one resembles Butterfree.
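On a CPU, loading and compiling the pipeline is the slow part, so when trying several prompts it makes sense to keep one compiled `pipe` and loop over them. The helper below is a hypothetical sketch. It accepts any callable with the same call signature as the pipeline used above and saves the first image for each prompt.

```python
from typing import Callable


def generate_images(pipe: Callable, prompts: dict[str, str],
                    num_inference_steps: int = 50) -> list[str]:
    """Run one compiled pipeline over several prompts, saving one image per prompt.

    `prompts` maps each prompt to its output filename. Returns the saved filenames.
    """
    saved = []
    for prompt, filename in prompts.items():
        output = pipe(prompt, num_inference_steps=num_inference_steps, output_type="pil")
        output.images[0].save(filename)
        saved.append(filename)
    return saved


# Usage with the Pokemon pipeline compiled above:
# generate_images(pipe, {"cartoon bird": "cartoon-bird.png",
#                        "cartoon insect": "cartoon-insect.png"})
```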

Have fun creating and evolving your own Pokemon!