Building Your First Kubeflow Pipeline: A Step-by-Step Guide

Series: Machine Learning Operations

Kubeflow Pipelines (KFP) is a platform for building and deploying scalable machine learning (ML) workflows based on Docker containers. It lets data scientists and ML engineers author workflows in Python, manage and visualize pipeline runs, and use compute resources efficiently. With KFP you can write custom ML components or reuse existing ones. Pipelines compile to a platform-neutral intermediate representation (IR) in YAML, which makes them portable across platforms. In this post, I'll share what I learned about KFP v2.

```shell
pip list | grep kfp

# Current versions
kfp                2.8.0
kfp-pipeline-spec  0.3.0
kfp-server-api     2.0.5
```
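If you would rather check these versions from inside a notebook than from a shell, a minimal sketch using only the standard library's `importlib.metadata` could look like this. The helper names `installed_versions` and `is_kfp_v2` are my own, not part of KFP.

```python
from importlib.metadata import PackageNotFoundError, version

# The KFP-related distributions that `pip list | grep kfp` shows above.
KFP_PACKAGES = ("kfp", "kfp-pipeline-spec", "kfp-server-api")


def installed_versions(packages=KFP_PACKAGES):
    """Return {package: version string, or None if it is not installed}."""
    result = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


def is_kfp_v2(versions):
    """True if the installed kfp SDK has major version 2."""
    kfp_version = versions.get("kfp")
    return kfp_version is not None and kfp_version.split(".")[0] == "2"


if __name__ == "__main__":
    for name, ver in installed_versions().items():
        print(f"{name:20} {ver or 'not installed'}")
```

Checking the major version is a cheap guard, since the v1 and v2 SDKs produce different pipeline formats.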

Creating a Pipeline

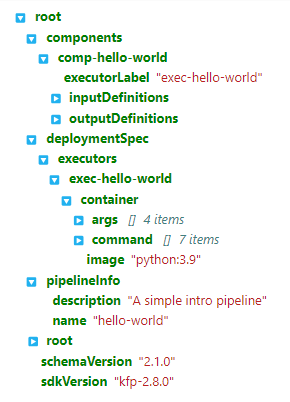

Using the Hello World sample code, here is the result of running hello_world.py in a notebook:

Then download the compiled hello_world_pipeline.json file to your local drive:
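Before uploading, it can be useful to peek at what the compiled file contains. The sketch below is a minimal example that assumes the KFP v2 IR field names `pipelineInfo.name`, `root.dag.tasks` and `root.inputDefinitions.parameters`; treat them as assumptions and compare against your own file. Also note that recent KFP 2.x releases warn that compiling to `.json` is deprecated in favour of `.yaml`; for a YAML file you would need a YAML parser (for example PyYAML) instead of `json`.

```python
import json


def load_pipeline_spec(path):
    """Load a pipeline spec compiled to JSON, e.g. hello_world_pipeline.json."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def summarize_pipeline_spec(spec):
    """Summarize a compiled KFP v2 pipeline spec (IR) loaded as a dict.

    Assumed field names: pipelineInfo.name, sdkVersion, root.dag.tasks and
    root.inputDefinitions.parameters. Missing fields are tolerated.
    """
    root = spec.get("root", {})
    tasks = root.get("dag", {}).get("tasks", {})
    params = root.get("inputDefinitions", {}).get("parameters", {})
    return {
        "name": spec.get("pipelineInfo", {}).get("name"),
        "sdk_version": spec.get("sdkVersion"),
        "tasks": sorted(tasks),
        # Pipeline parameters and their default values (None if no default).
        "parameters": {name: p.get("defaultValue") for name, p in params.items()},
    }


if __name__ == "__main__":
    print(summarize_pipeline_spec(load_pipeline_spec("hello_world_pipeline.json")))
```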

Running the Pipeline

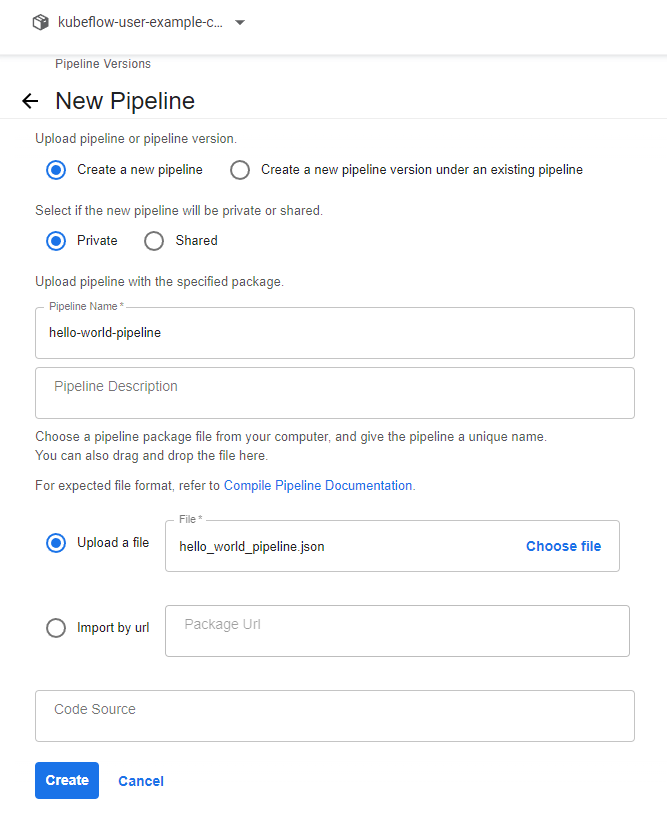

Following the official Run a Pipeline guide, I first upload the pipeline using the Upload pipeline button:

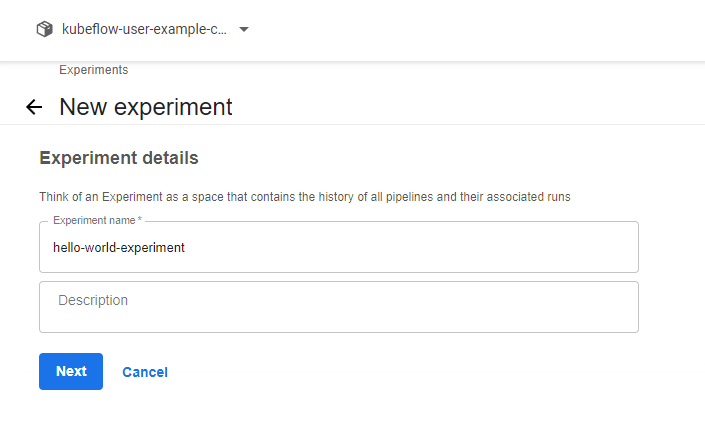

Next, open the hello-world-pipeline pipeline and click the Create experiment button.

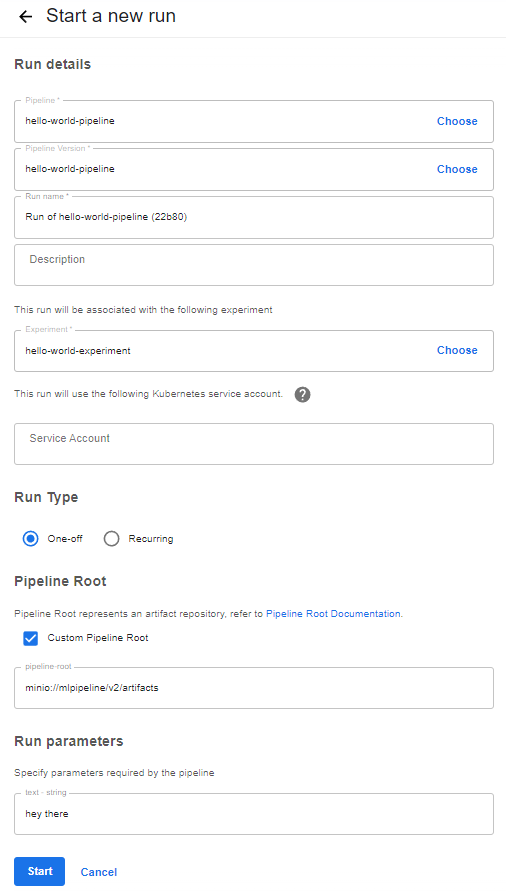

When starting the new run, select the pipeline and change the default pipeline-root to match your setup:

```
minio://mlpipeline/v2/artifacts
```
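The pipeline-root is a URI: the scheme selects the storage backend, the host part is the bucket, and the path is the key prefix under which artifacts are written. A small stdlib sketch that splits it into those parts can help when you later look for artifacts in MinIO; the function name is hypothetical. If you prefer not to change the root in the UI for every run, the KFP v2 `@dsl.pipeline` decorator also accepts a `pipeline_root` argument.

```python
from urllib.parse import urlparse


def split_pipeline_root(pipeline_root):
    """Split e.g. 'minio://mlpipeline/v2/artifacts' into
    (scheme, bucket, key_prefix) = ('minio', 'mlpipeline', 'v2/artifacts')."""
    parsed = urlparse(pipeline_root)
    if not parsed.scheme or not parsed.netloc:
        raise ValueError(
            f"expected <scheme>://<bucket>/<prefix>, got {pipeline_root!r}"
        )
    # The netloc is the bucket; the path (without slashes) is the key prefix.
    return parsed.scheme, parsed.netloc, parsed.path.strip("/")


if __name__ == "__main__":
    print(split_pipeline_root("minio://mlpipeline/v2/artifacts"))
```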

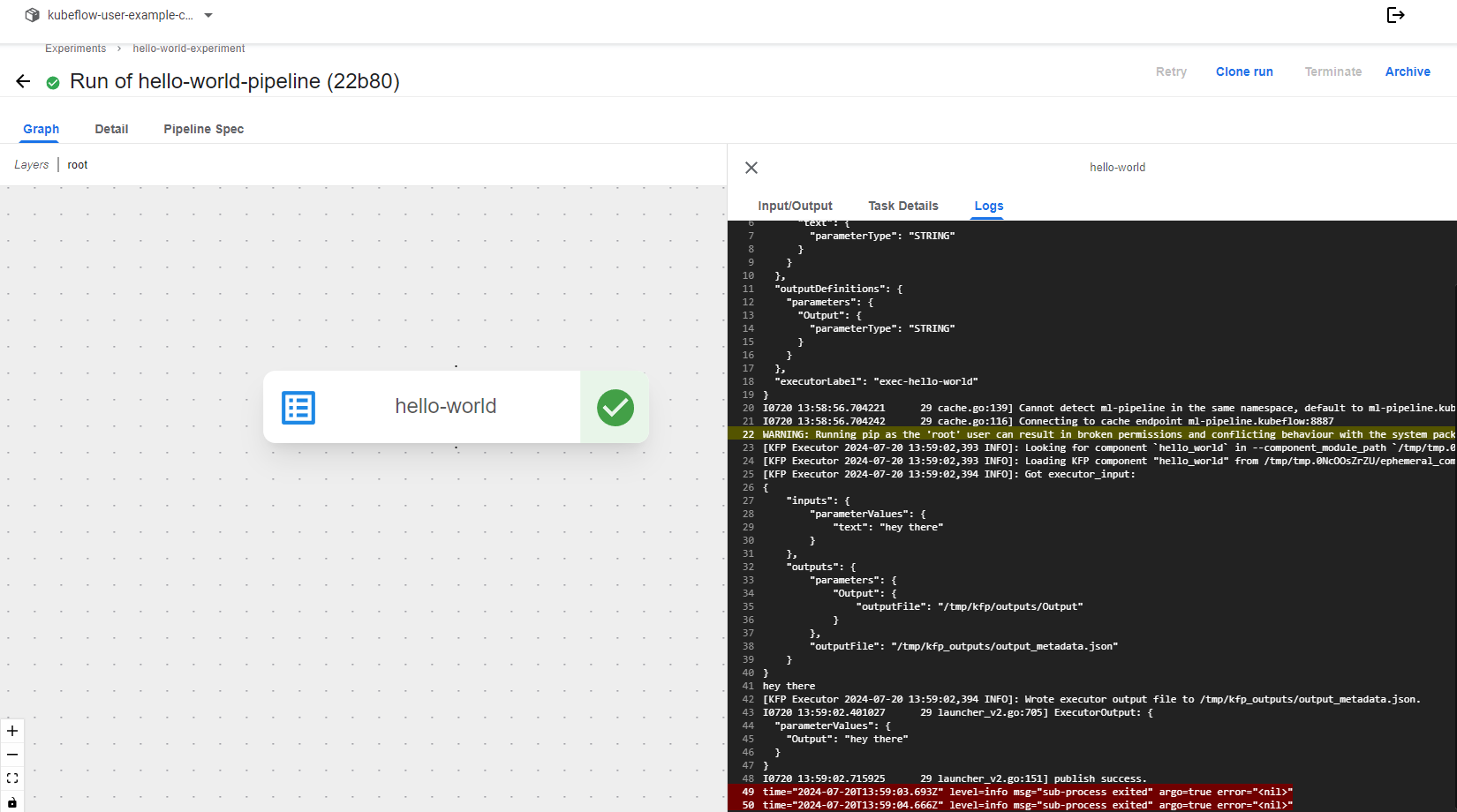

Here is the result of the run:

Sequential Pipeline

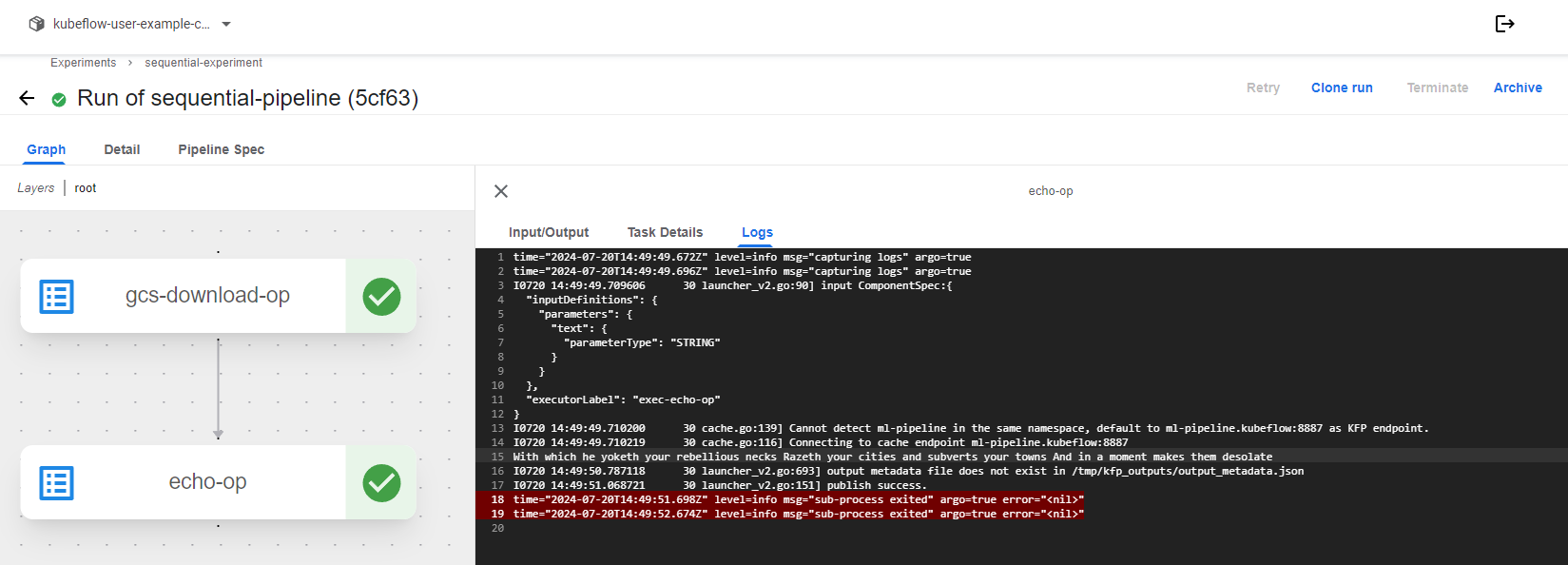

Using the Sequential sample code, here is the final run result:
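In a sequential pipeline each step must wait for the previous one, either through a data dependency or an explicit `task.after(...)` in the KFP v2 SDK. As I understand the IR, the compiler records this ordering in each task's `dependentTasks` list. The sketch below assumes that field name and uses the standard library's `graphlib` (Python 3.9+) to derive an order in which the tasks can run; the task names in the usage example are hypothetical.

```python
from graphlib import TopologicalSorter


def execution_order(spec):
    """Return the task names of a compiled KFP v2 spec so that every task
    comes after the tasks listed in its (assumed) dependentTasks field."""
    tasks = spec.get("root", {}).get("dag", {}).get("tasks", {})
    # graphlib expects {node: iterable of predecessors}.
    graph = {name: task.get("dependentTasks", []) for name, task in tasks.items()}
    # Raises graphlib.CycleError if the dependencies form a cycle.
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    # Hypothetical three-step sequential pipeline.
    spec = {"root": {"dag": {"tasks": {
        "step-3": {"dependentTasks": ["step-2"]},
        "step-1": {},
        "step-2": {"dependentTasks": ["step-1"]},
    }}}}
    print(execution_order(spec))
```

For a strict chain like this there is exactly one valid order; for branching pipelines, tasks with no path between them may appear in either order, which matches how KFP can run them in parallel.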

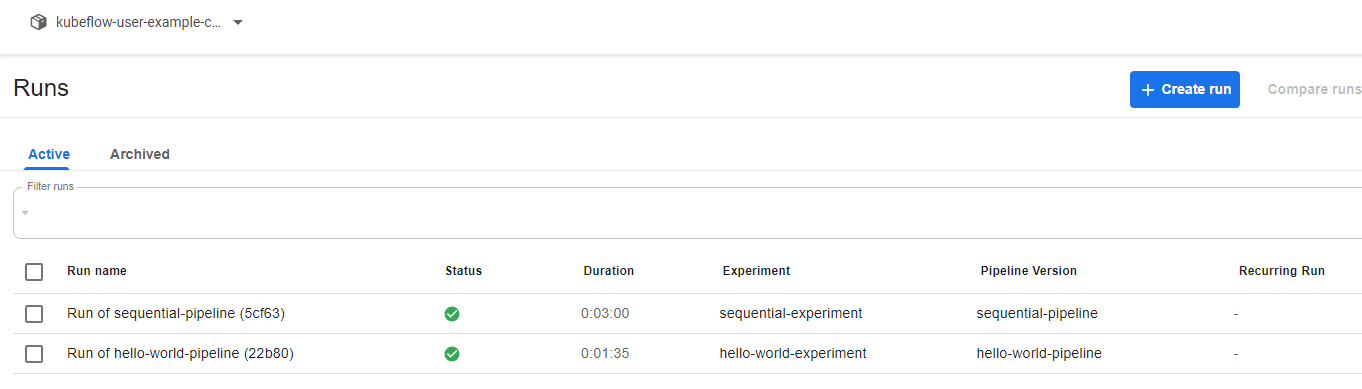

Here is a view of all the runs:

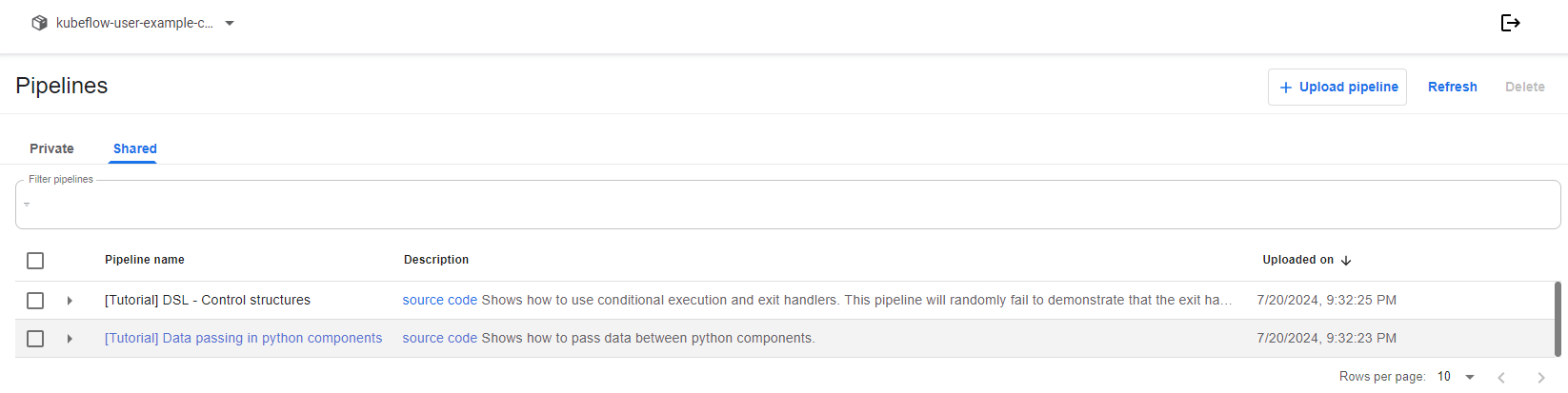

Shared Pipelines

To run one of the shared sample pipelines, click the Data passing in python components pipeline:

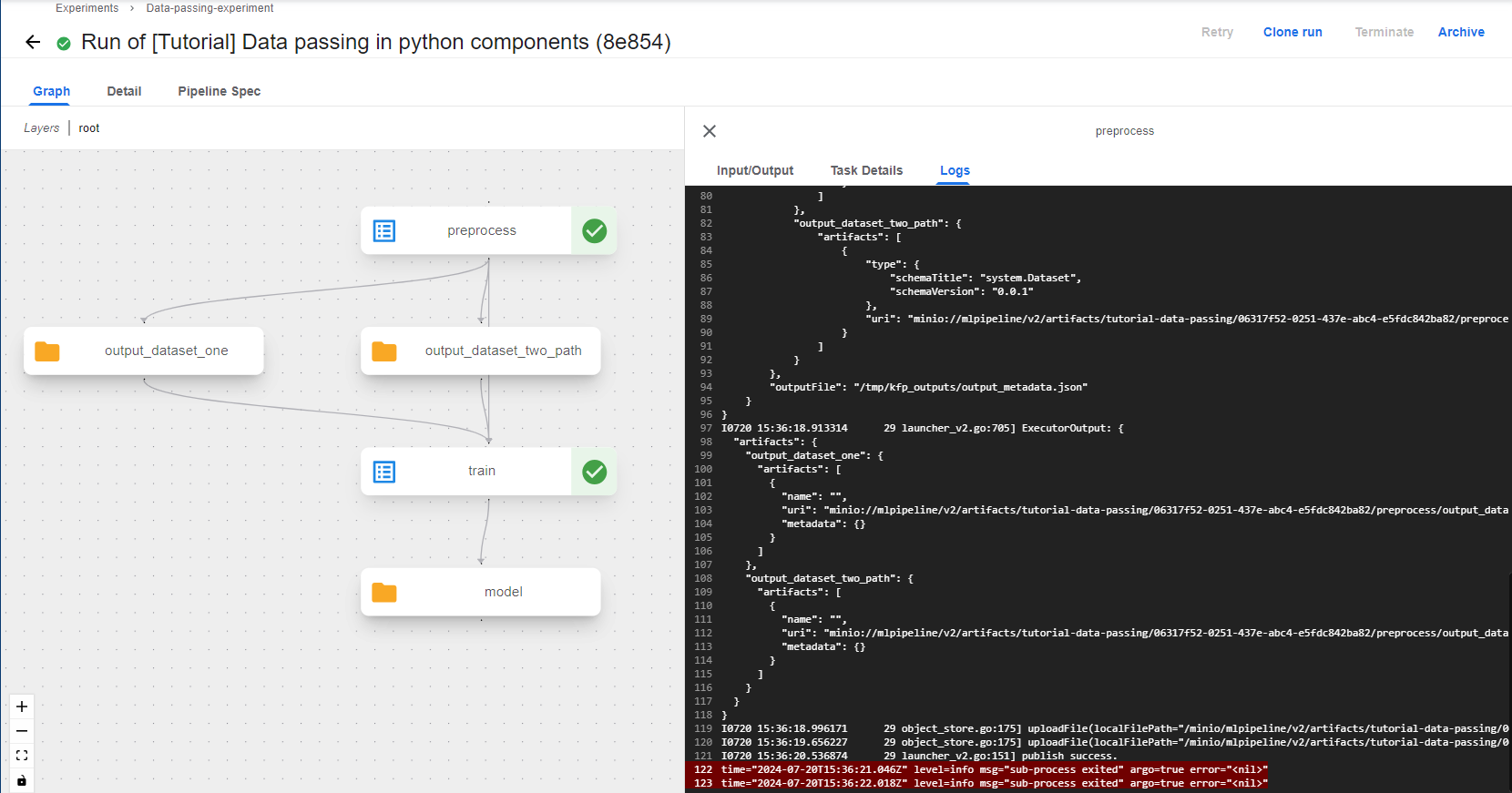

As with our own pipelines, click the Create experiment button and then start a new run. Here is the sample run result:

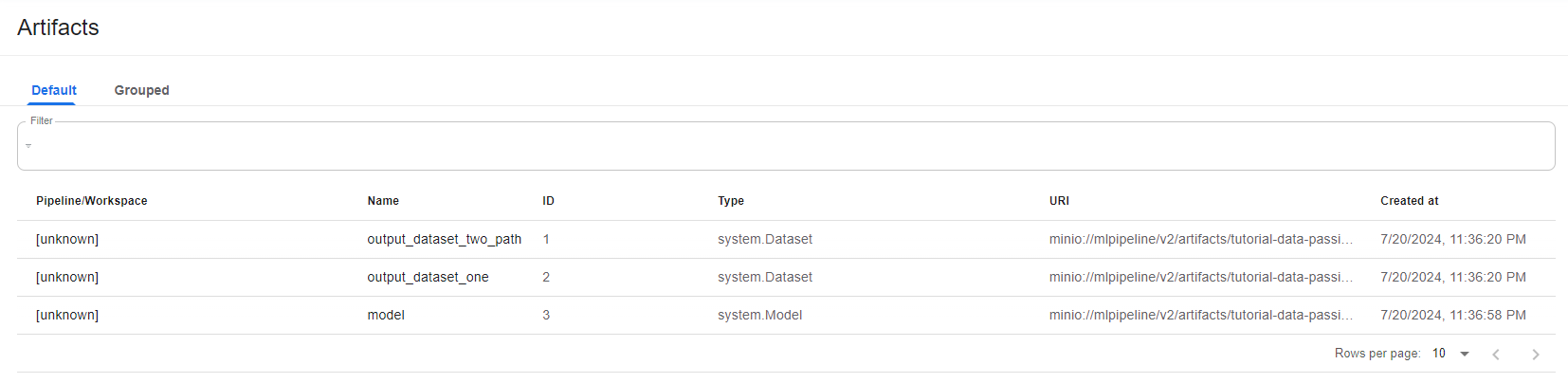

Artifacts

As expected, the artifacts are stored in the default MinIO path:

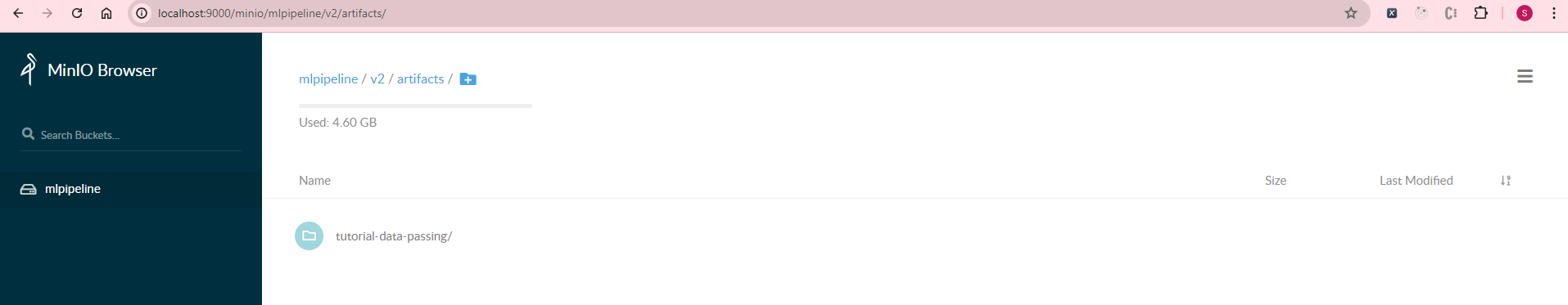

MinIO Browser

To open the MinIO browser, port-forward the minio-service service and navigate to http://localhost:9000/. The default username is minio and the password is minio123:

```shell
kubectl port-forward -n kubeflow svc/minio-service 9000:9000
```
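If the browser cannot reach http://localhost:9000/, a quick way to tell whether the port-forward itself is running is to try a plain TCP connection. This is a minimal stdlib sketch; `port_is_open` is my own helper name, and it only checks that something is listening, not that it is MinIO.

```python
import socket


def port_is_open(host="localhost", port=9000, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers connection refused and timeouts
        return False


if __name__ == "__main__":
    if port_is_open():
        print("localhost:9000 is reachable")
    else:
        print("localhost:9000 is not reachable; is the port-forward running?")
```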

Here is the view from the MinIO Browser:

K9s

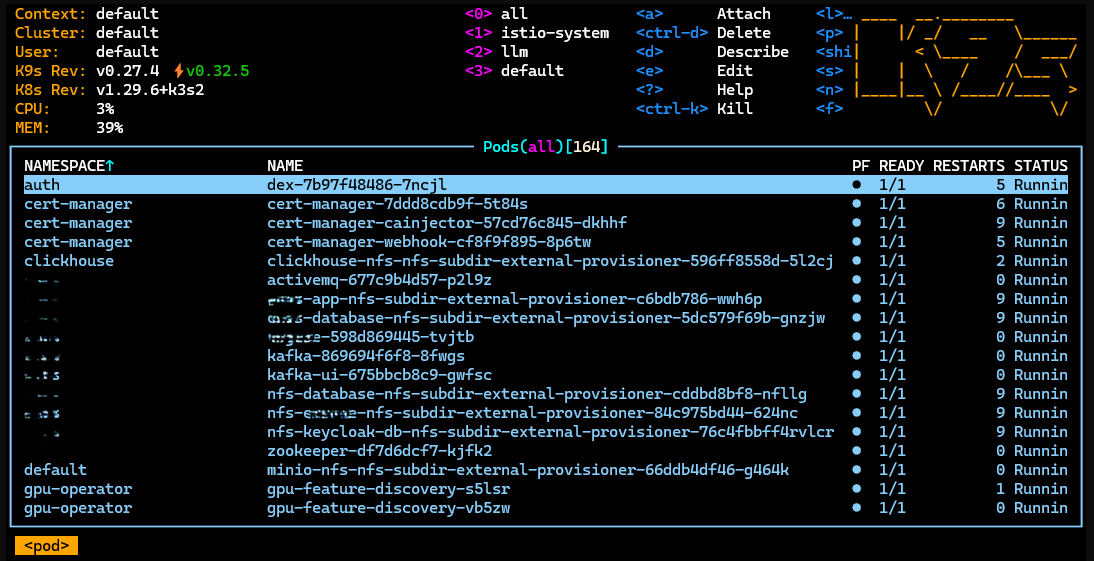

Because managing the many Kubeflow pods can be overwhelming, I'd like to introduce K9s, a terminal-based UI for interacting with your Kubernetes clusters.

To install K9s on Windows via Chocolatey, use the following command:

```powershell
choco install k9s

# To upgrade (run in an elevated shell)
choco upgrade k9s
```

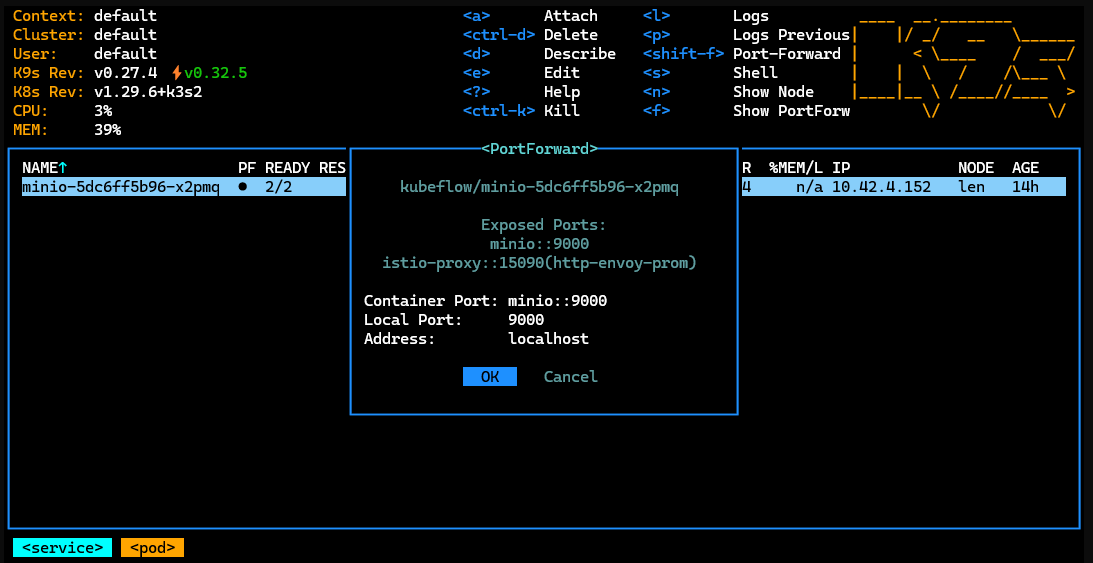

To set up the same MinIO port-forward from K9s, follow these steps:

- Type : (colon) and enter svc as the resource to view all the services

- Type / (slash) and enter minio to perform a search for the service

- Press Enter to go to the pod view, then press Shift-F to set up the port-forward

Note: You may have to copy the contents of .kube/config from your master node to %USERPROFILE%\.kube\config on your Windows machine.
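If K9s still cannot find your cluster after copying the file, it helps to check which kubeconfig file is actually read. kubectl looks at the KUBECONFIG environment variable first (a list of files separated by ';' on Windows and ':' elsewhere) and otherwise falls back to ~/.kube/config; as far as I know, K9s follows the same rules. A small sketch of that lookup, with a helper name of my own:

```python
import os
from pathlib import Path


def kubeconfig_paths(environ=None):
    """Return the kubeconfig file(s) kubectl would read.

    KUBECONFIG may list several files separated by os.pathsep; otherwise the
    default is ~/.kube/config (%USERPROFILE%\\.kube\\config on Windows).
    """
    env = os.environ if environ is None else environ
    value = env.get("KUBECONFIG", "")
    paths = [Path(p) for p in value.split(os.pathsep) if p]
    return paths or [Path.home() / ".kube" / "config"]


if __name__ == "__main__":
    for path in kubeconfig_paths():
        print(path, "exists" if path.is_file() else "MISSING")
```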

Some other useful commands are:

- Press ctrl-a at any time to see all resources

- Press ? to see contextual help

- Press esc to return to the previous resource

- Press ctrl-c to quit K9s

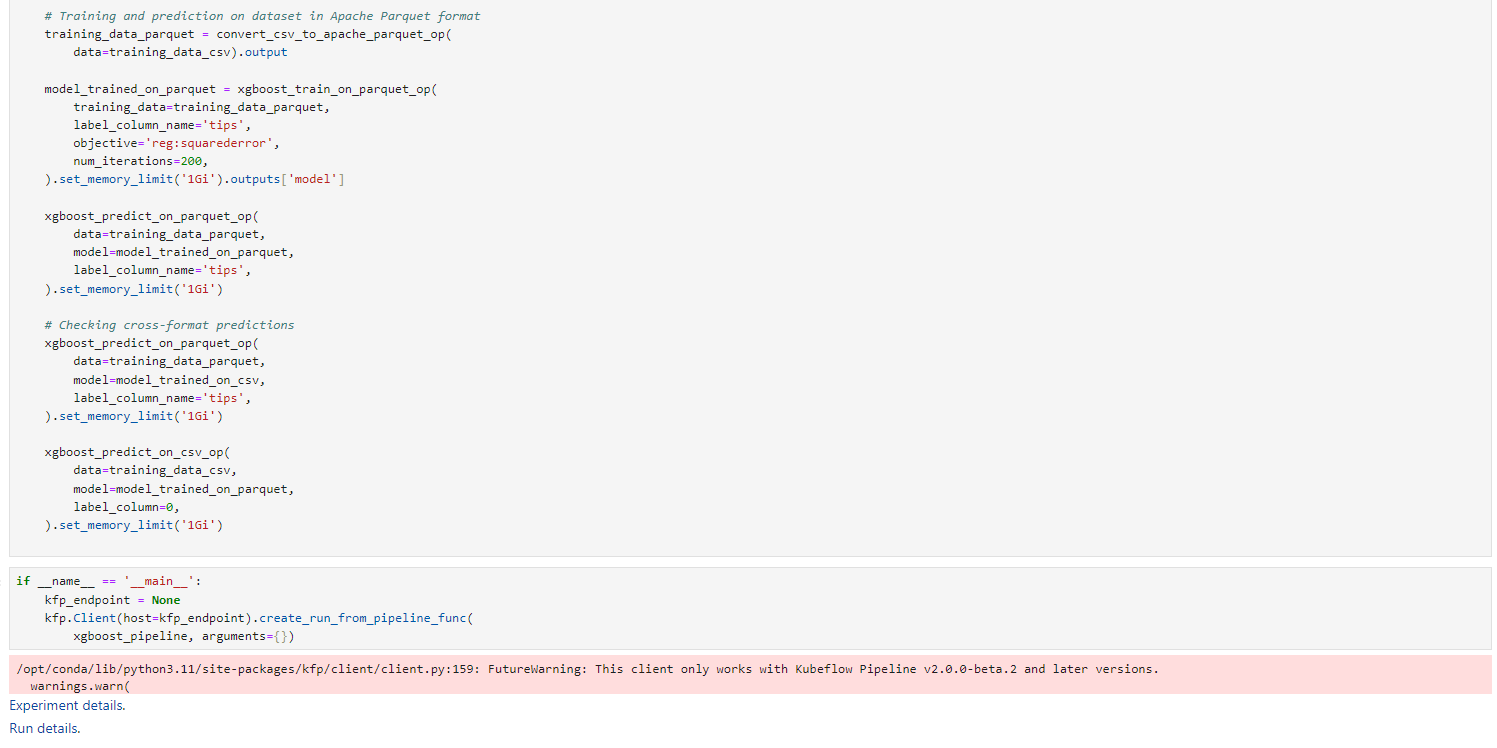

Optional: Run a Pipeline from the KFP SDK Client

To run a pipeline from the KFP SDK client, your notebook must be allowed to access the KFP API (see the Troubleshooting section below). Using the XGBoost sample code, here is the result:

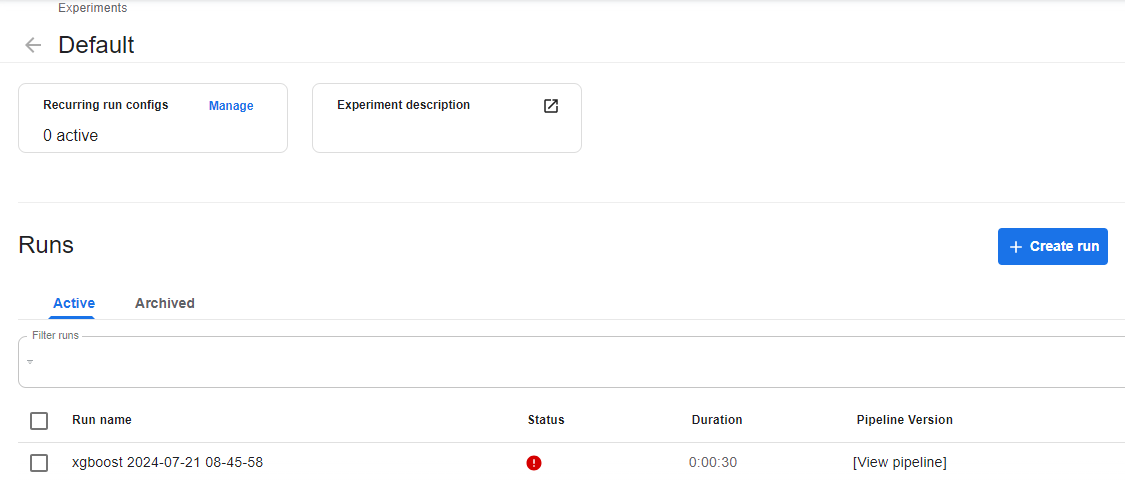

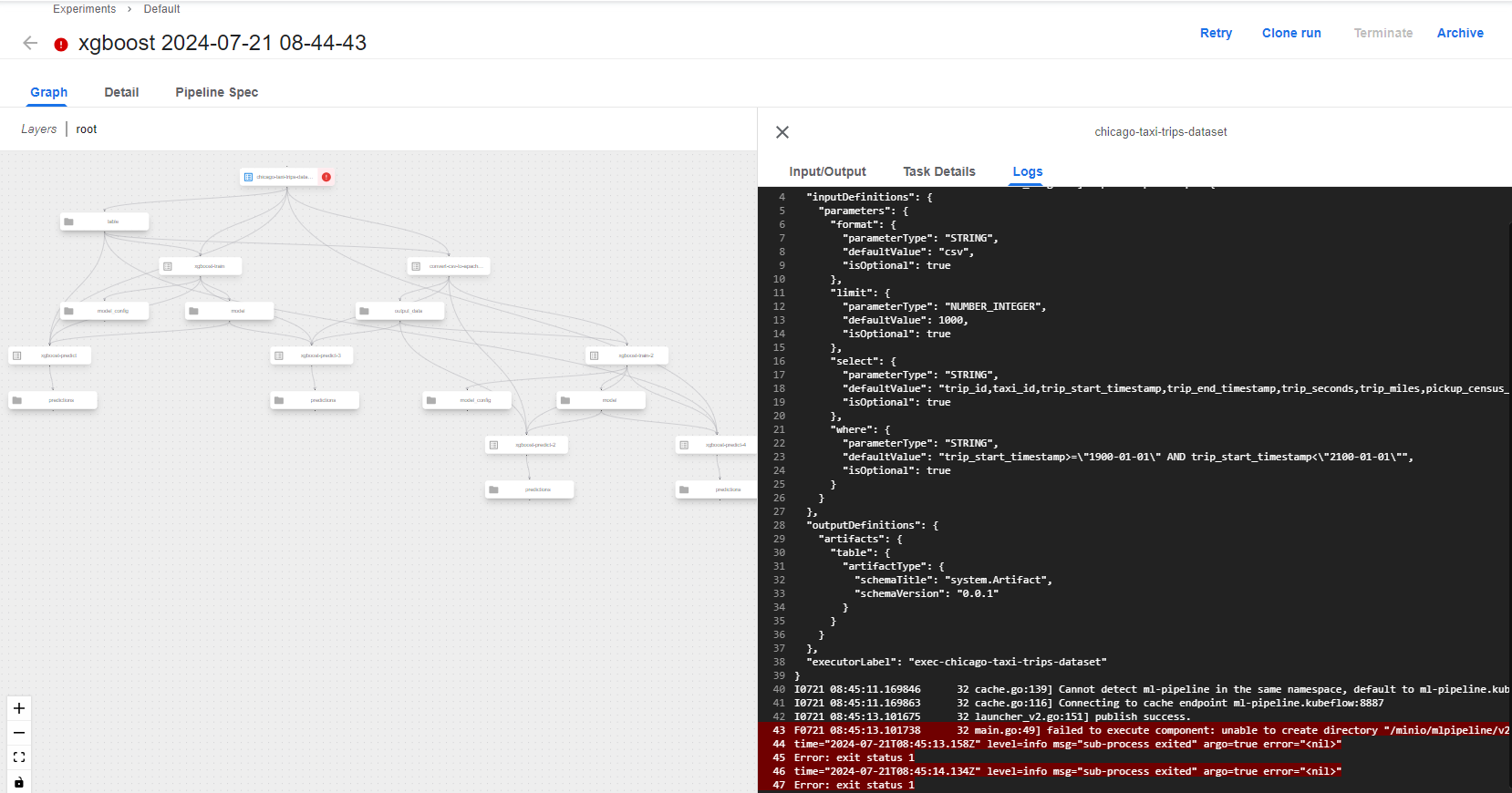

However, there are some RBAC errors. Clicking the Experiment details link shows the following:

And here is the Run details result:

Troubleshooting

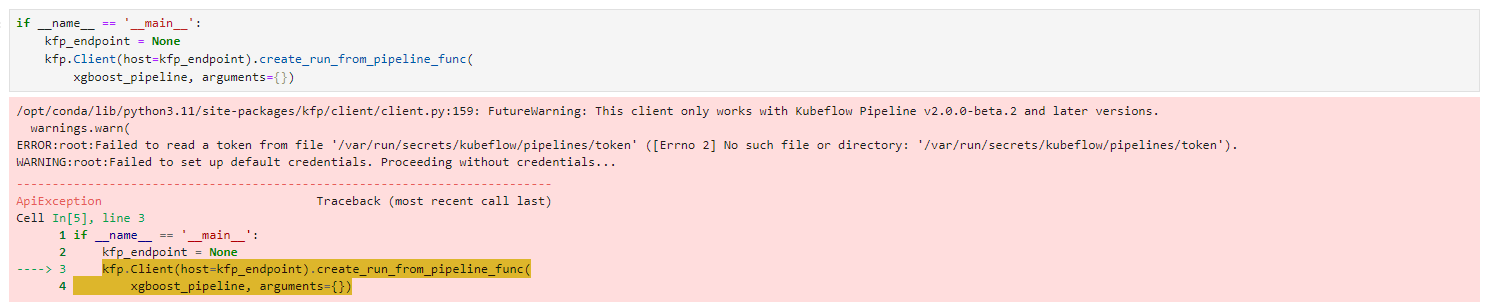

Failed to read a token from file

If you encounter this error when running a pipeline from the KFP client (by default, the client reads the token from /var/run/secrets/kubeflow/pipelines/token):

The fix is described in the next section.
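To confirm from inside the notebook whether the token is actually mounted, you can mimic the lookup the client performs. My understanding is that the KFP SDK reads the path from the KF_PIPELINES_SA_TOKEN_PATH environment variable and falls back to the default path above; treat that as an assumption. The function below is a hypothetical helper, not part of the SDK.

```python
import os
from pathlib import Path

DEFAULT_TOKEN_PATH = "/var/run/secrets/kubeflow/pipelines/token"
TOKEN_PATH_ENV = "KF_PIPELINES_SA_TOKEN_PATH"  # assumed env var name


def read_pipelines_token(environ=None):
    """Read the projected ServiceAccount token the KFP client would use.

    Looks at KF_PIPELINES_SA_TOKEN_PATH first, then the default mount path.
    """
    env = os.environ if environ is None else environ
    path = Path(env.get(TOKEN_PATH_ENV, DEFAULT_TOKEN_PATH))
    if not path.is_file():
        raise FileNotFoundError(
            f"No token at {path}; was the notebook created with "
            "'Allow access to Kubeflow Pipelines' enabled?"
        )
    return path.read_text(encoding="utf-8").strip()


if __name__ == "__main__":
    token = read_pipelines_token()
    print(f"Token found ({len(token)} characters)")
```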

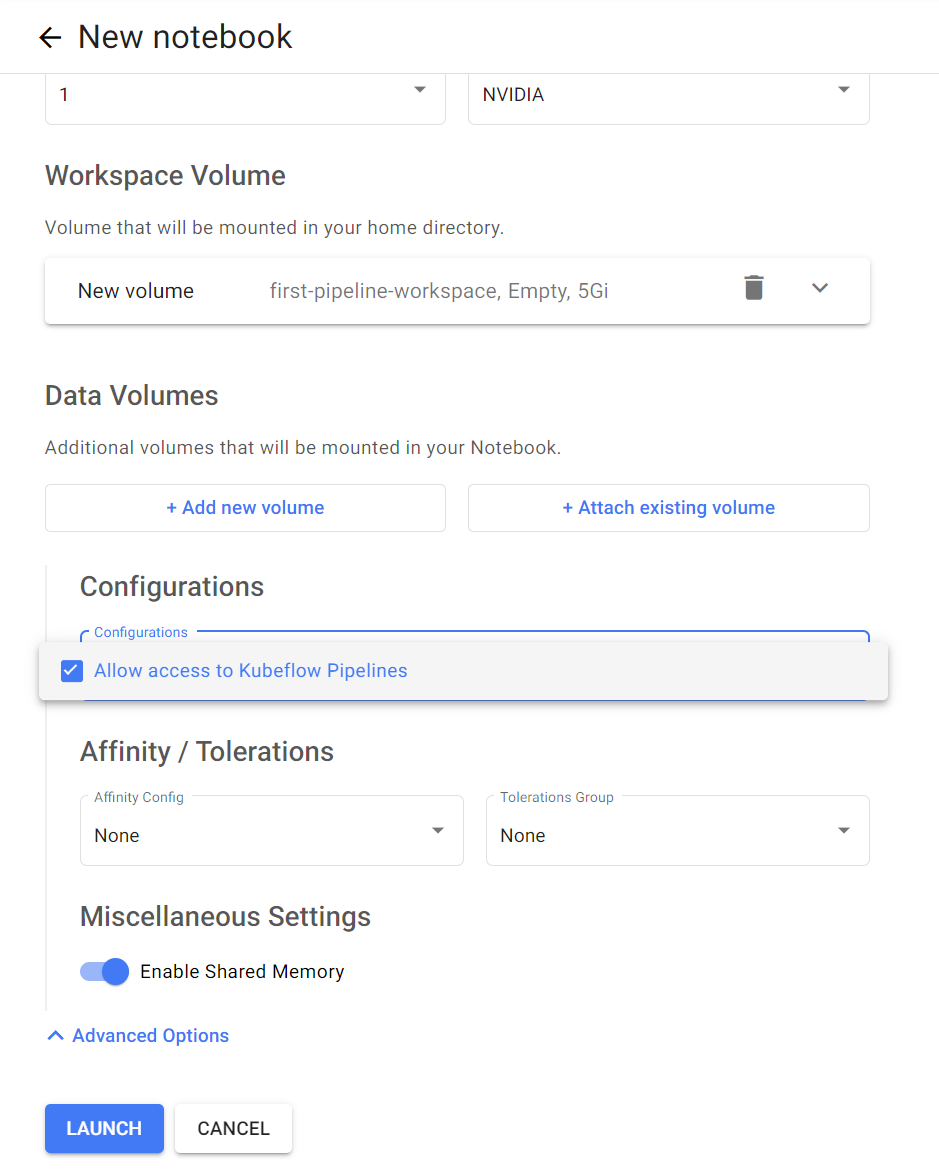

Missing Configurations - Allow access to Kubeflow Pipelines

You might not see this configuration option when creating a new notebook:

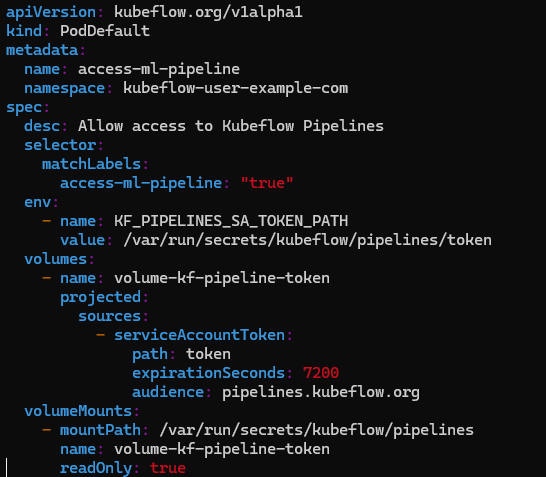

According to the Connect API guide, you may need to use Kubeflow’s PodDefaults to inject a ServiceAccount token volume into your Pods.

To fix this, pull the latest Kubeflow manifests and apply the PodDefault for the example user namespace (kubeflow-user-example-com):

```shell
cd manifests
kubectl apply -f tests/gh-actions/kf-objects/poddefaults.access-ml-pipeline.kubeflow-user-example-com.yaml
```
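To see what such a PodDefault does, here is a sketch that builds one as a Python dict and writes it as JSON (kubectl apply accepts JSON as well as YAML). The fields follow the example in the Connect API guide as I remember it, so treat the exact names, the 7200-second expiry and the audience as assumptions; the file in the manifests repository is the source of truth. The function and output file names are hypothetical.

```python
import json

NAMESPACE = "kubeflow-user-example-com"  # namespace of the example user profile
TOKEN_DIR = "/var/run/secrets/kubeflow/pipelines"


def access_ml_pipeline_poddefault(namespace=NAMESPACE, expiration_seconds=7200):
    """Build a PodDefault that mounts a projected ServiceAccount token for KFP."""
    return {
        "apiVersion": "kubeflow.org/v1alpha1",
        "kind": "PodDefault",
        "metadata": {"name": "access-ml-pipeline", "namespace": namespace},
        "spec": {
            # Shown as the option label in the notebook creation form.
            "desc": "Allow access to Kubeflow Pipelines",
            # Pods carrying this label (added when the option is selected) get the defaults.
            "selector": {"matchLabels": {"access-ml-pipeline": "true"}},
            "volumes": [{
                "name": "volume-kf-pipeline-token",
                "projected": {"sources": [{"serviceAccountToken": {
                    "path": "token",
                    "expirationSeconds": expiration_seconds,
                    "audience": "pipelines.kubeflow.org",
                }}]},
            }],
            "volumeMounts": [{
                "mountPath": TOKEN_DIR,
                "name": "volume-kf-pipeline-token",
                "readOnly": True,
            }],
            # Tells the KFP client where the token file is.
            "env": [{"name": "KF_PIPELINES_SA_TOKEN_PATH", "value": f"{TOKEN_DIR}/token"}],
        },
    }


if __name__ == "__main__":
    with open("poddefault-access-ml-pipeline.json", "w", encoding="utf-8") as f:
        json.dump(access_ml_pipeline_poddefault(), f, indent=2)
    # Then: kubectl apply -f poddefault-access-ml-pipeline.json
```

After applying it, create a new notebook and tick the option; existing notebooks do not pick up the PodDefault until their pods are recreated.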

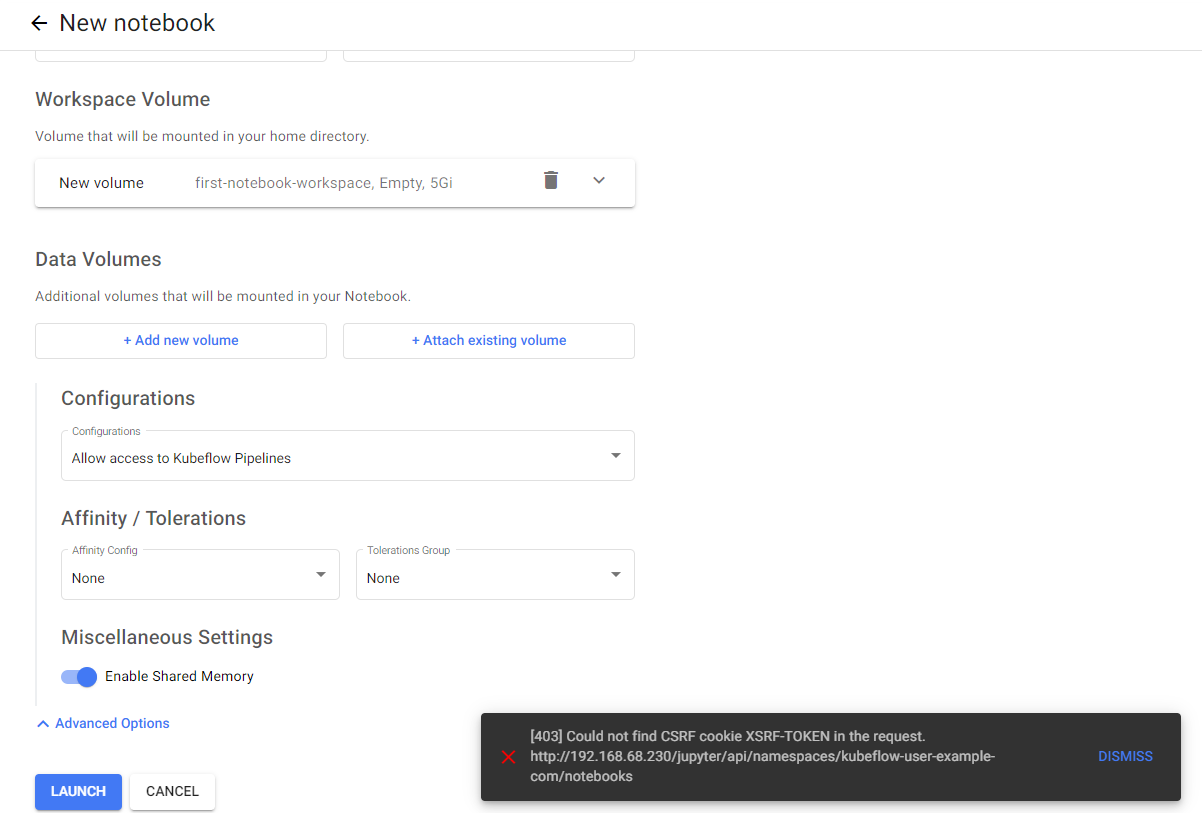

Could not find CSRF cookie XSRF-TOKEN in the request

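If you encounter this error, you are most likely exposing Kubeflow over plain HTTP: the web apps mark their CSRF cookie as Secure by default, and browsers only store and send Secure cookies over HTTPS.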

This is not recommended because of the security risks, but for a home lab setup like mine, you can edit apps/jupyter/jupyter-web-app/upstream/base/params.env and set JWA_APP_SECURE_COOKIES to false:

```
JWA_APP_SECURE_COOKIES=false
```

You may wish to change these as well:

```
# apps/tensorboard/tensorboards-web-app/upstream/base/params.env
TWA_APP_SECURE_COOKIES=false

# apps/volumes-web-app/upstream/base/params.env
VWA_APP_SECURE_COOKIES=false

# contrib/kserve/models-web-app/overlays/kubeflow/kustomization.yaml
APP_SECURE_COOKIES=false
```
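Editing several params.env files by hand is easy to get wrong, so here is a small stdlib sketch that sets a KEY=value line in place, keeping comments and other keys. It assumes you run it from the manifests directory and covers only the three params.env files; the kustomization.yaml setting is not a plain env file, so edit that one by hand. The helper and mapping names are my own. Either way, the changes only take effect after you rebuild and re-apply the affected components.

```python
from pathlib import Path

# params.env files and keys from the steps above, relative to the manifests checkout.
SECURE_COOKIE_SETTINGS = {
    "apps/jupyter/jupyter-web-app/upstream/base/params.env": "JWA_APP_SECURE_COOKIES",
    "apps/tensorboard/tensorboards-web-app/upstream/base/params.env": "TWA_APP_SECURE_COOKIES",
    "apps/volumes-web-app/upstream/base/params.env": "VWA_APP_SECURE_COOKIES",
}


def set_env_flag(path, key, value):
    """Set KEY=value in a KEY=VALUE file, appending it if absent.

    Comment lines and other keys are kept. Returns True if the file was rewritten.
    """
    path = Path(path)
    original = path.read_text(encoding="utf-8")
    lines = original.splitlines()
    found = False
    for i, line in enumerate(lines):
        stripped = line.strip()
        if stripped.startswith("#") or "=" not in stripped:
            continue  # skip comments and blank/malformed lines
        if stripped.split("=", 1)[0].strip() == key:
            lines[i] = f"{key}={value}"
            found = True
    if not found:
        lines.append(f"{key}={value}")
    updated = "\n".join(lines) + "\n"
    if updated == original:
        return False
    path.write_text(updated, encoding="utf-8")
    return True


if __name__ == "__main__":
    for rel_path, key in SECURE_COOKIE_SETTINGS.items():
        changed = set_env_flag(rel_path, key, "false")
        print(f"{rel_path}: {'updated' if changed else 'already set'}")
```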